publications

I attempt to keep this list up to date. Also check my Google Scholar profile.

2025

Conference Publications

-

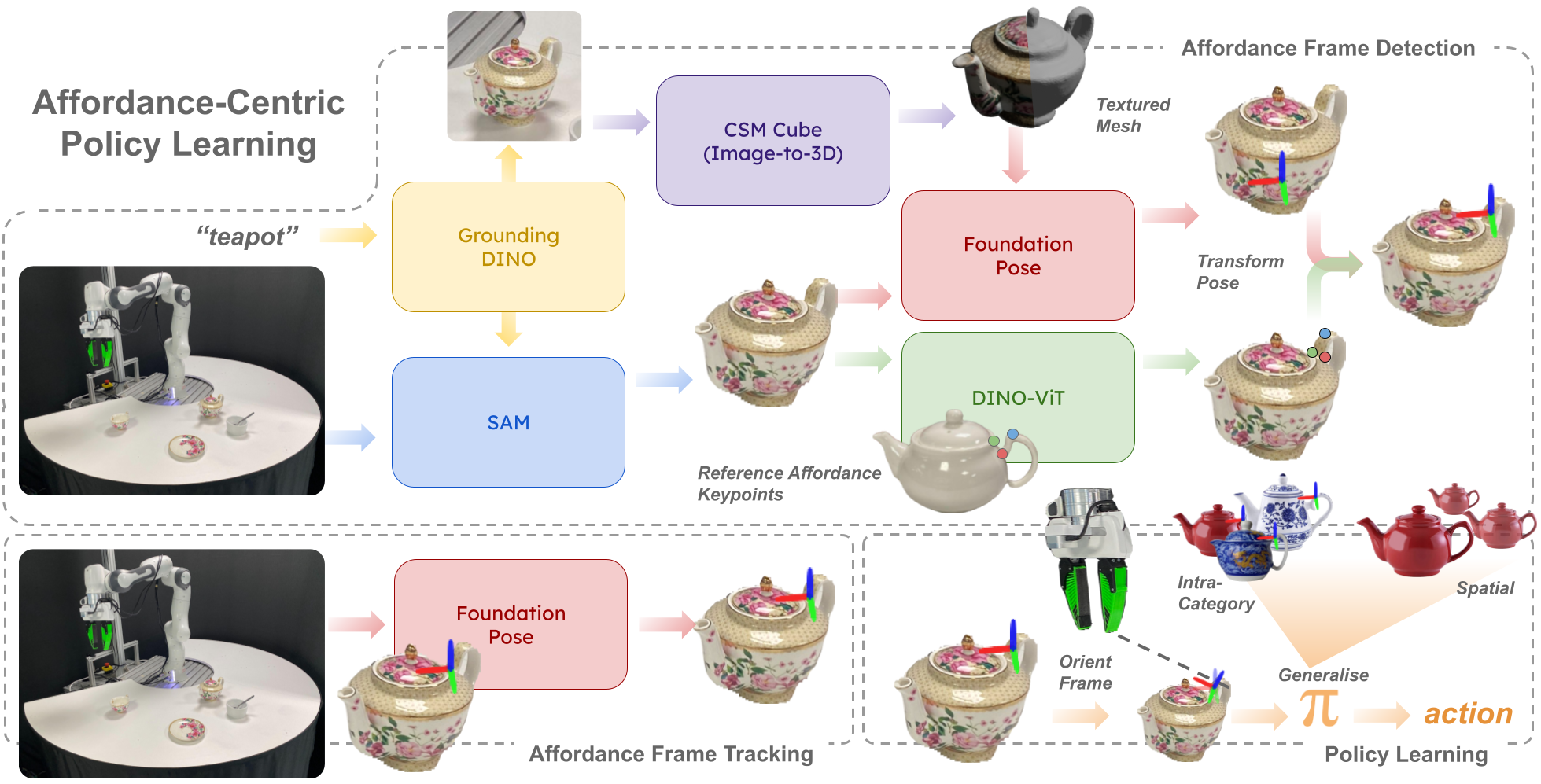

Learning from 10 Demos: Generalisable and Sample-Efficient Policy Learning with Oriented Affordance Frames In Conference on Robot Learning (CoRL), 2025.

We introduce oriented affordance frames, a structured representation for state and action spaces that improves spatial and intra-category generalisation and enables policies to be learned efficiently from only 10 demonstrations. More importantly, we show how this abstraction allows for compositional generalisation of independently trained sub-policies to solve long-horizon, multi-object tasks. To seamlessly transition between sub-policies, we introduce the notion of self-progress prediction, which we directly derive from the duration of the training demonstrations. We validate our method across three real-world tasks, each requiring multi-step, multi-object interactions. Despite the small dataset, our policies generalise robustly to unseen object appearances, geometries, and spatial arrangements, achieving high success rates without reliance on exhaustive training data.

[arXiv]

[website]
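The self-progress signal mentioned in the abstract is derived from the duration of the training demonstrations. A minimal sketch of one way this could look, assuming the label for each timestep is its normalised index within the demonstration and that a sub-policy hands over once predicted progress crosses a threshold. The function names and the 0.95 threshold are hypothetical and are not taken from the paper.

```python
from typing import List


def progress_labels(num_steps: int) -> List[float]:
    """Label each timestep of one demonstration with normalised progress in [0, 1].

    Assumption: progress grows linearly with the timestep index.
    """
    if num_steps < 1:
        raise ValueError("a demonstration needs at least one step")
    if num_steps == 1:
        return [1.0]
    return [t / (num_steps - 1) for t in range(num_steps)]


def should_switch(predicted_progress: float, threshold: float = 0.95) -> bool:
    """Hand over to the next sub-policy once predicted progress passes the threshold.

    The threshold value is a hypothetical choice for illustration.
    """
    return predicted_progress >= threshold


# Labels for a five-step demonstration.
labels = progress_labels(5)
```

A policy head trained to regress these labels would then expose a scalar that `should_switch` can monitor at run time.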

-

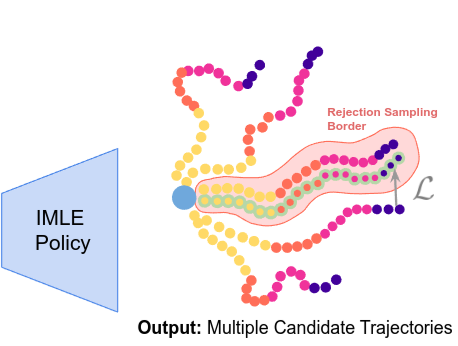

IMLE Policy: Fast and Sample Efficient Visuomotor Policy Learning via Implicit Maximum Likelihood Estimation In Robotics: Science and Systems (RSS), 2025.

We introduce IMLE Policy, a novel behaviour cloning approach based on Implicit Maximum Likelihood Estimation (IMLE). IMLE Policy excels in low-data regimes, effectively learning from minimal demonstrations and requiring 38% less data on average to match the performance of baseline methods in learning complex multi-modal behaviours. Its simple generator-based architecture enables single-step action generation, improving inference speed by 97.3% compared to Diffusion Policy, while outperforming single-step Flow Matching.

[arXiv]

[website]

-

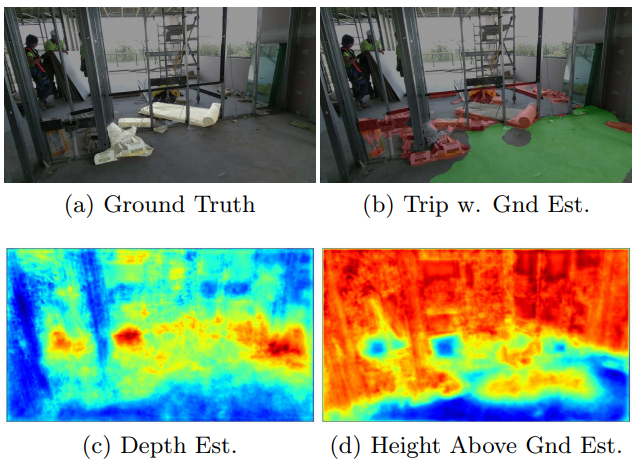

RMMI: Enhanced Obstacle Avoidance for Reactive Mobile Manipulation using an Implicit Neural Map In International Conference on Intelligent Robots and Systems (IROS), 2025.

We introduce a novel reactive control framework for mobile manipulators operating in complex, static environments. Our approach leverages a neural Signed Distance Field (SDF) to model intricate environment details and incorporates this representation as inequality constraints within a Quadratic Program (QP) to coordinate robot joint and base motion. A key contribution is the introduction of an active collision avoidance cost term that maximises the total robot distance to obstacles during the motion. We first evaluate our approach in a simulated reaching task, outperforming previous methods that rely on representing both the robot and the scene as a set of primitive geometries. Compared with the baseline, our approach improves the task success rate by 25% in total, 10% of which is attributable to the active collision cost. We also demonstrate our approach on a real-world platform, showing its effectiveness in reaching target poses in cluttered and confined spaces using environment models built directly from sensor data.

[arXiv]

[website]

-

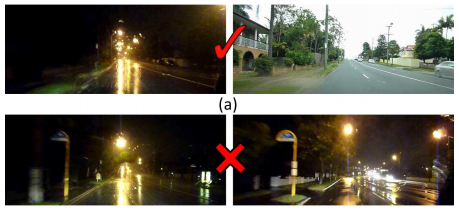

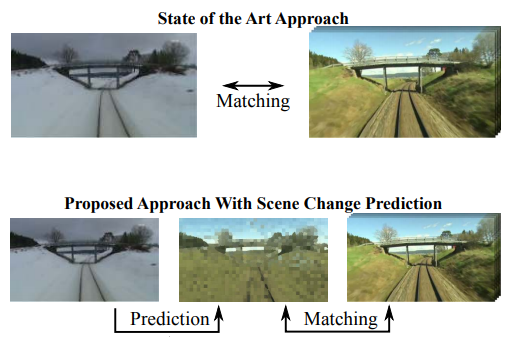

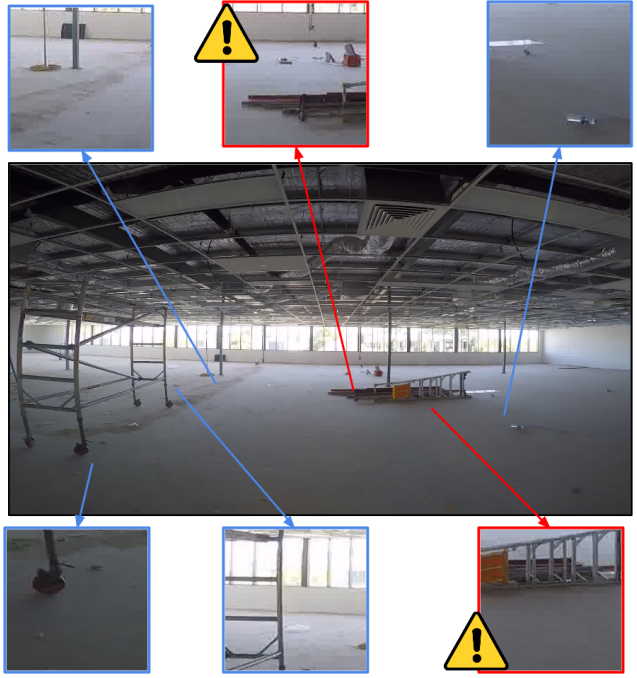

Multi-View Pose-Agnostic Change Localization with Zero Labels In Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

We propose a novel label-free, pose-agnostic change detection method that integrates information from multiple viewpoints to construct a change-aware 3D Gaussian Splatting (3DGS) representation of the scene. With as few as 5 images of the post-change scene, our approach can learn additional change channels in a 3DGS and produce change masks that outperform single-view techniques. Our change-aware 3D scene representation additionally enables the generation of accurate change masks for unseen viewpoints. Experimental results demonstrate state-of-the-art performance in complex multi-object scenes, achieving a 1.7 and 1.6 improvement in Mean Intersection Over Union and F1 score respectively over other baselines. We also contribute a new real-world dataset to benchmark change detection in diverse challenging scenes in the presence of lighting variations.

[arXiv]

arXiv Preprints

-

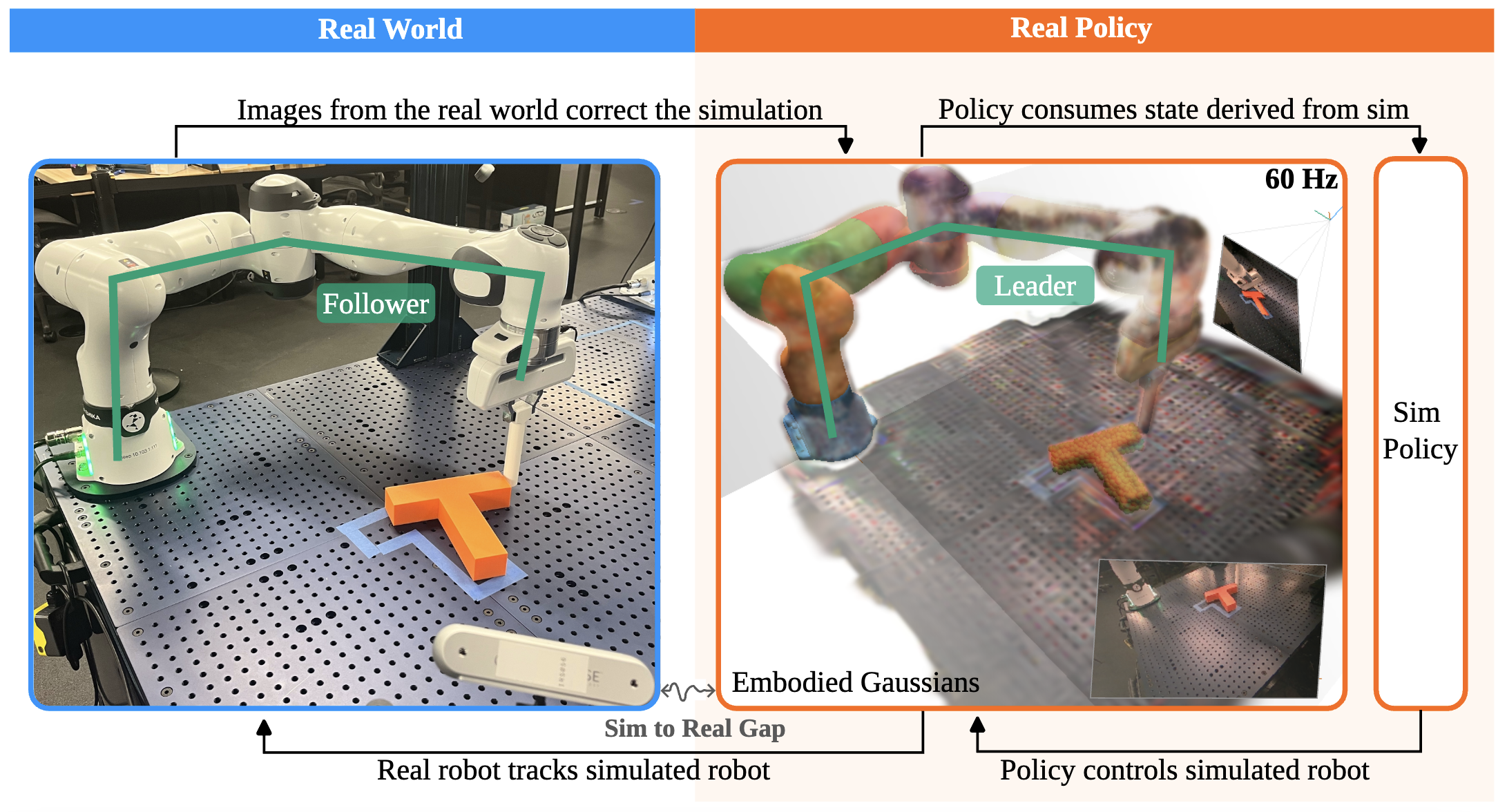

Real-is-Sim: Bridging the Sim-to-Real Gap with a Dynamic Digital Twin for Real-World Robot Policy Evaluation arXiv preprint arXiv:2504.03597, 2025.

We introduce real-is-sim, a new approach to integrating simulation into behavior cloning pipelines. In contrast to real-only methods, which lack the ability to safely test policies before deployment, and sim-to-real methods, which require complex adaptation to cross the sim-to-real gap, our framework allows policies to seamlessly switch between running on real hardware and running in parallelized virtual environments. At the center of real-is-sim is a dynamic digital twin, powered by the Embodied Gaussian simulator, that synchronizes with the real world at 60 Hz. This twin acts as a mediator between the behavior cloning policy and the real robot. Policies are trained using representations derived from simulator states and always act on the simulated robot, never the real one. During deployment, the real robot simply follows the simulated robot’s joint states, and the simulation is continuously corrected with real-world measurements. This setup, where the simulator drives all policy execution and maintains real-time synchronization with the physical world, shifts the responsibility of crossing the sim-to-real gap to the digital twin’s synchronization mechanisms, instead of the policy itself.

[arXiv]

[website]

2024

Journal Articles

-

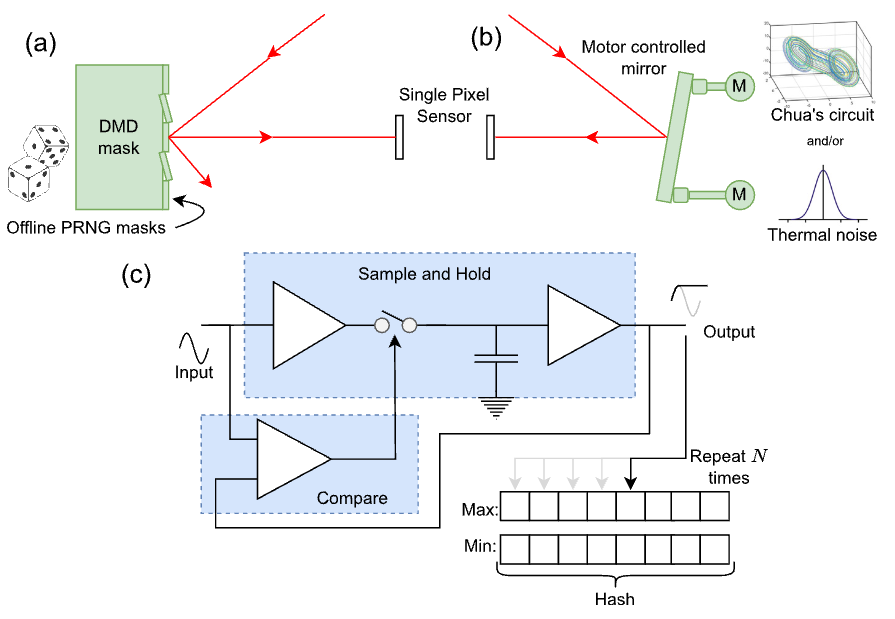

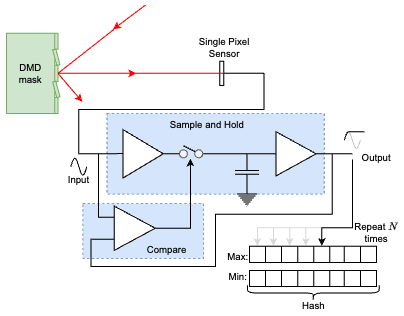

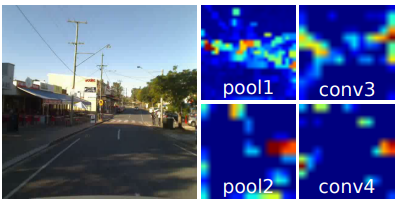

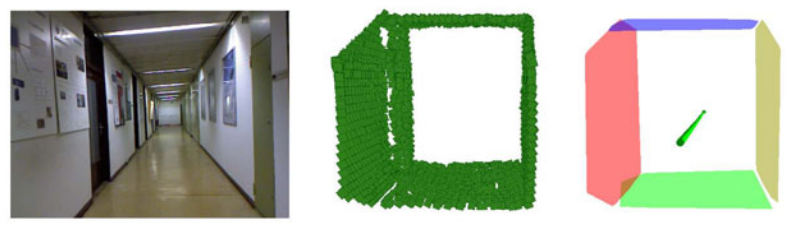

Inherently privacy-preserving vision for trustworthy autonomous systems: Needs and solutions Journal of Responsible Technology, 2024.

This paper is a call to action to consider privacy in robotic vision. We propose a specific form of inherent privacy preservation in which no images are captured or could be reconstructed by an attacker, even with full remote access. We present a set of principles by which such systems could be designed, employing data-destroying operations and obfuscation in the optical and analogue domains. These cameras never see a full scene. Our localisation case study demonstrates in simulation four implementations that all fulfil this task. The design space of such systems is vast despite the constraints of optical-analogue processing. We hope to inspire future works that expand the range of applications open to sighted robotic systems.

[website]

Conference Publications

-

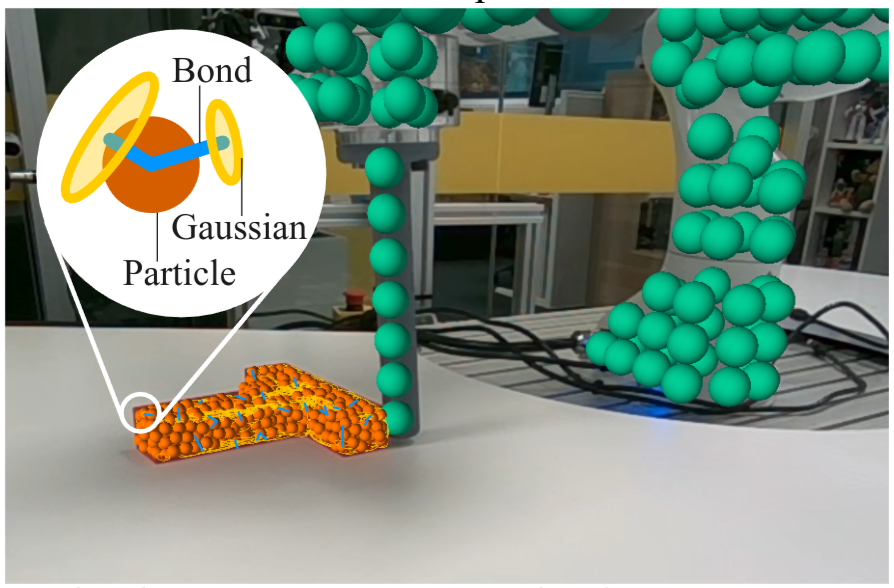

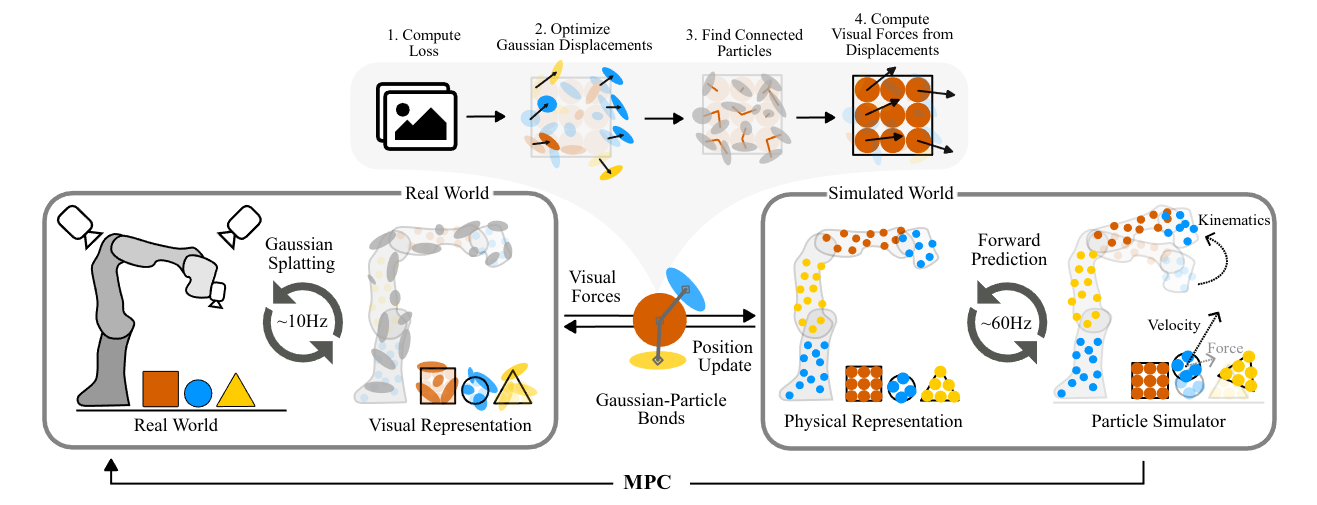

Physically Embodied Gaussian Splatting: A Realtime Correctable World Model for Robotics In Conference on Robot Learning (CoRL), 2024. Oral Presentation

We propose a novel dual "Gaussian-Particle" representation that models the physical world while (i) enabling predictive simulation of future states and (ii) allowing online correction from visual observations in a dynamic world. Our representation comprises particles that capture the geometrical aspect of objects in the world and can be used alongside a particle-based physics system to anticipate physically plausible future states. Attached to these particles are 3D Gaussians that render images from any viewpoint through a splatting process, thus capturing the visual state. By comparing the predicted and observed images, our approach generates "visual forces" that correct the particle positions while respecting known physical constraints. By integrating predictive physical modeling with continuous visually derived corrections, our unified representation reasons about the present and future while synchronizing with reality.

[arXiv]

[website]

-

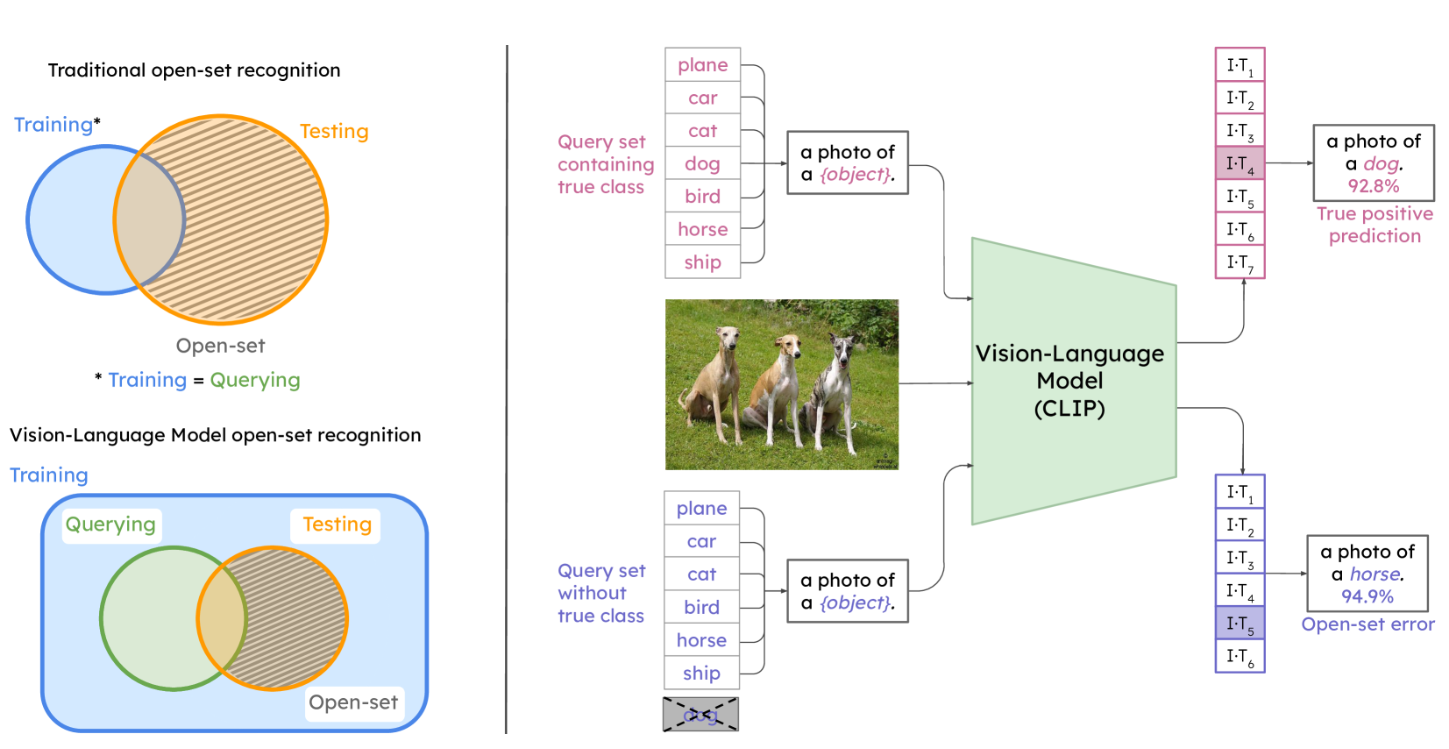

Open-Set Recognition in the Age of Vision-Language Models In European Conference on Computer Vision (ECCV), 2024.

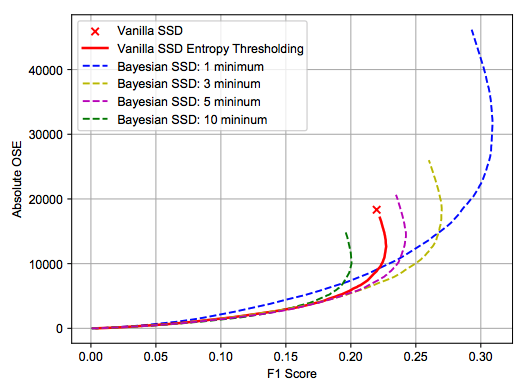

Are vision-language models (VLMs) open-set models because they are trained on internet-scale datasets? We answer this question with a clear no – VLMs introduce closed-set assumptions via their finite query set, making them vulnerable to open-set conditions. We systematically evaluate VLMs for open-set recognition and find they frequently misclassify objects not contained in their query set, leading to alarmingly low precision when tuned for high recall and vice versa. We show that naively increasing the size of the query set to contain more and more classes does not mitigate this problem, but instead causes diminishing task performance and open-set performance. We establish a revised definition of the open-set problem for the age of VLMs, define a new benchmark and evaluation protocol to facilitate standardised evaluation and research in this important area, and evaluate promising baseline approaches based on predictive uncertainty and dedicated negative embeddings on a range of VLM classifiers and object detectors.

[arXiv]

[Code]

-

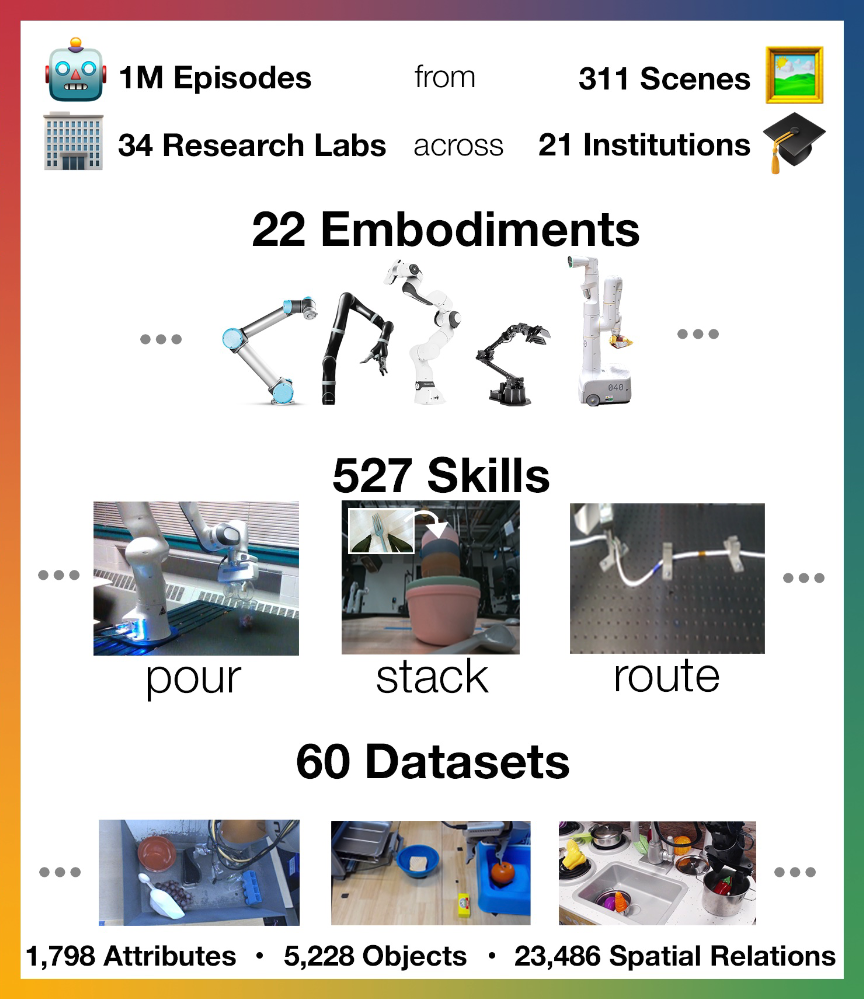

Open X-Embodiment: Robotic Learning Datasets and RT-X Models In IEEE Conference on Robotics and Automation (ICRA), 2024. ICRA Best Conference Paper. ICRA Best Paper Award in Robot Manipulation.

Conventionally, robotic learning methods train a separate model for every application, every robot, and even every environment. Can we instead train a “generalist” X-robot policy that can be adapted efficiently to new robots, tasks, and environments? In this paper, we provide datasets in standardized data formats and models to make it possible to explore this possibility in the context of robotic manipulation, alongside experimental results that provide an example of effective X-robot policies. We assemble a dataset from 22 different robots collected through a collaboration between 21 institutions, demonstrating 527 skills (160266 tasks). We show that a high-capacity model trained on this data, which we call RT-X, exhibits positive transfer and improves the capabilities of multiple robots by leveraging experience from other platforms.

[arXiv]

[website]

-

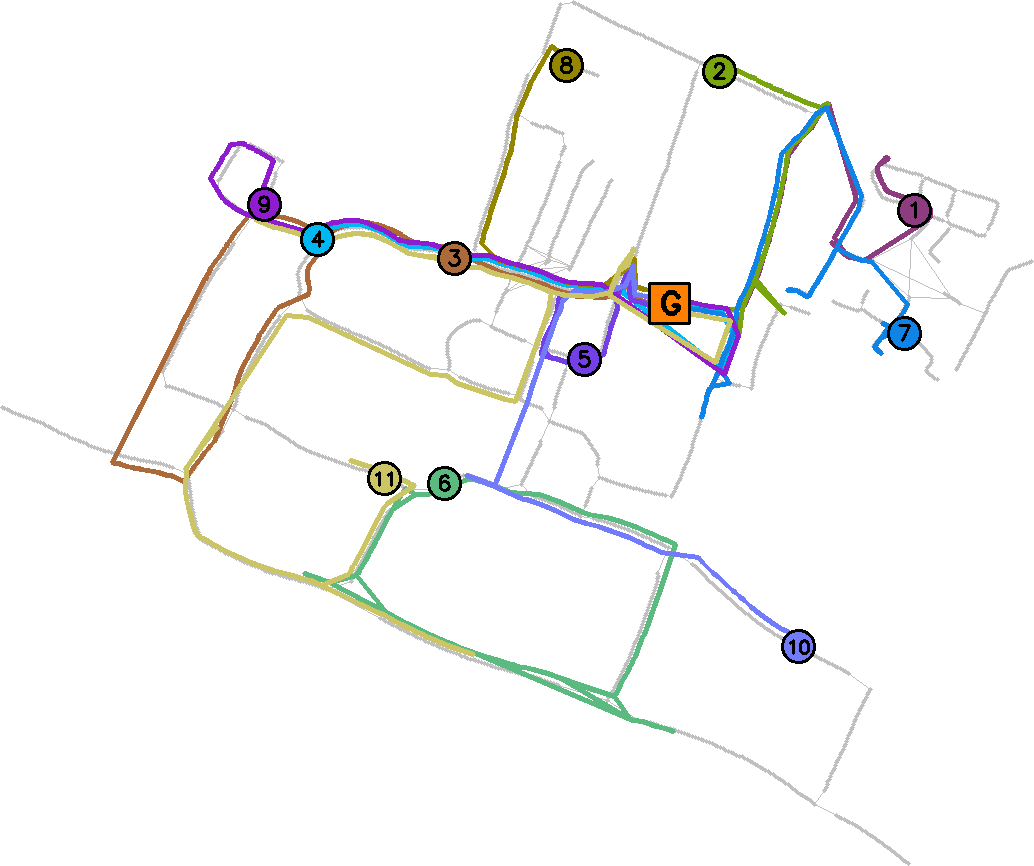

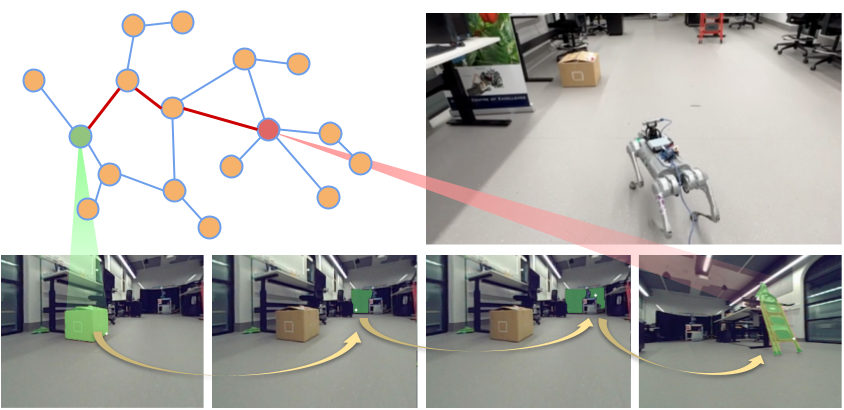

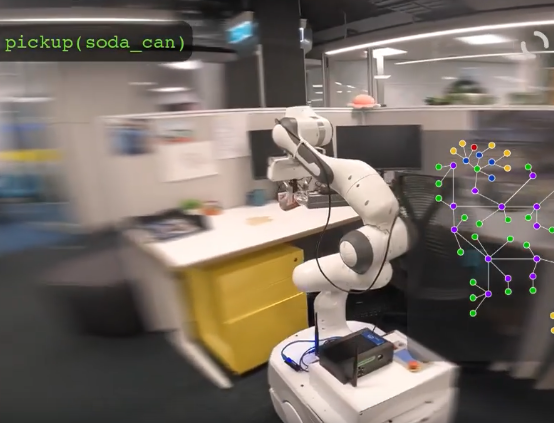

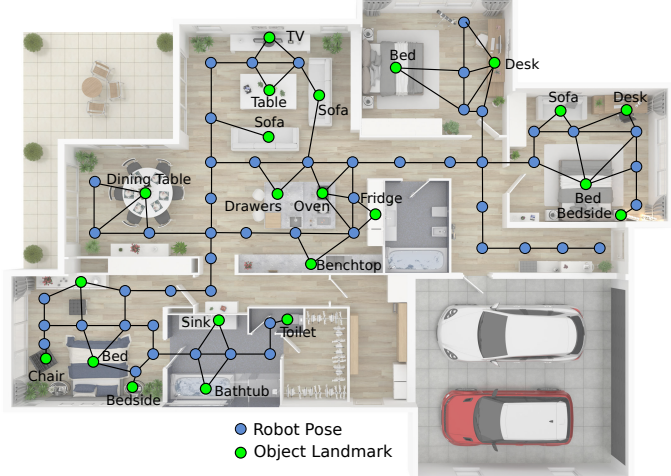

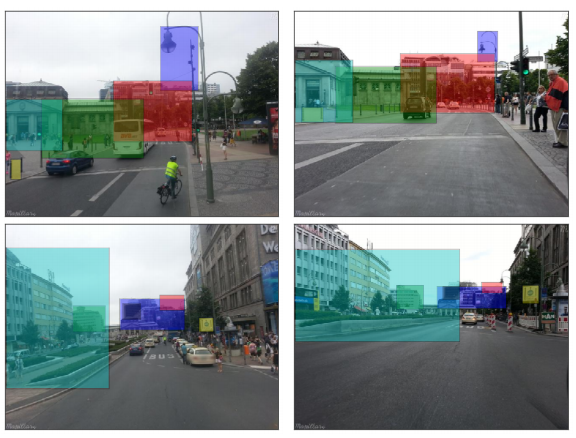

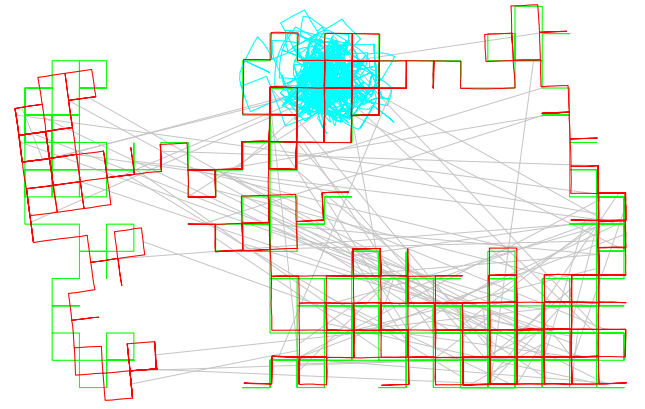

RoboHop: Segment-based Topological Map Representation for Open-World Visual Navigation In IEEE Conference on Robotics and Automation (ICRA), 2024. Oral Presentation at ICRA, Oral Presentation at CoRL Workshop

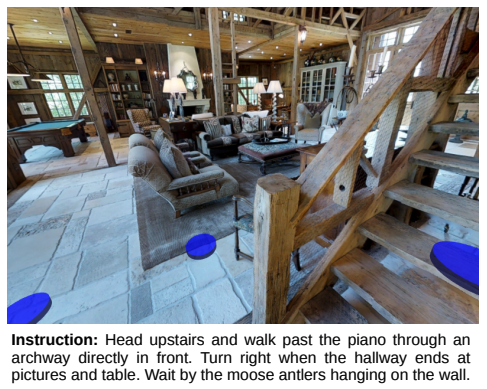

We propose a novel topological representation of an environment based on image segments, which are semantically meaningful and open-vocabulary queryable, conferring several advantages over previous works based on pixel-level features. Unlike 3D scene graphs, we create a purely topological graph but with segments as nodes, where edges are formed by a) associating segment-level descriptors between pairs of consecutive images and b) connecting neighboring segments within an image using their pixel centroids. This unveils a continuous sense of a ‘place’, defined by inter-image persistence of segments along with their intra-image neighbours. It further enables us to represent and update segment-level descriptors through neighborhood aggregation using graph convolution layers, which improves robot localization based on segment-level retrieval.

[website]

-

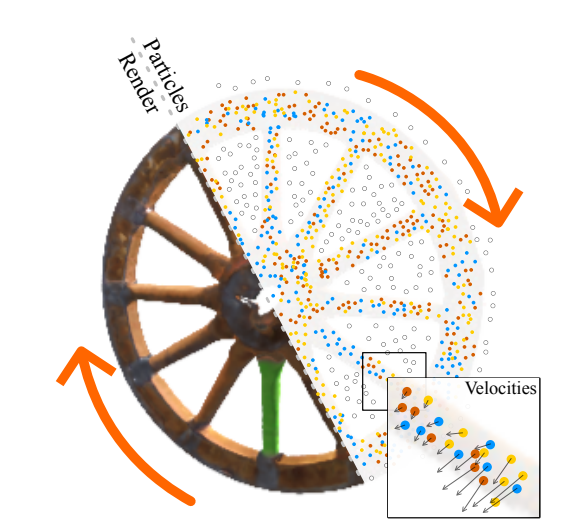

ParticleNeRF: Particle Based Encoding for Online Neural Radiance Fields in Dynamic Scenes In IEEE Winter Conference on Applications of Computer Vision (WACV), 2024. Best Paper Honourable Mention & Oral Presentation

While existing Neural Radiance Fields (NeRFs) for dynamic scenes are offline methods with an emphasis on visual fidelity, our paper addresses the online use case that prioritises real-time adaptability. We present ParticleNeRF, a new approach that dynamically adapts to changes in the scene geometry by learning an up-to-date representation online, every 200 ms. ParticleNeRF achieves this using a novel particle-based parametric encoding. We couple features to particles in space and backpropagate the photometric reconstruction loss into the particles’ position gradients, which are then interpreted as velocity vectors. Governed by a lightweight physics system to handle collisions, this lets the features move freely with the changing scene geometry.

[arXiv]

[website]
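The abstract describes interpreting a particle's position gradient as a velocity vector. A minimal sketch of that idea, assuming the velocity is the negative gradient scaled by a gain and that positions are advanced with a simple explicit Euler step. The names `gradient_to_velocity` and `step_particle`, the gain, and the time step are hypothetical, and the collision handling mentioned in the abstract is omitted.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def gradient_to_velocity(grad: Vec3, gain: float = 1.0) -> Vec3:
    """Treat the negative loss gradient w.r.t. position as a velocity (assumed sign and gain)."""
    return tuple(-gain * g for g in grad)


def step_particle(position: Vec3, grad: Vec3, gain: float = 1.0, dt: float = 0.2) -> Vec3:
    """Advance one particle by one explicit Euler step along its velocity."""
    velocity = gradient_to_velocity(grad, gain)
    return tuple(p + dt * v for p, v in zip(position, velocity))


# Toy loss 0.5 * ||p - target||^2, whose gradient w.r.t. p is (p - target).
target: Vec3 = (1.0, 0.0, 0.0)
position: Vec3 = (0.0, 0.0, 0.0)
grad: Vec3 = tuple(p - t for p, t in zip(position, target))

# The particle moves a fraction of the way towards the target.
new_position = step_particle(position, grad)
```

In this toy setting the particle drifts towards the point that minimises the loss, which is the behaviour the velocity interpretation is meant to capture.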

Workshop Publications

-

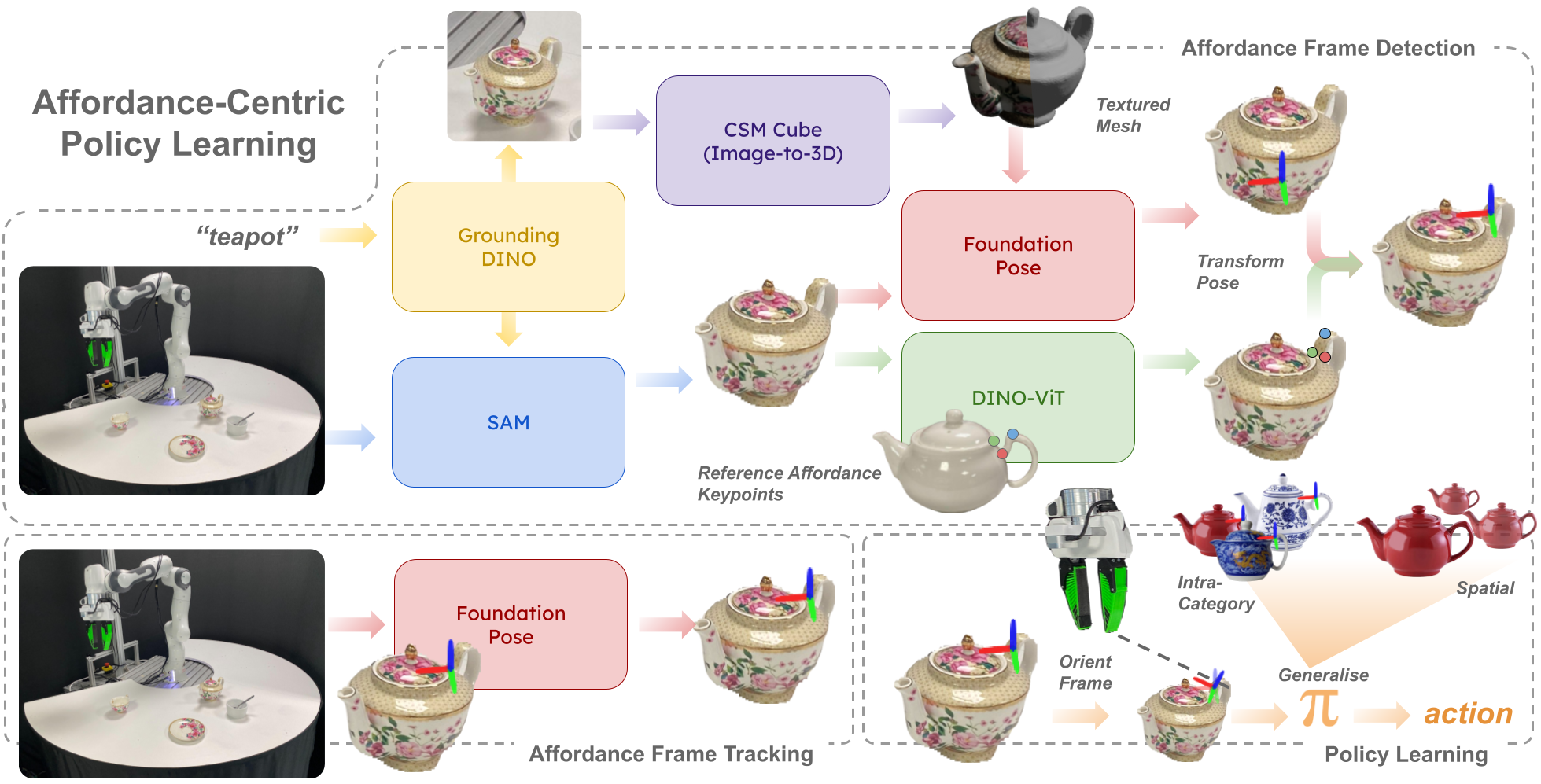

Affordance-Centric Policy Learning: Sample Efficient and Generalisable Robot Policy Learning using Affordance-Centric Task Frames In Workshop on Learning Robotic Assembly of Industrial and Everyday Objects, Conference on Robot Learning (CoRL), 2024.

In this paper, we propose an affordance-centric policy-learning approach that centres and appropriately orients a task frame on object affordance regions. This allows us to achieve both intra-category invariance – where policies can generalise across different instances within the same object category – and spatial invariance – which enables consistent performance regardless of object placement in the environment. We propose a method to leverage existing generalist large vision models to extract and track these affordance frames, and demonstrate that our approach can learn manipulation tasks using behaviour cloning from as few as 10 demonstrations, with equivalent generalisation to an image-based policy trained on 305 demonstrations.

[arXiv]

[website]

-

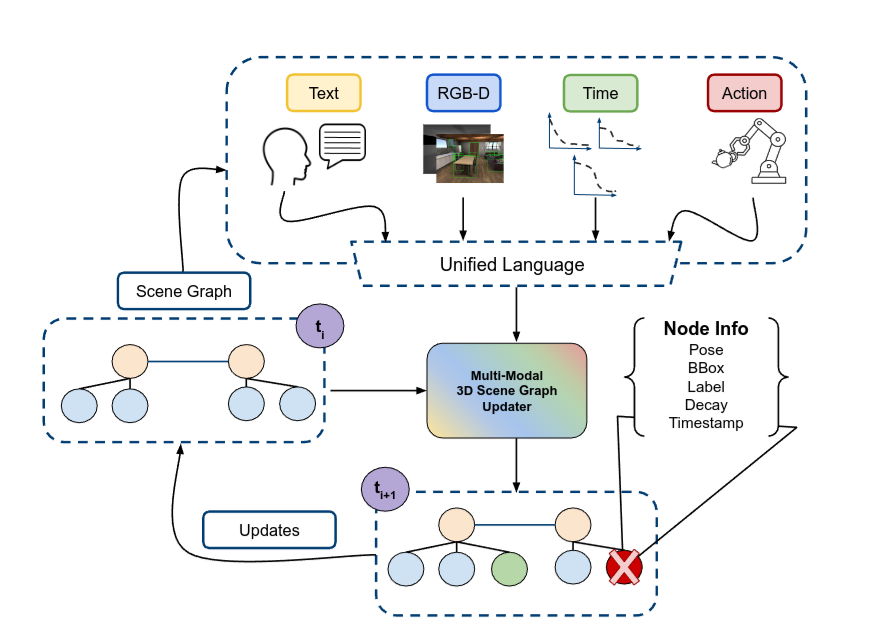

Multi-Modal 3D Scene Graph Updater for Shared and Dynamic Environments In Workshop on Lifelong Learning for Home Robots, Conference on Robot Learning (CoRL), 2024.

The advent of generalist Large Language Models (LLMs) and Large Vision Models (VLMs) has streamlined the construction of semantically enriched maps that can enable robots to ground high-level reasoning and planning in their representations. One of the most widely used semantic map formats is the 3D Scene Graph, which captures both metric (low-level) and semantic (high-level) information. However, these maps often assume a static world, while real environments, like homes and offices, are dynamic. Even small changes in these spaces can significantly impact task performance. To be integrated into dynamic environments, robots must detect changes and update the scene graph in real time. This update process is inherently multimodal, requiring input from various sources, such as human agents, the robot’s own perception system, time, and its actions. This work proposes a framework that leverages these multimodal inputs to maintain the consistency of scene graphs during real-time operation, presenting promising initial results and outlining a roadmap for future research.

[arXiv]

-

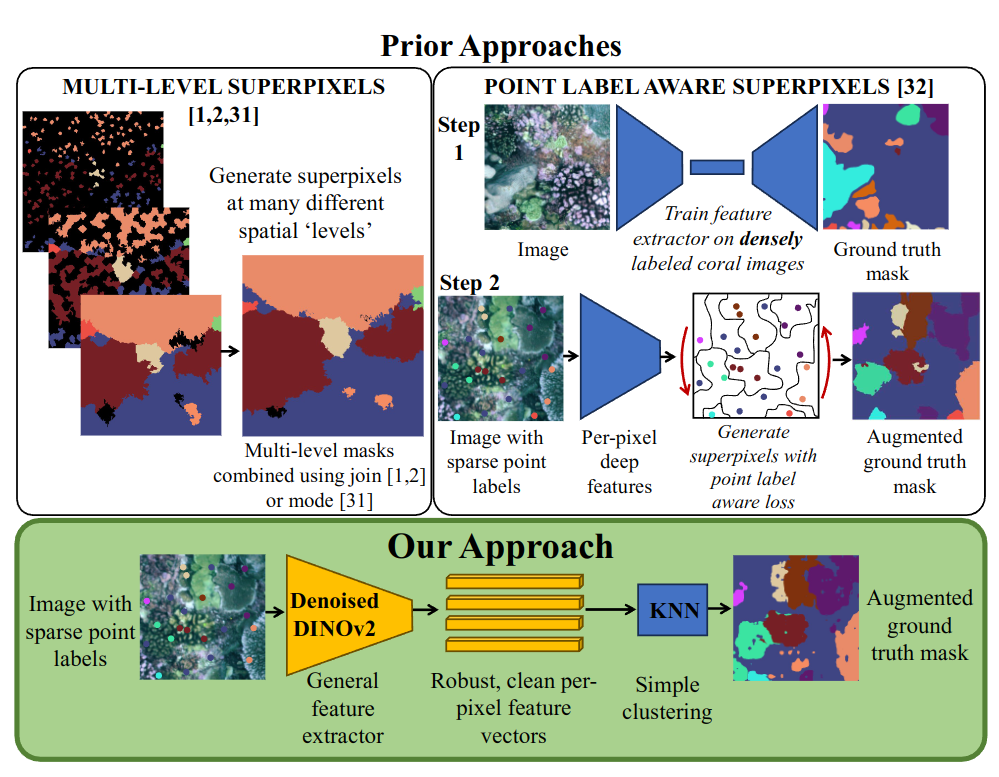

Human-in-the-Loop Segmentation of Multi-species Coral Imagery In CVPR workshop on Learning with Limited Labelled Data for Image and Video Understanding, 2024.

We first demonstrate that recent advances in foundation models enable generation of multi-species coral augmented ground truth masks using denoised DINOv2 features and K-Nearest Neighbors (KNN), without the need for any pre-training or custom-designed algorithms. For extremely sparsely labeled images, we propose a labeling regime based on human-in-the-loop principles, resulting in significant improvement in annotation efficiency.

[arXiv]

arXiv Preprints

-

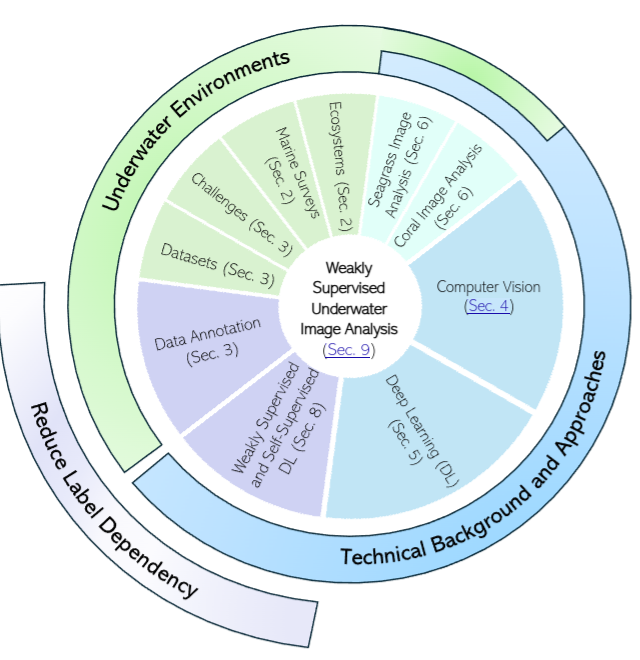

Reducing Label Dependency for Underwater Scene Understanding: A Survey of Datasets, Techniques and Applications arXiv preprint arXiv:2411.11287, 2024.

The complexity of underwater images, coupled with the specialist expertise needed to accurately identify species at the pixel level, makes the labeling process costly, time-consuming, and heavily dependent on domain experts. In recent years, some works have performed automated analysis of underwater imagery, and a smaller number of studies have focused on weakly supervised approaches which aim to reduce the expert-provided labelled data required. This survey focuses on approaches which reduce dependency on human expert input, while reviewing the prior and related approaches to position these works in the wider field of underwater perception. Further, we offer an overview of coastal ecosystems and the challenges of underwater imagery.

[arXiv]

-

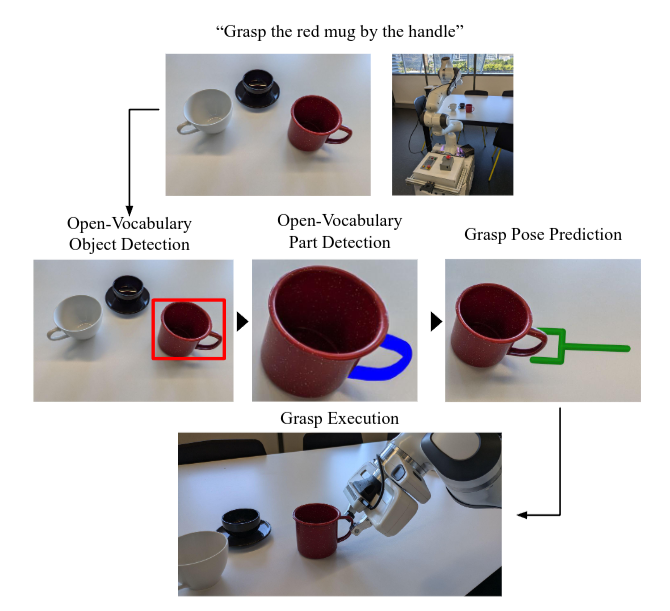

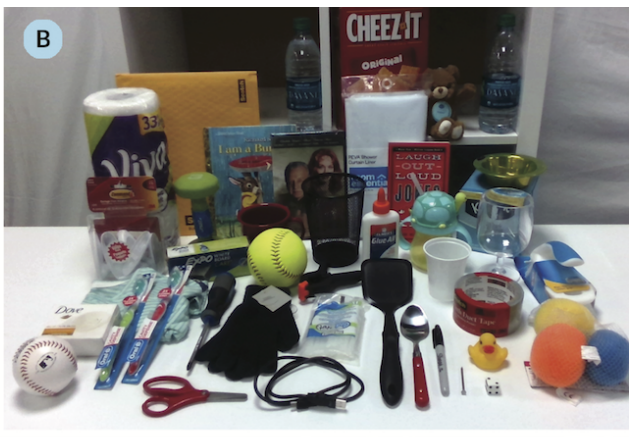

Open-Vocabulary Part-Based Grasping arXiv preprint arXiv:2406.05951, 2024.

Many robotic applications require grasping objects not arbitrarily but at a very specific object part. This is especially important for manipulation tasks beyond simple pick-and-place scenarios or in robot-human interactions, such as object handovers. We propose AnyPart, a practical system that combines open-vocabulary object detection, open-vocabulary part segmentation and 6DOF grasp pose prediction to infer a grasp pose on a specific part of an object in 800 milliseconds. We contribute two new datasets for the task of open-vocabulary part-based grasping: a hand-segmented dataset containing 1014 object-part segmentations, and a dataset of real-world scenarios gathered during our robot trials for individual objects and table-clearing tasks. We evaluate AnyPart on a mobile manipulator robot using a set of 28 common household objects over 360 grasping trials. AnyPart produces successful grasps 69.52% of the time; when ignoring robot-based grasp failures, it predicts a grasp location on the correct part 88.57% of the time.

[arXiv]

2023

Journal Articles

-

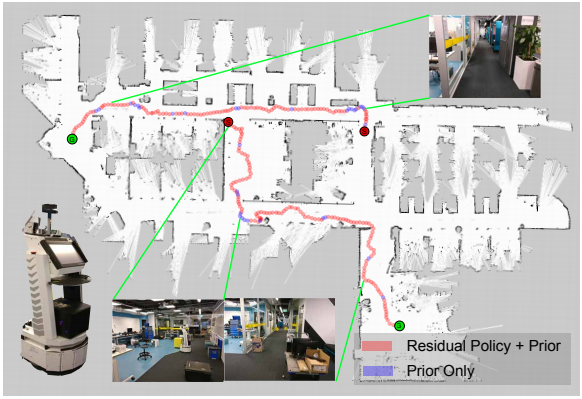

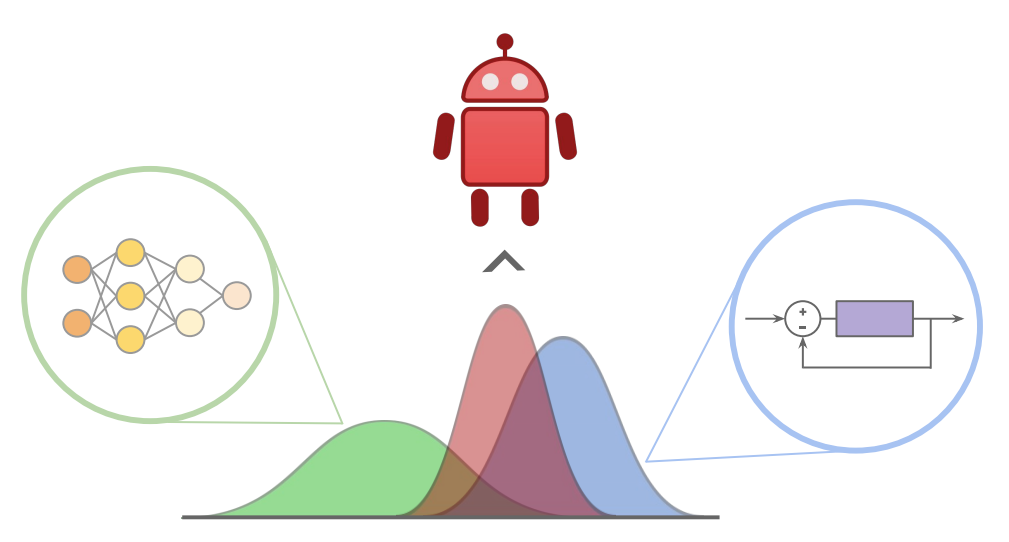

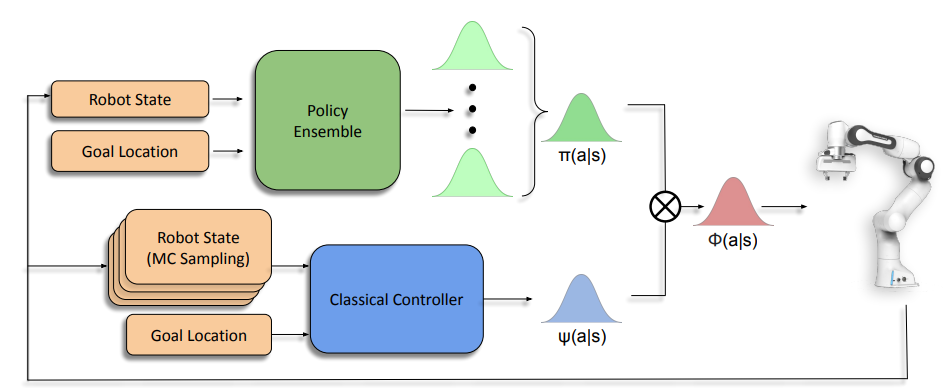

Bayesian Controller Fusion: Leveraging Control Priors in Deep Reinforcement Learning for Robotics The International Journal of Robotics Research (IJRR), 2023.

We present Bayesian Controller Fusion (BCF): a hybrid control strategy that combines the strengths of traditional hand-crafted controllers and model-free deep reinforcement learning (RL). BCF thrives in the robotics domain, where reliable but suboptimal control priors exist for many tasks, but RL from scratch remains unsafe and data-inefficient. By fusing uncertainty-aware distributional outputs from each system, BCF arbitrates control between them, exploiting their respective strengths.

As exploration is naturally guided by the prior in the early stages of training, BCF accelerates learning, while substantially improving beyond the performance of the control prior, as the policy gains more experience.

More importantly, given the risk-averse nature of the control prior, BCF ensures safe exploration and deployment, where the control prior naturally dominates the action distribution in states unknown to the policy.

We additionally show BCF’s applicability to the zero-shot sim-to-real setting and its ability to deal with out-of-distribution states in the real world.

BCF is a promising approach for combining the complementary strengths of deep RL and traditional robotic control, surpassing what either can achieve independently.

[arXiv]

[website]
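
The fusion step described above can be illustrated with a minimal sketch. It assumes that both the learned policy and the control prior output a univariate Gaussian over the action, and that fusion is the normalised product of the two densities (a precision-weighted average). The `Gaussian` and `fuse` names are hypothetical and are not taken from the BCF code.

```python
from dataclasses import dataclass


@dataclass
class Gaussian:
    mean: float
    std: float


def fuse(policy: Gaussian, prior: Gaussian) -> Gaussian:
    """Normalised product of two 1-D Gaussians (assumed fusion rule)."""
    w_policy = 1.0 / policy.std ** 2  # precision of the learned policy
    w_prior = 1.0 / prior.std ** 2    # precision of the control prior
    var = 1.0 / (w_policy + w_prior)
    mean = var * (w_policy * policy.mean + w_prior * prior.mean)
    return Gaussian(mean, var ** 0.5)


# Unfamiliar state: the policy is uncertain, so the prior dominates the action.
unfamiliar = fuse(Gaussian(mean=1.0, std=2.0), Gaussian(mean=0.0, std=0.2))

# Familiar state: the policy is confident, so it dominates instead.
familiar = fuse(Gaussian(mean=1.0, std=0.1), Gaussian(mean=0.0, std=0.2))
```

Under these assumptions, the more certain system automatically receives the larger weight, which is one way to obtain the behaviour where the prior takes over in states unknown to the policy.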

Conference Publications

-

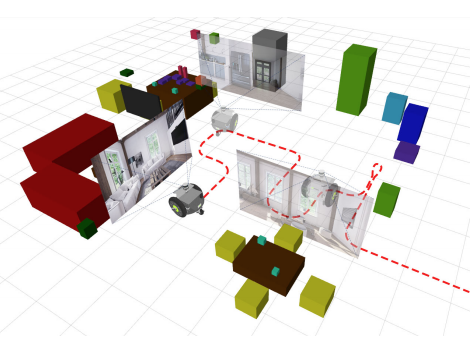

SayPlan: Grounding Large Language Models Using 3D Scene Graphs for Scalable Robot Task Planning In Conference on Robot Learning (CoRL), 2023. Oral Presentation

We introduce SayPlan, a scalable approach to LLM-based, large-scale task planning for robotics using 3D scene graph (3DSG) representations. To ensure the scalability of our approach, we: (1) exploit the hierarchical nature of 3DSGs to allow LLMs to conduct a semantic search for task-relevant subgraphs from a smaller, collapsed representation of the full graph; (2) reduce the planning horizon for the LLM by integrating a classical path planner; and (3) introduce an iterative replanning pipeline that refines the initial plan using feedback from a scene graph simulator, correcting infeasible actions and avoiding planning failures. We evaluate our approach on two large-scale environments spanning up to 3 floors and 36 rooms with 140 assets and objects and show that our approach is capable of grounding large-scale, long-horizon task plans from abstract, natural language instructions for a mobile manipulator robot to execute.

[arXiv]

[website]

-

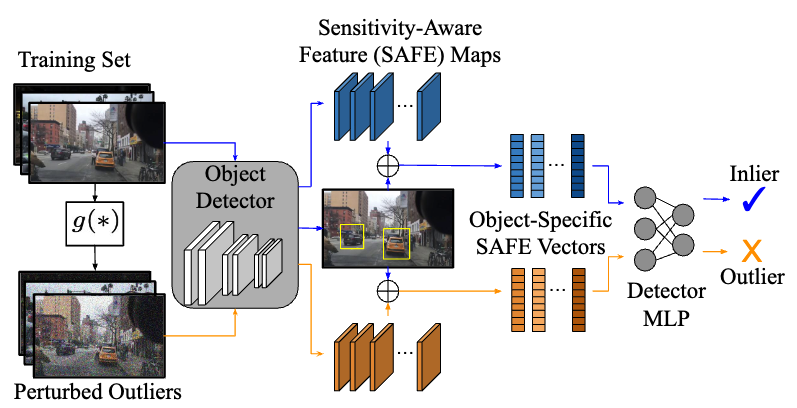

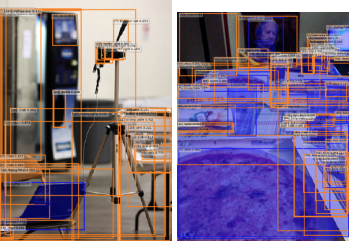

SAFE: Sensitivity-Aware Features for Out-of-Distribution Object Detection In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023.

We address the problem of out-of-distribution (OOD) detection for the task of object detection. We show that residual convolutional layers with batch normalisation produce Sensitivity-Aware FEatures (SAFE) that are consistently powerful for distinguishing in-distribution from out-of-distribution detections. We extract SAFE vectors for every detected object, and train a multilayer perceptron on the surrogate task of distinguishing adversarially perturbed from clean in-distribution examples. This circumvents the need for realistic OOD training data, computationally expensive generative models, or retraining of the base object detector.

[arXiv]

-

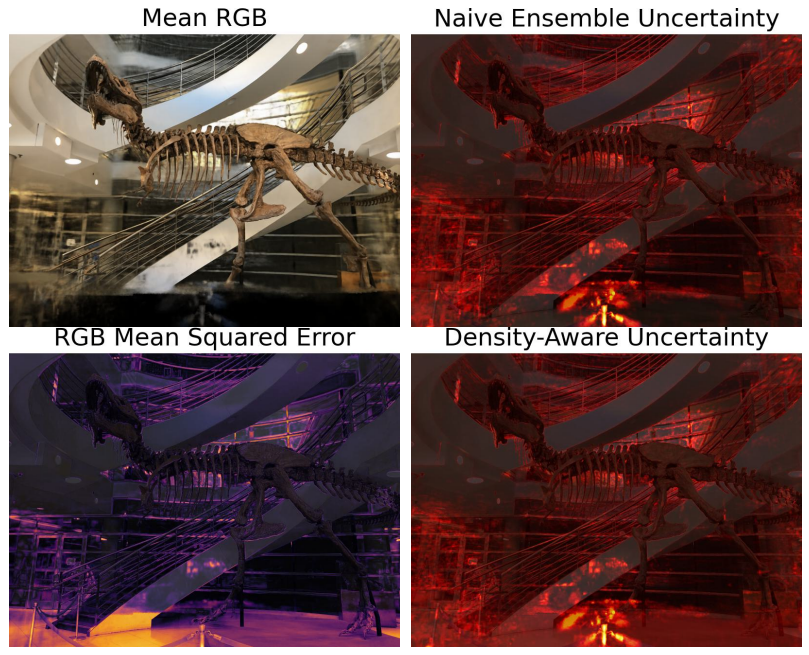

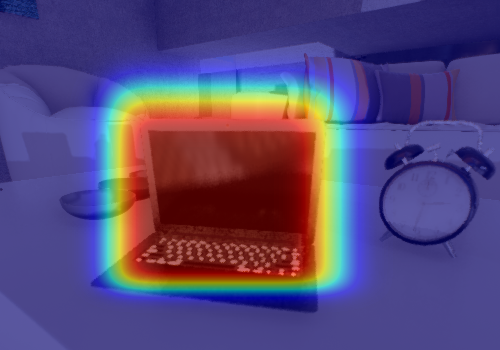

Density-aware NeRF Ensembles: Quantifying Predictive Uncertainty in Neural Radiance Fields In IEEE Conference on Robotics and Automation (ICRA), 2023.

We show that ensembling effectively quantifies model uncertainty in Neural Radiance Fields (NeRFs) if a density-aware epistemic uncertainty term is considered. The naive ensembles investigated in prior work simply average rendered RGB images to quantify the model uncertainty caused by conflicting explanations of the observed scene. In contrast, we additionally consider the termination probabilities along individual rays to identify epistemic model uncertainty due to a lack of knowledge about the parts of a scene unobserved during training. We achieve new state-of-the-art performance across established uncertainty quantification benchmarks for NeRFs, outperforming methods that require complex changes to the NeRF architecture and training regime. We furthermore demonstrate that NeRF uncertainty can be utilised for next-best view selection and model refinement.

[arXiv]
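
As a rough illustration of the idea above, the sketch below scores a single ray. It assumes each ensemble member reports a rendered RGB colour and the sum of its termination probabilities along the ray, and it adds the squared missing termination mass to the mean per-channel colour variance. This particular combination and the `ray_uncertainty` name are illustrative assumptions and may differ from the paper's exact formulation.

```python
from statistics import fmean, pvariance


def ray_uncertainty(colours, opacities):
    """Per-ray uncertainty for an ensemble (hypothetical helper).

    colours:   one (r, g, b) tuple per ensemble member
    opacities: one summed termination probability per member, in [0, 1]
    """
    # Disagreement between members about the rendered colour.
    rgb_var = fmean(pvariance(channel) for channel in zip(*colours))
    # Rays that terminate nowhere point into space unobserved during training.
    unobserved = (1.0 - fmean(opacities)) ** 2
    return rgb_var + unobserved


grey = [(0.5, 0.5, 0.5)] * 3

# Members agree and the ray hits a surface: low uncertainty.
observed = ray_uncertainty(grey, [1.0, 1.0, 1.0])

# Members agree on colour but the ray barely terminates: a colour-only
# ensemble would report zero here, the density-aware term does not.
unseen = ray_uncertainty(grey, [0.0, 0.1, 0.0])
```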

-

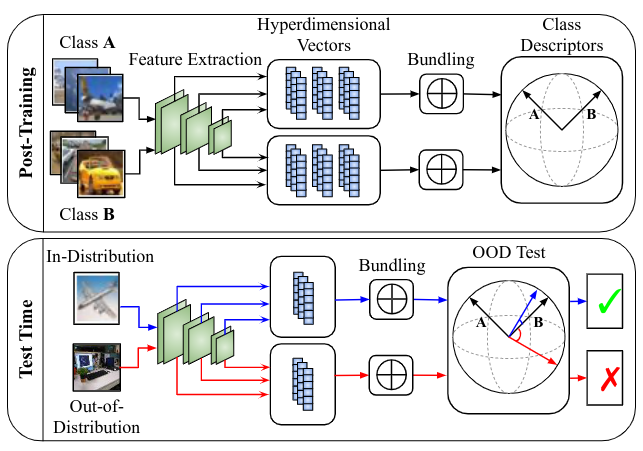

Hyperdimensional Feature Fusion for Out-Of-Distribution Detection In IEEE Winter Conference on Applications of Computer Vision (WACV), 2023.

We introduce powerful ideas from Hyperdimensional Computing into the challenging field of Out-of-Distribution (OOD) detection. In contrast to most existing works that perform OOD detection based on only a single layer of a neural network, we use similarity-preserving semi-orthogonal projection matrices to project the feature maps from multiple layers into a common vector space. By repeatedly applying the bundling operation ⊕, we create expressive class-specific descriptor vectors for all in-distribution classes.

[arXiv]

Workshop Publications

-

Physically Embodied Gaussian Splatting: Embedding Physical Priors Into a Visual 3D World Model for Robotics In Workshop for Neural Representation Learning for Robot Manipulation, Conference on Robot Learning (CoRL), 2023.

Our dual Gaussian-Particle representation captures visual (Gaussians) and physical (particles) aspects of the world and enables forward prediction of robot interactions with the world.

A photometric loss between rendered Gaussians and observed images is computed (Gaussian Splatting) and converted into visual forces. These and other physical phenomena such as gravity, collisions, and mechanical forces are resolved by the always-active physics system and applied to the particles, which in turn influence the position of their associated Gaussians.

[website]

-

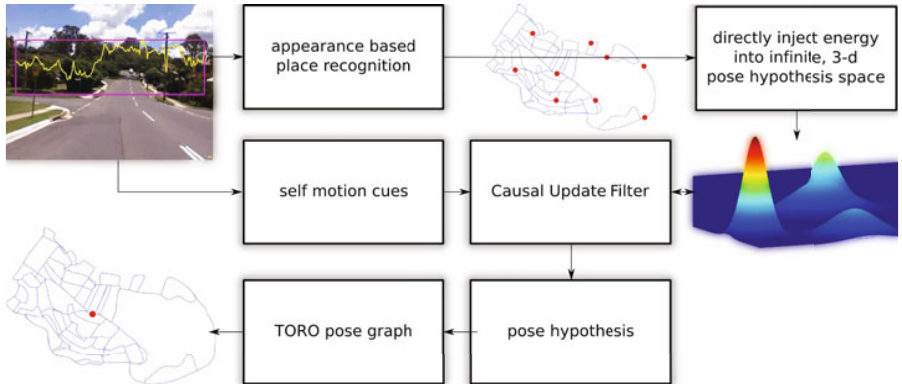

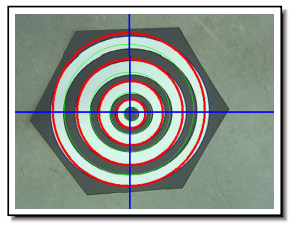

The Need for Inherently Privacy-Preserving Vision in Trustworthy Autonomous Systems In ICRA Workshop on Multidisciplinary Approaches to Co-Creating Trustworthy Autonomous Systems, 2023. Best Poster Award

This paper is a call to action to consider privacy in the context of robotic vision. We propose a specific form of privacy preservation in which no images are captured or could be reconstructed by an attacker even with full remote access. We present a set of principles by which such systems can be designed, and through a case study in localisation demonstrate in simulation a specific implementation that delivers an important robotic capability in an inherently privacy-preserving manner. This is a first step, and we hope to inspire future works that expand the range of applications open to sighted robotic systems.

[arXiv]

-

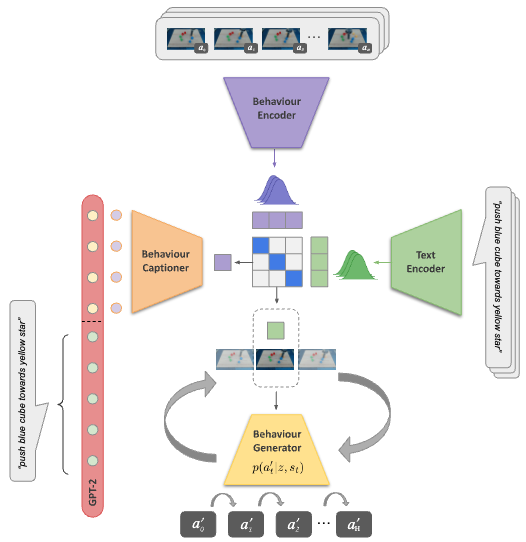

Contrastive Language, Action, and State Pre-training for Robot Learning In ICRA Workshop on Pretraining for Robotics (PT4R), 2023.

We introduce a method for unifying language, action, and state information in a shared embedding space to facilitate a range of downstream tasks in robot learning. Our method, Contrastive Language, Action, and State Pre-training (CLASP), extends the CLIP formulation by incorporating distributional learning, capturing the inherent complexities and one-to-many relationships in behaviour-text alignment. By employing distributional outputs for both text and behaviour encoders, our model effectively associates diverse textual commands with a single behaviour and vice versa. We demonstrate the utility of our method for the following downstream tasks: zero-shot text-behaviour retrieval, captioning unseen robot behaviours, and learning a behaviour prior for language-conditioned reinforcement learning.

[arXiv]

arXiv Preprints

-

LHManip: A Dataset for Long-Horizon Language-Grounded Manipulation Tasks in Cluttered Tabletop Environments arXiv preprint arXiv:2312.12036, 2023.

We present the Long-Horizon Manipulation (LHManip) dataset comprising 200 episodes, demonstrating 20 different manipulation tasks via real robot teleoperation. The tasks entail multiple sub-tasks, including grasping, pushing, stacking and throwing objects in highly cluttered environments. Each task is paired with a natural language instruction and multi-camera viewpoints for point-cloud or NeRF reconstruction. In total, the dataset comprises 176,278 observation-action pairs which form part of the Open X-Embodiment dataset.

[arXiv]

[website]

2022

Journal Articles

-

A Holistic Approach to Reactive Mobile Manipulation IEEE Robotics and Automation Letters, 2022.

We present the design and implementation of a taskable reactive mobile manipulation system. In contrast to related work, we treat the arm and base degrees of freedom as a holistic structure, which greatly improves the speed and fluidity of the resulting motion. At the core of this approach is a robust and reactive motion controller which can achieve a desired end-effector pose, while avoiding joint position and velocity limits, and ensuring the mobile manipulator is manoeuvrable throughout the trajectory. This can support sensor-based behaviours such as closed-loop visual grasping. As no planning is involved in our approach, the robot is never stationary thinking about what to do next. We show the versatility of our holistic motion controller by implementing a pick-and-place system using behaviour trees and demonstrate this task on a 9-degree-of-freedom mobile manipulator.

[arXiv]

[website]

-

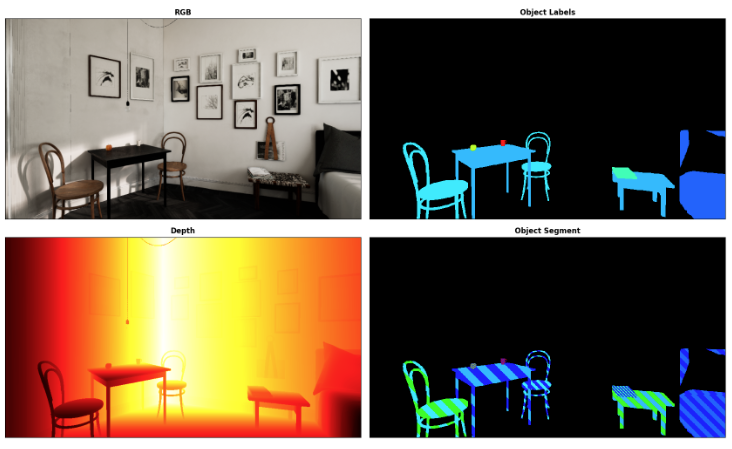

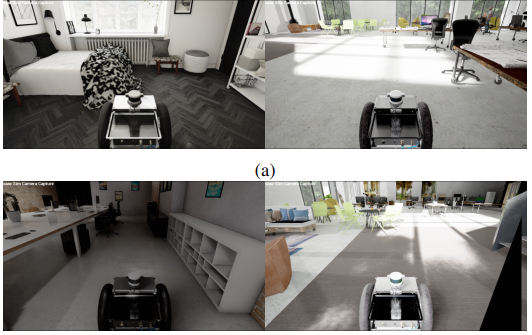

BenchBot environments for active robotics (BEAR): Simulated data for active scene understanding research The International Journal of Robotics Research, 2022.

We present a platform to foster research in active scene understanding, consisting of high-fidelity simulated environments and a simple yet powerful API that controls a mobile robot in simulation and reality. In contrast to static, pre-recorded datasets that focus on the perception aspect of scene understanding, agency is a top priority in our work. We provide three levels of robot agency, allowing users to control a robot at varying levels of difficulty and realism. While the most basic level provides pre-defined trajectories and ground-truth localisation, the more realistic levels allow us to evaluate integrated behaviours comprising perception, navigation, exploration and SLAM.

[website]

-

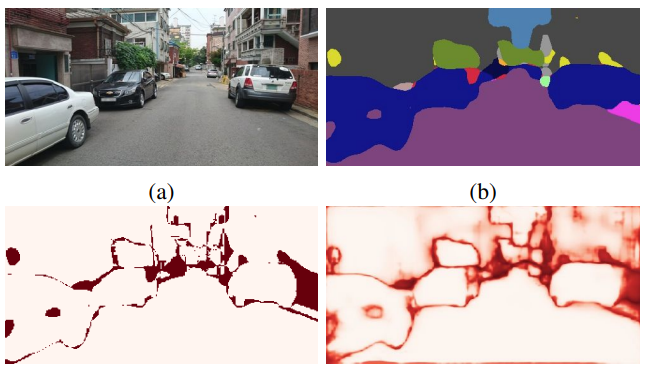

FSNet: A Failure Detection Framework for Semantic Segmentation IEEE Robotics and Automation Letters, 2022.

Semantic segmentation is an important task that helps autonomous vehicles understand their surroundings and navigate safely. However, during deployment, even the most mature segmentation models are vulnerable to various external factors that can degrade the segmentation performance with potentially catastrophic consequences for the vehicle and its surroundings. To address this issue, we propose a failure detection framework to identify pixel-level misclassification. We do so by exploiting internal features of the segmentation model and training it simultaneously with a failure detection network. During deployment, the failure detector flags areas in the image where the segmentation model has failed to segment correctly.

[arXiv]

-

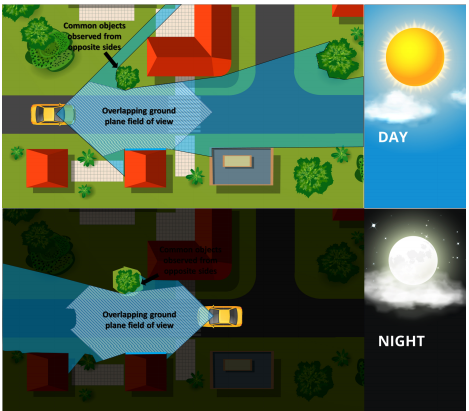

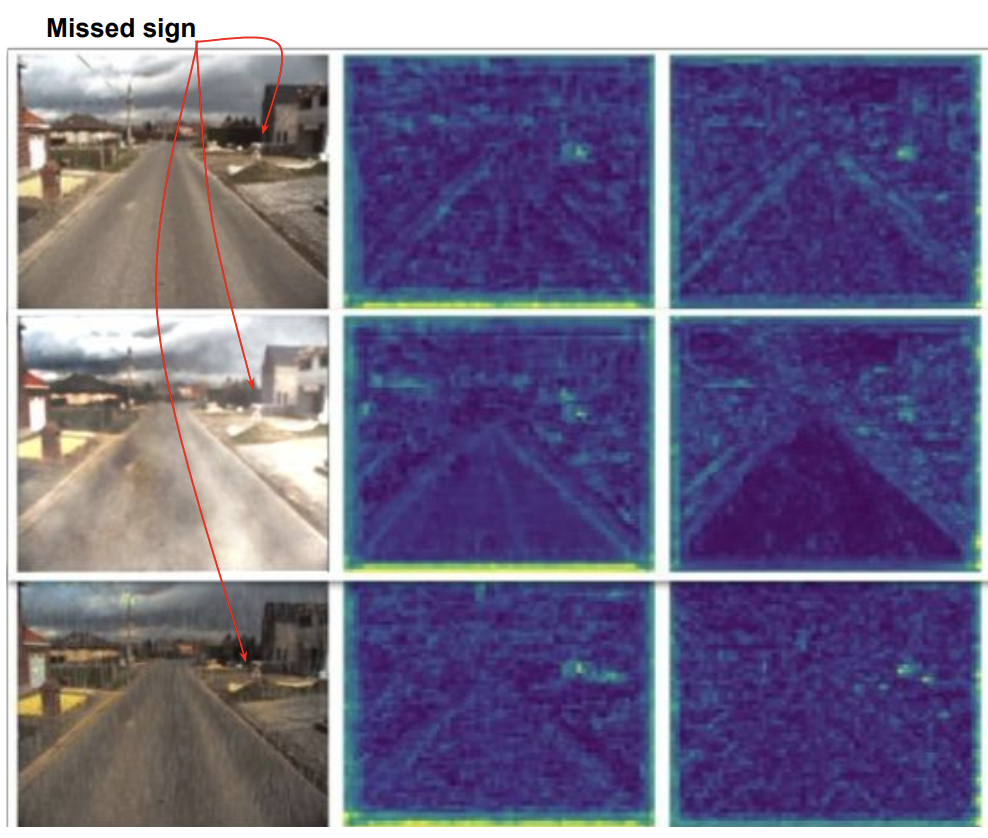

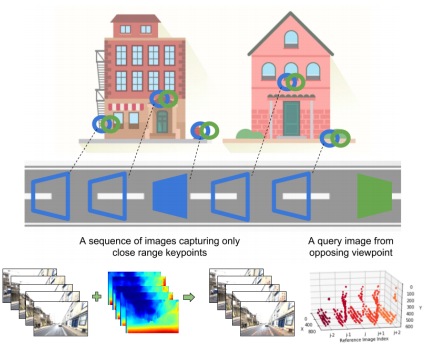

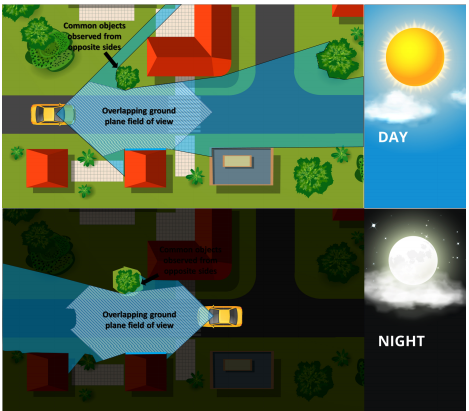

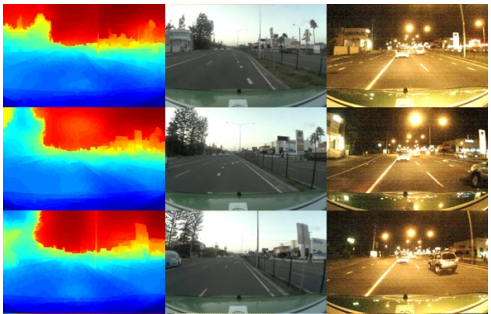

Semantic–geometric visual place recognition: a new perspective for reconciling opposing views The International Journal of Robotics Research (IJRR), 2022.

We propose a hybrid image descriptor that semantically aggregates salient visual information, complemented by appearance-based description, and augment a conventional coarse-to-fine recognition pipeline with keypoint correspondences extracted from within the convolutional feature maps of a pre-trained network. Finally, we introduce descriptor normalization and local score enhancement strategies for improving the robustness of the system. Using both existing benchmark datasets and extensive new datasets that for the first time combine the three challenges of opposing viewpoints, lateral viewpoint shifts, and extreme appearance change, we show that our system can achieve practical place recognition performance where existing state-of-the-art methods fail.

Conference Publications

-

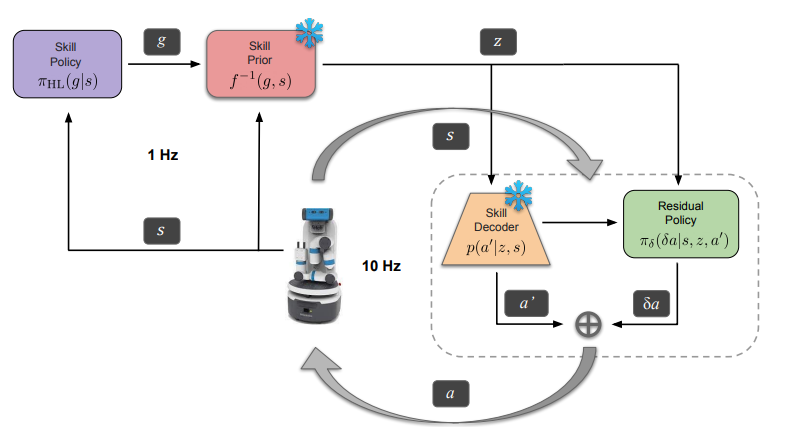

Residual Skill Policies: Learning an Adaptable Skill-based Action Space for Reinforcement Learning for Robotics In Conference on Robot Learning (CoRL), 2022.

Skill-based reinforcement learning (RL) has emerged as a promising strategy to leverage prior knowledge for accelerated robot learning. We first propose accelerating exploration in the skill space using state-conditioned generative models to directly bias the high-level agent towards only sampling skills relevant to a given state based on prior experience. Next, we propose a low-level residual policy for fine-grained skill adaptation enabling downstream RL agents to adapt to unseen task variations. Finally, we validate our approach across four challenging manipulation tasks that differ from those used to build the skill space, demonstrating our ability to learn across task variations while significantly accelerating exploration, outperforming prior works.

[arXiv]

[website]

-

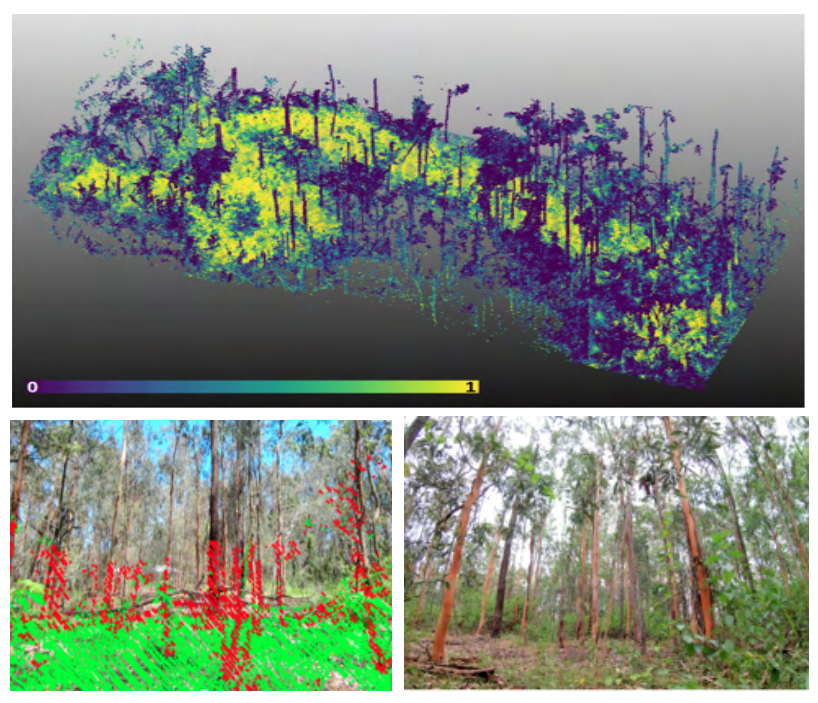

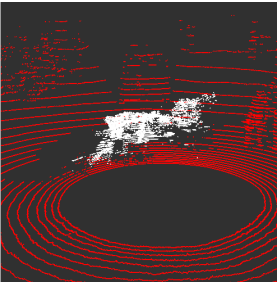

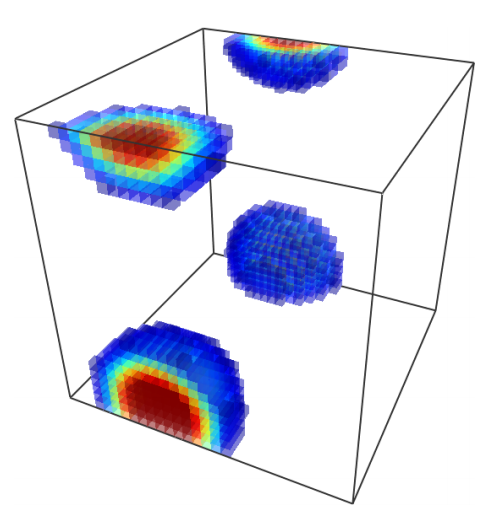

Forest Traversability Mapping (FTM): Traversability estimation using 3D voxel-based Normal Distributed Transform to enable forest navigation In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2022.

Autonomous navigation in dense vegetation remains an open challenge and is an area of major interest for the research community. In this paper we propose a novel traversability estimation method, the Forest Traversability Map, that gives autonomous ground vehicles the ability to navigate in harsh forests or densely vegetated environments. The method estimates traversability in unstructured environments dominated by vegetation, devoid of any dominant human structures, gravel or dirt roads, with higher accuracy than the state of the art.

Workshop Publications

-

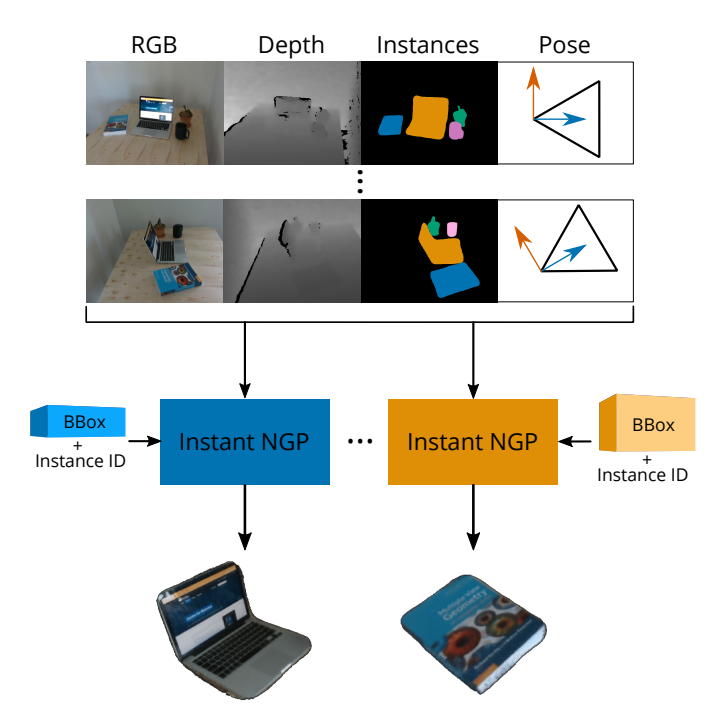

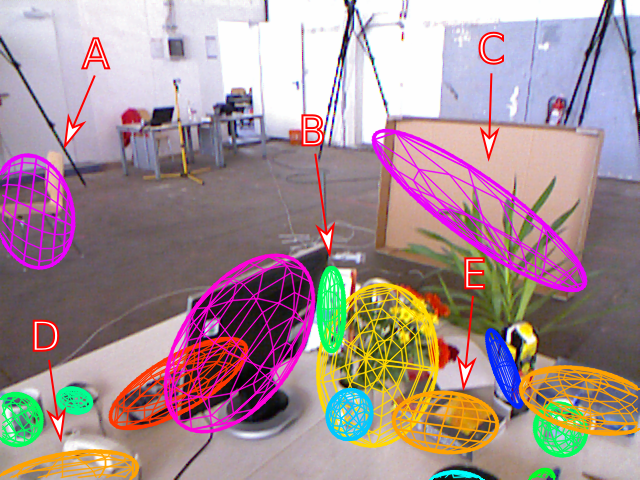

Implicit Object Mapping With Noisy Data In RSS Workshop on Implicit Representations for Robotic Manipulation, 2022.

This paper uses the outputs of an object-based SLAM system to bound objects in the scene with coarse primitives and - in concert with instance masks - identify obstructions in the training images. Objects are therefore automatically bounded, and non-relevant geometry is excluded from the NeRF representation. The method’s performance is benchmarked under ideal conditions and tested against errors in the poses and instance masks. Our results show that object-based NeRFs are robust to pose variations but sensitive to the quality of the instance masks.

[arXiv]

arXiv Preprints

-

Retrospectives on the Embodied AI Workshop arXiv preprint arXiv:2210.06849, 2022.

We present a retrospective on the state of Embodied AI research. Our analysis focuses on 13 challenges presented at the Embodied AI Workshop at CVPR. These challenges are grouped into three themes: (1) visual navigation, (2) rearrangement, and (3) embodied vision-and-language. We discuss the dominant datasets within each theme, evaluation metrics for the challenges, and the performance of state-of-the-art models. We highlight commonalities between top approaches to the challenges and identify potential future directions for Embodied AI research.

[arXiv]

2021

Journal Articles

-

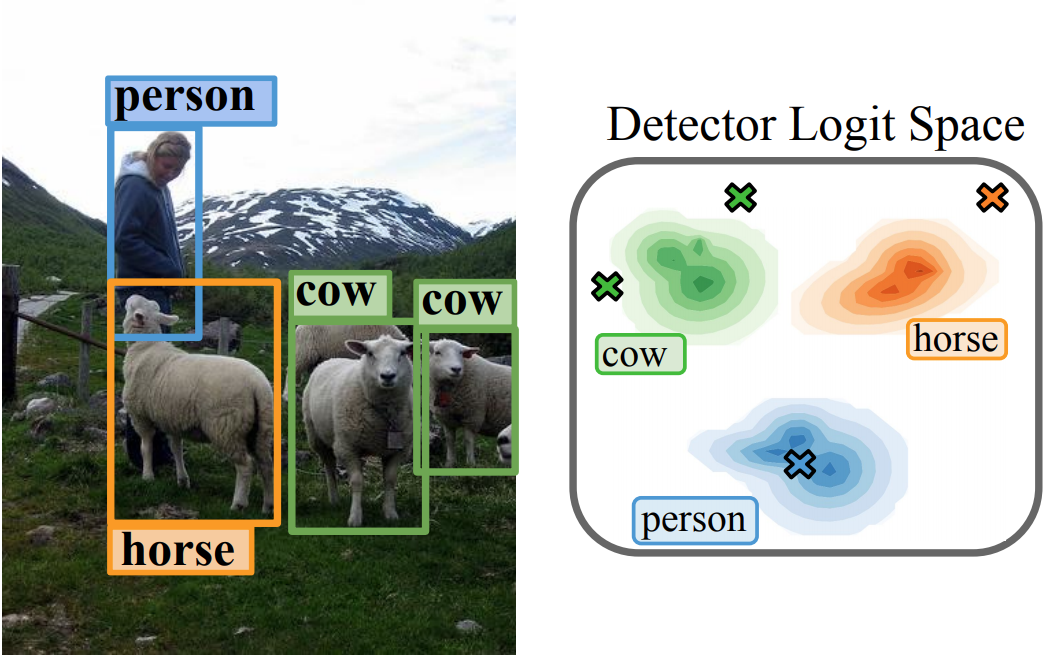

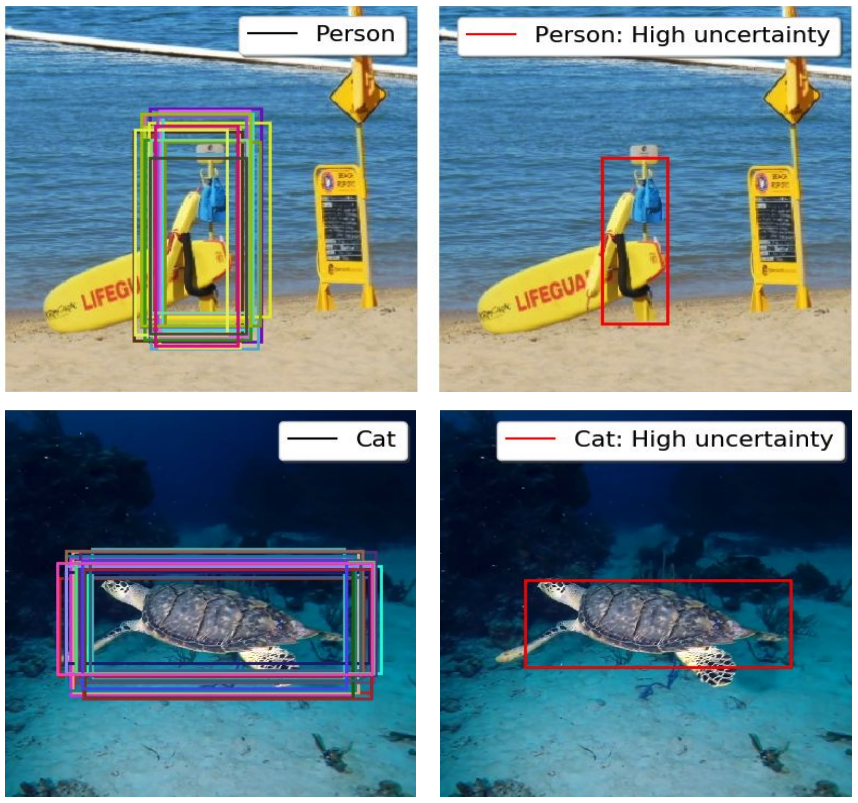

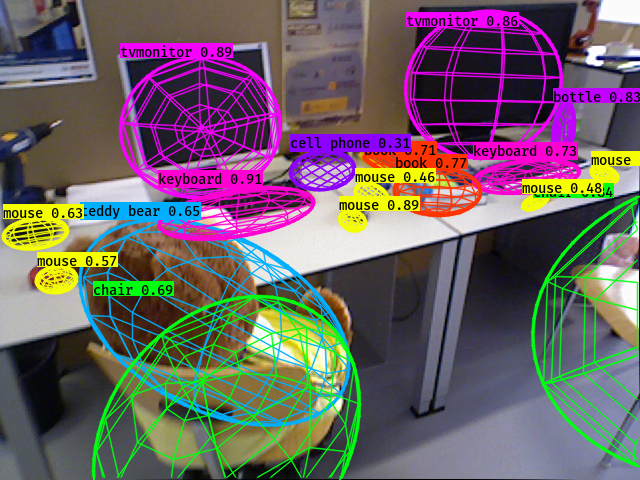

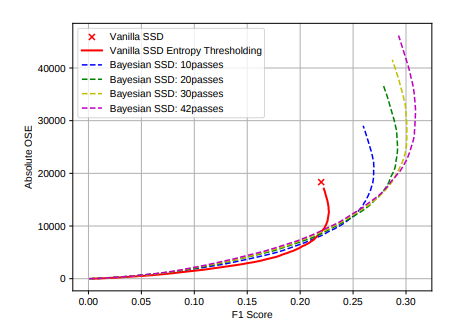

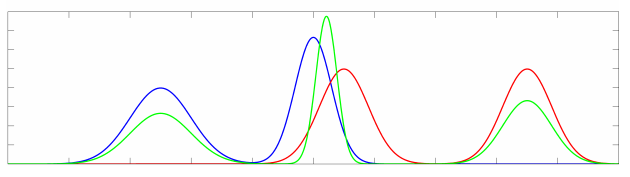

Uncertainty for Identifying Open-Set Errors in Visual Object Detection IEEE Robotics and Automation Letters (RA-L), 2021.

We propose GMM-Det, a real-time method for extracting epistemic uncertainty from object detectors to identify and reject open-set errors. GMM-Det trains the detector to produce a structured logit space that is modelled with class-specific Gaussian Mixture Models. At test time, open-set errors are identified by their low log-probability under all Gaussian Mixture Models. We test two common detector architectures, Faster R-CNN and RetinaNet, across three varied datasets spanning robotics and computer vision. Our results show that GMM-Det consistently outperforms existing uncertainty techniques for identifying and rejecting open-set detections, especially at the low-error-rate operating point required for safety-critical applications. GMM-Det maintains object detection performance, and introduces only minimal computational overhead.

[arXiv]
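
The test-time rule described above can be sketched as follows. The sketch assumes diagonal-covariance mixture components, a two-dimensional logit space, and an arbitrary threshold; the function names, class names, and numbers are made up for illustration and do not come from the paper.

```python
import math


def gaussian_logpdf(x, mean, var):
    """Log-density of a Gaussian with diagonal covariance."""
    return sum(
        -0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def gmm_logpdf(x, components):
    """Log-density of a mixture given as (weight, mean, var) triples."""
    terms = [math.log(w) + gaussian_logpdf(x, m, v) for w, m, v in components]
    peak = max(terms)  # log-sum-exp for numerical stability
    return peak + math.log(sum(math.exp(t - peak) for t in terms))


def is_open_set(logits, class_gmms, threshold):
    """Reject a detection whose logits are unlikely under every class GMM."""
    best = max(gmm_logpdf(logits, gmm) for gmm in class_gmms.values())
    return best < threshold


# Hypothetical per-class mixtures fitted to training logits.
class_gmms = {
    "cup": [(1.0, [5.0, 0.0], [1.0, 1.0])],
    "chair": [(0.5, [0.0, 5.0], [1.0, 1.0]), (0.5, [0.0, 6.0], [1.0, 1.0])],
}

known = is_open_set([5.0, 0.2], class_gmms, threshold=-10.0)
unknown = is_open_set([-6.0, -6.0], class_gmms, threshold=-10.0)
```

In practice the threshold would be chosen on held-out data to hit a target error rate; the value used here is only a placeholder.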

-

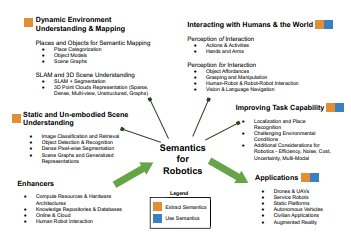

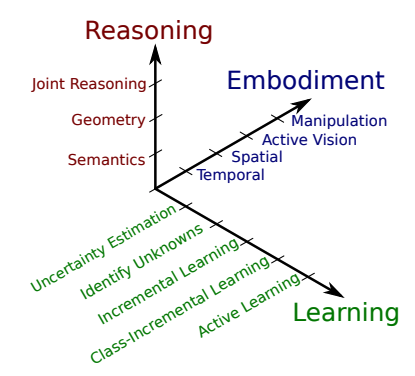

Semantics for robotic mapping, perception and interaction: A survey Foundations and Trends in Robotics, 2021.

This survey provides an overarching snapshot of where semantics in robotics stands today. We establish a taxonomy for semantics research in or relevant to robotics, split into four broad categories of activity, in which semantics are extracted, used, or both. Within these broad categories we survey dozens of major topics including fundamentals from the computer vision field and key robotics research areas utilizing semantics, including mapping, navigation and interaction with the world. The survey also covers key practical considerations, including enablers like increased data availability and improved computational hardware, and major application areas where semantics is or is likely to play a key role. In creating this survey, we hope to provide researchers across academia and industry with a comprehensive reference that helps facilitate future research in this exciting field.

[arXiv]

-

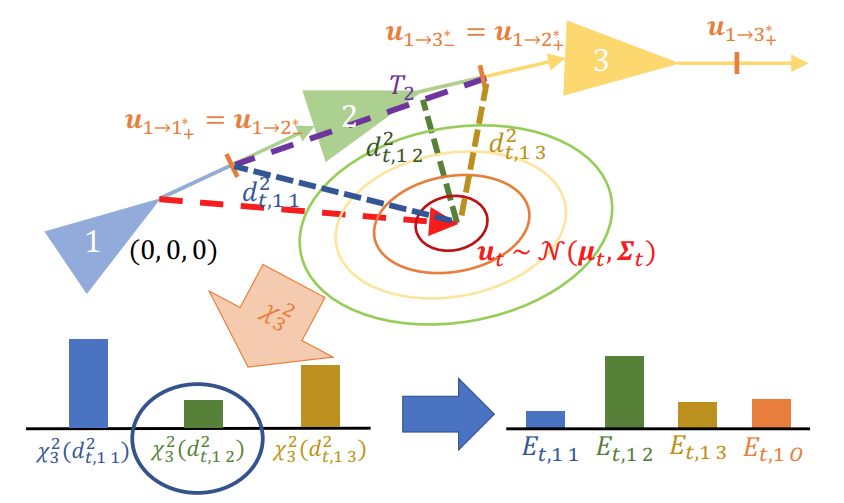

Probabilistic Appearance-Invariant Topometric Localization with New Place Awareness IEEE Robotics and Automation Letters (RA-L), 2021.

We present a new probabilistic topometric localization system which incorporates full 3-DoF odometry into the motion model and, furthermore, adds an “off-map” state within the state-estimation framework, allowing query traverses which feature significant route detours from the reference map to be successfully localized. We perform extensive evaluation on multiple query traverses from the Oxford RobotCar dataset exhibiting both significant appearance change and deviations from routes previously traversed.

[arXiv]

-

Probabilistic Visual Place Recognition for Hierarchical Localization IEEE Robotics and Automation Letters (RA-L), 2021.

We propose two methods which adapt image retrieval techniques used for visual place recognition to the Bayesian state estimation formulation for localization. We demonstrate significant improvements to the localization accuracy of the coarse localization stage using our methods, whilst retaining state-of-the-art performance under severe appearance change. Using extensive experimentation on the Oxford RobotCar dataset, we show that our approach outperforms comparable state-of-the-art methods in terms of precision-recall performance for localizing image sequences. In addition, our proposed methods provide the flexibility to contextually scale localization latency in order to achieve these improvements.

[arXiv]

Conference Publications

-

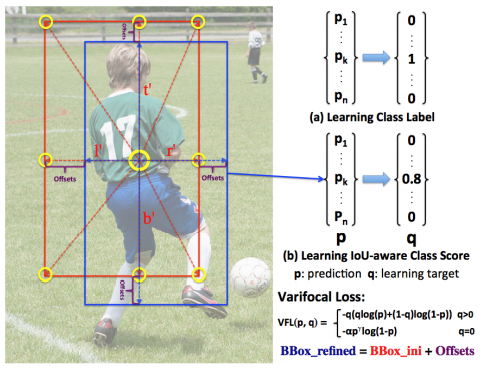

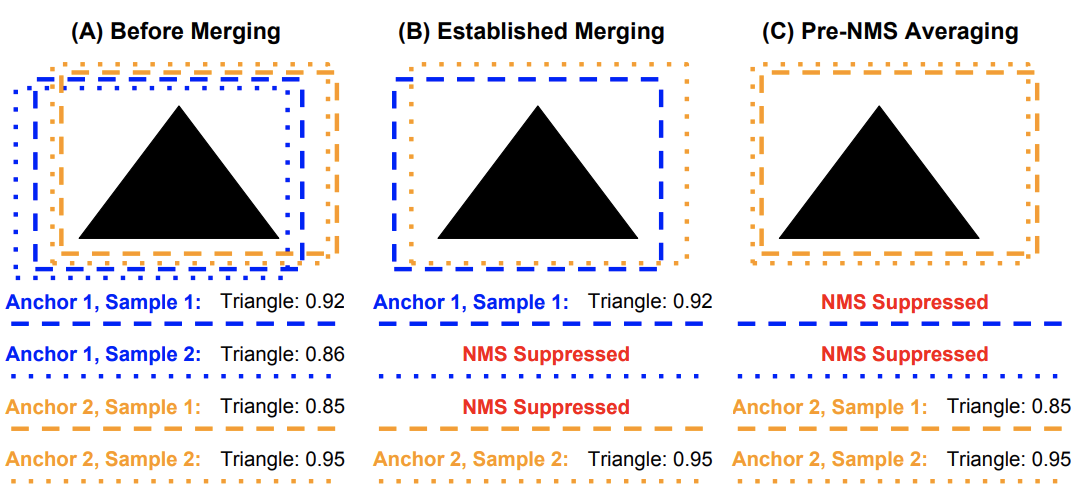

VarifocalNet: An IoU-aware Dense Object Detector In Conference on Computer Vision and Pattern Recognition (CVPR), 2021. Oral Presentation

Accurately ranking the vast number of candidate detections is crucial for dense object detectors to achieve high performance. Prior work uses the classification score or a combination of classification and predicted localization scores to rank candidates. However, neither option results in a reliable ranking, thus degrading detection performance. In this paper, we propose to learn an IoU-aware Classification Score (IACS) as a joint representation of object presence confidence and localization accuracy. We show that dense object detectors can achieve a more accurate ranking of candidate detections based on the IACS. We design a new loss function, named Varifocal Loss, to train a dense object detector to predict the IACS, and propose a new star-shaped bounding box feature representation for IACS prediction and bounding box refinement. Combining these two new components and a bounding box refinement branch, we build an IoU-aware dense object detector based on the FCOS+ATSS architecture, which we call VarifocalNet, or VFNet for short.

[arXiv]

[website]

-

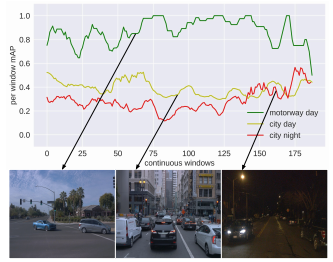

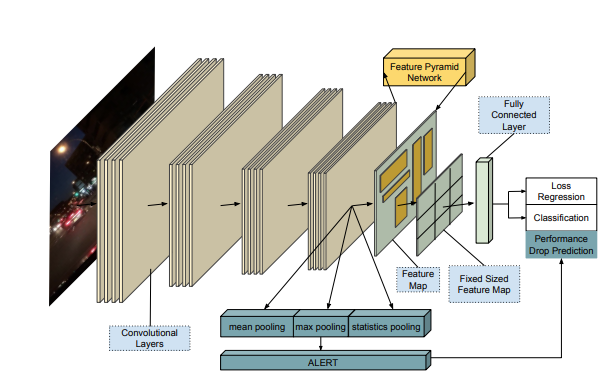

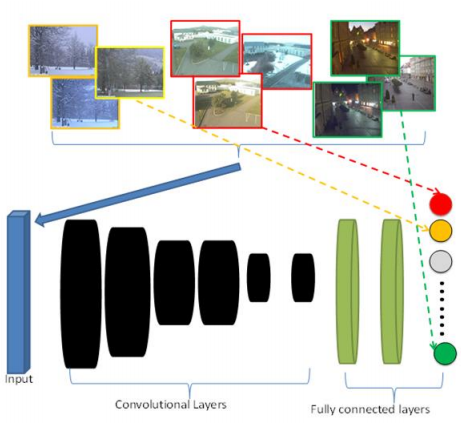

Online Monitoring of Object Detection Performance Post-Deployment In Proc. of IEEE International Conference on Intelligent Robots and Systems (IROS), 2021.

Post-deployment, an object detector is expected to operate at a level of performance similar to that reported on its testing dataset. However, when deployed onboard mobile robots that operate under varying and complex environmental conditions, the detector’s performance can fluctuate and occasionally degrade severely without warning. Undetected, this can lead the robot to take unsafe and risky actions based on low-quality and unreliable object detections. We address this problem and introduce a cascaded neural network that monitors the performance of the object detector by predicting the quality of its mean average precision (mAP) on a sliding window of the input frames.

[arXiv]

-

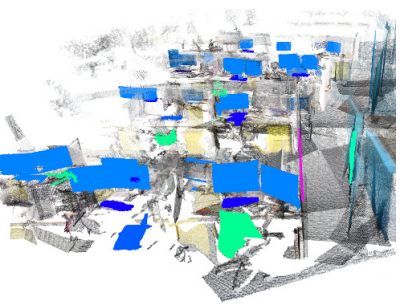

Evaluating the Impact of Semantic Segmentation and Pose Estimation on Dense Semantic SLAM In Proc. of IEEE International Conference on Intelligent Robots and Systems (IROS), 2021.

Recent Semantic SLAM methods combine classical geometry-based estimation with deep learning-based object detection or semantic segmentation. In this paper, we evaluate the quality of semantic maps generated by state-of-the-art class- and instance-aware dense semantic SLAM algorithms whose code is publicly available, and explore the impact that both semantic segmentation and pose estimation have on the quality of semantic maps. We obtain these results by providing algorithms with ground-truth pose and/or semantic segmentation data available from simulated environments. Our experiments establish that semantic segmentation is the largest source of error, dropping mAP and OMQ performance by up to 74.3% and 71.3% respectively.

-

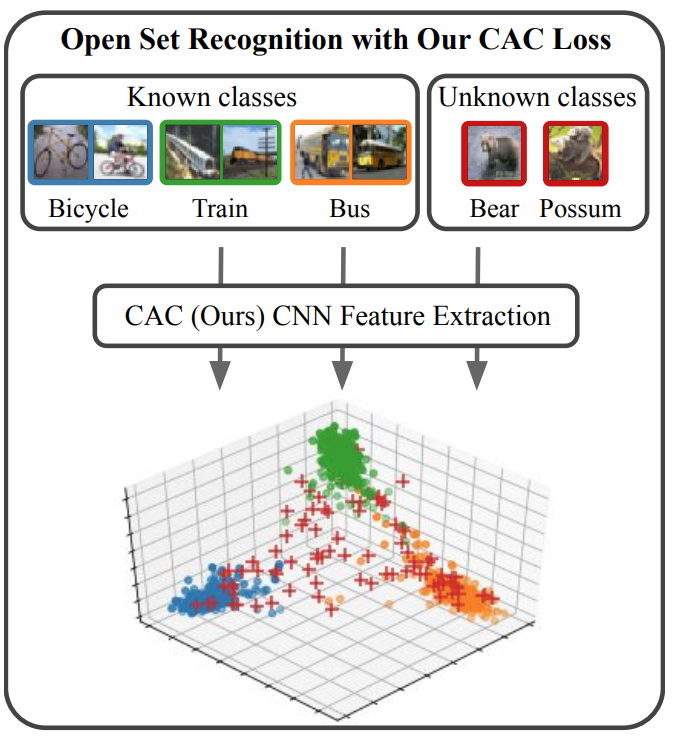

Class Anchor Clustering: A Loss for Distance-based Open Set Recognition In IEEE Winter Conference on Applications of Computer Vision (WACV), 2021.

Existing open set classifiers distinguish between known and unknown inputs by measuring distance in a network’s logit space, assuming that known inputs cluster closer to the training data than unknown inputs. However, this approach is typically applied post-hoc to networks trained with cross-entropy loss, which neither guarantees nor encourages the hoped-for clustering behaviour. To overcome this limitation, we introduce Class Anchor Clustering (CAC) loss. CAC is an entirely distance-based loss that explicitly encourages training data to form tight clusters [...] techniques on the challenging TinyImageNet dataset, achieving a 2.4% performance increase in AUROC.

[arXiv]

Workshop Publications

-

Zero-Shot Uncertainty-Aware Deployment of Simulation Trained Policies on Real-World Robots In NeurIPS Workshop on Deployable Decision Making in Embodied Systems, 2021.

While deep reinforcement learning (RL) agents have demonstrated incredible potential in attaining dexterous behaviours for robotics, they tend to make errors when deployed in the real world due to mismatches between the training and execution environments. In contrast, the classical robotics community has developed a range of controllers that can safely operate across most states in the real world given their explicit derivation. These controllers, however, lack the dexterity required for complex tasks given limitations in analytical modelling and approximations. In this paper, we propose Bayesian Controller Fusion (BCF), a novel uncertainty-aware deployment strategy that combines the strengths of deep RL policies and traditional handcrafted controllers.

[arXiv]

arXiv Preprints

2020

Journal Articles

Special Issues

-

Special Issue on Deep Learning for Robotic Vision International Journal of Computer Vision (IJCV), 2020.

Conference Publications

-

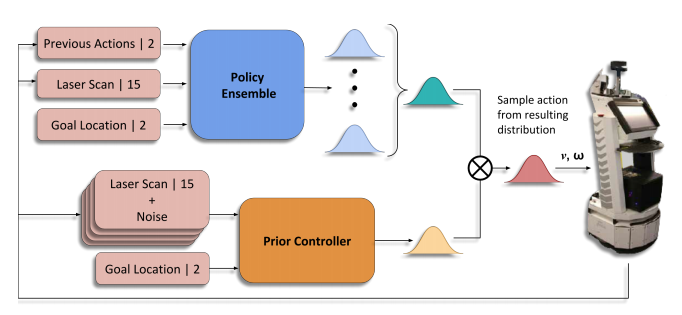

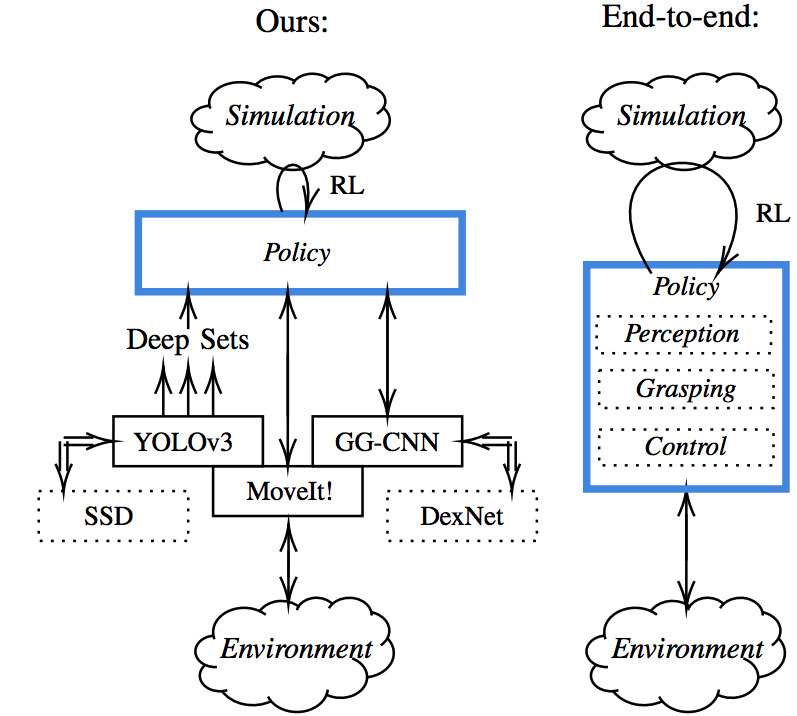

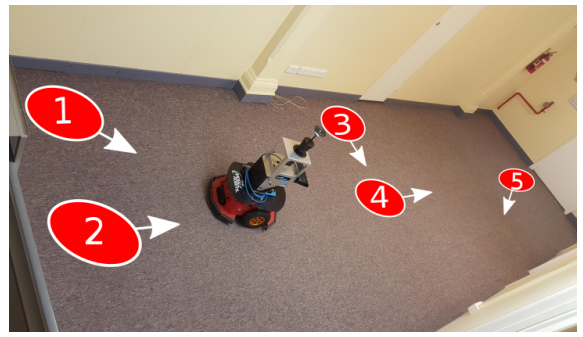

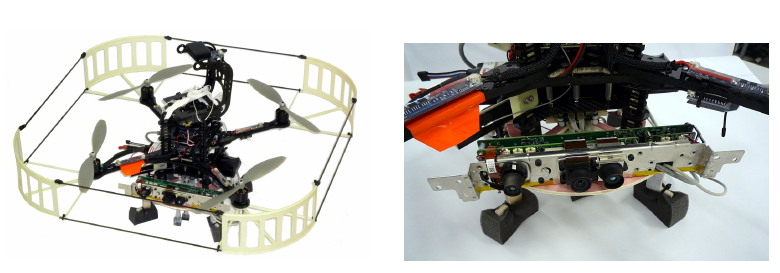

Multiplicative Controller Fusion: Leveraging Algorithmic Priors for Sample-efficient Reinforcement Learning and Safe Sim-To-Real Transfer In Proc. of IEEE International Conference on Intelligent Robots and Systems (IROS), 2020.

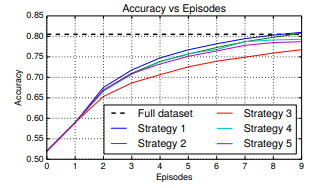

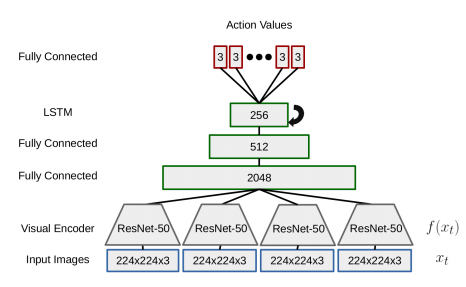

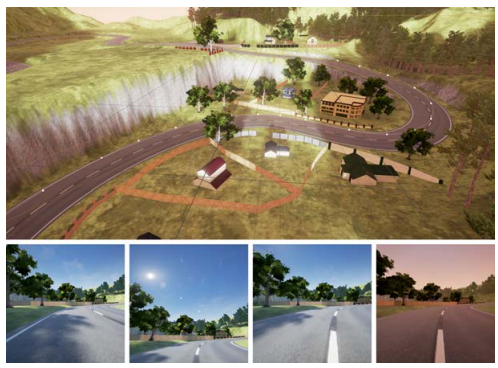

Learning long-horizon tasks on real robot hardware can be intractable, and transferring a learned policy from simulation to reality is still extremely challenging. We present a novel approach to model-free reinforcement learning that can leverage existing sub-optimal solutions as an algorithmic prior during training and deployment. During training, our gated fusion approach enables the prior to guide the initial stages of exploration, increasing sample efficiency and enabling learning from sparse long-horizon reward signals. Importantly, the policy can learn to improve beyond the performance of the sub-optimal prior since the prior’s influence is annealed gradually. During deployment, the policy’s uncertainty provides a reliable strategy for transferring a simulation-trained policy to the real world by falling back to the prior controller in uncertain states. We show the efficacy of our Multiplicative Controller Fusion approach on the task of robot navigation and demonstrate safe transfer from simulation to the real world without any fine-tuning.

[arXiv]

[website]

-

Probabilistic Object Detection: Definition and Evaluation In IEEE Winter Conference on Applications of Computer Vision (WACV), 2020.

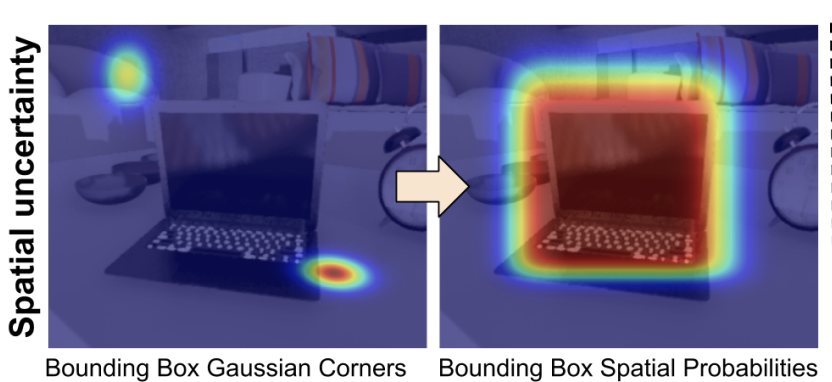

We introduce Probabilistic Object Detection, the task of detecting objects in images and accurately quantifying the spatial and semantic uncertainties of the detections. Given the lack of methods capable of assessing such probabilistic object detections, we present the new Probability-based Detection Quality measure (PDQ). Unlike AP-based measures, PDQ has no arbitrary thresholds and rewards spatial quality, label quality, and foreground/background separation quality, while explicitly penalising false positive and false negative detections.

[arXiv]