Robotic Vision Scene Understanding Challenge

News

- 06 June 2023 Winning teams for RVSU 2023 Challenge announced

- 02 May 2023 RVSU 2023 Challenge submission date extended to May 27th

- 15 March 2023 RVSU 2023 Challenge officially launched alongside Embodied AI Workshop! Evaluation page now up and running on EvalAI

- 18 January 2023 Prizes announced for 2023 challenge. 1 RTX A6000 and up to 5 Jetson Nanos to be awarded to each of the top two teams.

- 16 December 2022 Embodied AI workshop confirmed for CVPR2023 hosting the latest Scene Understanding Challenge. Workshop date - June 19th 2023.

- 19 June 2022 Challenge results presented at CVPR 2022 Embodied AI workshop

- 31 May 2022 Challenge deadline extended to June 7th

- 28 March 2022 Release of challenge registration form

- 14 February 2022 Challenge released as part of CVPR 2022 Embodied AI workshop

- 20 June 2021 CVPR 2021 Workshop

- 17 February 2021 Challenge released as part of CVPR 2021 Embodied AI workshop

Overview

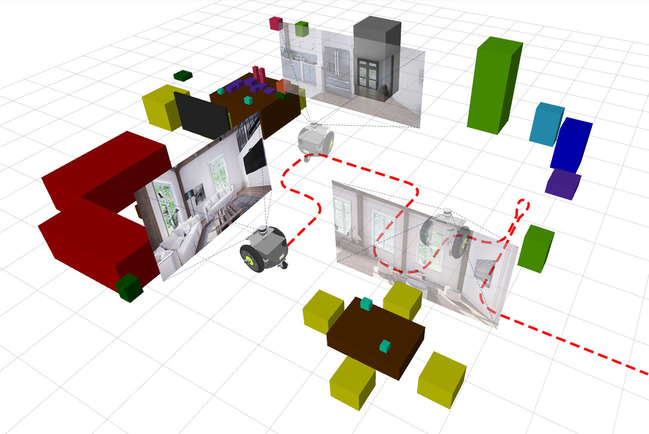

The Robotic Vision Scene Understanding Challenge evaluates how well a robotic vision system can understand the semantic and geometric aspects of its environment. The challenge consists of two distinct tasks: Object-based Semantic SLAM, and Scene Change Detection.

Key features of this challenge include:

- BenchBot, a complete software stack for running robotic systems

- Running algorithms in realistic 3D simulation, and on real robots, with only a few lines of Python code

- The BenchBot API, which allows simple interfacing with robots while supporting both OpenAI Gym-style and simple object-oriented Agent approaches

- Easy-to-use scripts for running simulated environments, executing code on a simulated robot, evaluating semantic scene understanding results, and automating code execution across multiple environments

- Use of the Nvidia Isaac SDK for interfacing with, and simulation of, high fidelity 3D environments

Submit your results to EvalAI - New challenge evaluation servers opening Feb 2023!

Note: While some levels of the challenge aren’t currently running in official competitions (passive mode), we leave the evaluation server open for all who wish to compete and improve in this area of research.

Challenge Tasks

The semantic scene understanding challenge is split into 3 modes, across 2 different semantic scene understanding tasks, for a total of 6 different challenge variations. The different variations open up the challenge to a wider range of participants, from those who want to focus solely on visual scene understanding to those who wish to integrate robot localisation with visual detection algorithms.

The two different semantic scene understanding tasks are:

- Semantic SLAM: Participants use a robot to traverse the environment, building up an object-based semantic map from the robot’s RGBD sensor observations and odometry measurements.

- Scene change detection (SCD): Participants use a robot to traverse an environment scene, building up a semantic understanding of the scene. The robot is then moved to a new start position in the same environment, but with different conditions. Along with a possible change from day to night, the new scene has a number of objects added and / or removed. Participants must produce an object-based semantic map describing the changes between the two scenes.

The object-based semantic maps generated by submissions are evaluated against the corresponding ground-truth object-based semantic map (for task 2 this is the map of changes in the second scene, with respect to the first). Please see the BenchBot Evaluation documentation for details on object-based semantic maps submission formats, and further explanation of the two different tasks.
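As a rough illustration of what a map of changes could look like for the scene change detection task, the sketch below compares two object-based maps and reports which objects were added and which were removed. The map layout (dicts with `label` and `centroid` keys), the `detect_changes` helper, and the `match_radius` threshold are all assumptions made for this example; they are not the BenchBot submission format, which is described in the BenchBot Evaluation documentation.

```python
import math


def detect_changes(first_map, second_map, match_radius=0.5):
    """Return (added, removed) objects between two object-based maps.

    Each map is a list of dicts with hypothetical keys "label" and
    "centroid" (x, y, z in metres). Two objects are treated as the same
    object when their labels agree and their centroids lie within
    match_radius of each other.
    """
    unmatched_first = list(first_map)
    added = []
    for obj in second_map:
        match = next(
            (
                old
                for old in unmatched_first
                if old["label"] == obj["label"]
                and math.dist(old["centroid"], obj["centroid"]) <= match_radius
            ),
            None,
        )
        if match is None:
            added.append(obj)  # present only in the second scene
        else:
            unmatched_first.remove(match)  # present in both scenes
    # Whatever was never matched exists only in the first scene.
    return added, unmatched_first


scene_1 = [
    {"label": "chair", "centroid": (1.0, 2.0, 0.4)},
    {"label": "table", "centroid": (3.0, 1.0, 0.5)},
]
scene_2 = [
    {"label": "chair", "centroid": (1.1, 2.0, 0.4)},
    {"label": "lamp", "centroid": (0.5, 0.5, 1.2)},
]

added, removed = detect_changes(scene_1, scene_2)
print("added:", [o["label"] for o in added])
print("removed:", [o["label"] for o in removed])
```

Greedy nearest-label matching is only one possible design; a real solution would also need to handle detection uncertainty and objects that are re-observed from a different start pose.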

Each task has 3 variations, corresponding to the following modes:

- Passive control, with ground-truth localisation (PGT): The robot follows a fixed trajectory, and participants are given a single method to control the robot: moving to the next pose. The task cannot be continued once the entire trajectory has been traversed. Participants receive ground-truth poses for all robot components after each action.

- Active control, with ground-truth localisation (AGT): The robot can be controlled by either moving forward a requested distance, or rotating on the spot a requested number of degrees. The task cannot be continued if the robot collides with the environment. Participants receive ground-truth poses for all robot components after each action.

- Active control, using dead reckoning (i.e. no localisation) (ADR): The robot can be controlled by either moving forward a requested distance, or rotating on the spot a requested number of degrees. Participants receive poses derived from robot odometry after each action, with localisation error that accumulates over time.
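To make the difference between ground-truth localisation and dead reckoning concrete, the following sketch simulates a robot that is driven with "move forward" and "rotate" commands, and compares its true pose against a pose integrated from noisy odometry. The noise model (a small multiplicative Gaussian error per action), the function names, and the command tuples are assumptions chosen for illustration; they do not describe how the simulator or the real robot generates odometry.

```python
import math
import random


def integrate(pose, action, value):
    """Apply one action to an (x, y, heading_rad) pose and return the new pose."""
    x, y, heading = pose
    if action == "forward":  # value is a distance in metres
        return (x + value * math.cos(heading), y + value * math.sin(heading), heading)
    if action == "rotate":  # value is an angle in degrees
        return (x, y, heading + math.radians(value))
    raise ValueError(f"unknown action: {action}")


def simulate(commands, noise_std=0.02, seed=0):
    """Return the position error of the dead-reckoned pose after each action."""
    rng = random.Random(seed)
    true_pose = (0.0, 0.0, 0.0)
    odom_pose = (0.0, 0.0, 0.0)
    errors = []
    for action, value in commands:
        # The robot really moves by the commanded amount ...
        true_pose = integrate(true_pose, action, value)
        # ... but odometry measures that motion with a small relative error.
        measured = value * (1.0 + rng.gauss(0.0, noise_std))
        odom_pose = integrate(odom_pose, action, measured)
        errors.append(
            math.hypot(true_pose[0] - odom_pose[0], true_pose[1] - odom_pose[1])
        )
    return errors


# Drive around a 1 m square five times.
square = [("forward", 1.0), ("rotate", 90.0)] * 4
errors = simulate(square * 5)
print(f"error after first action: {errors[0]:.4f} m")
print(f"error after last action:  {errors[-1]:.4f} m")
```

Because each measurement error is folded into every later pose, nothing in this scheme ever corrects the estimate, which is why a submission in ADR mode may want its own localisation on top of the provided poses.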

Please see the BenchBot API documentation for full details about what actions and observations are available in each mode, and how to use them with your semantic scene understanding algorithms.
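The control flow in passive mode can be pictured as a simple observe, pick action, step loop. The sketch below uses a self-contained stand-in robot rather than the BenchBot API: the classes `FixedTrajectoryRobot` and `PoseLoggingAgent`, the action name `move_next`, and the `pose` observation key are all hypothetical names invented for this example. Refer to the BenchBot API documentation for the real interfaces.

```python
class FixedTrajectoryRobot:
    """Hypothetical stand-in for a passive-mode robot (not the BenchBot API)."""

    def __init__(self, trajectory):
        self._trajectory = list(trajectory)
        self._index = 0

    def available_actions(self):
        # Passive mode offers a single action until the trajectory is exhausted.
        if self._index < len(self._trajectory) - 1:
            return ["move_next"]
        return []

    def observe(self):
        # With ground-truth localisation the pose is reported exactly.
        return {"pose": self._trajectory[self._index]}

    def step(self, action):
        if action not in self.available_actions():
            raise RuntimeError("the task cannot be continued")
        self._index += 1
        return self.observe()


class PoseLoggingAgent:
    """Toy agent that records every pose it is shown."""

    def __init__(self):
        self.visited = []

    def pick_action(self, observation, actions):
        self.visited.append(observation["pose"])
        # Returning None signals that the agent is finished.
        return actions[0] if actions else None


def run(robot, agent):
    """Drive the robot until the agent has no further action to take."""
    observation = robot.observe()
    while True:
        action = agent.pick_action(observation, robot.available_actions())
        if action is None:
            break
        observation = robot.step(action)
    return agent.visited


poses = [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0)]
visited = run(FixedTrajectoryRobot(poses), PoseLoggingAgent())
print(visited)
```

In a real solution, the body of `pick_action` is where sensor observations would be fed into a semantic mapping algorithm before the next action is chosen.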

How to Participate

Currently, there is no active version of the challenge with prize money attached. However, the challenge evaluation server is available here on the EvalAI website. Please create an account, sign in, & click the “Participate” tab to enter our challenge. Full details on how to participate, the available software framework, and submission requirements are provided on the site.

Participating in the Semantic Scene Understanding Challenge is as simple as the 4 steps below. The BenchBot software stack is designed from the ground up to eliminate as many obstacles as possible, so you can focus on what matters: solving semantic scene understanding problems. A collection of resources, documentation, and examples is also available within the BenchBot ecosystem to support you while participating in the challenge.

To participate in our challenge:

- Download & install the BenchBot software stack. Use the examples to dive straight in & start playing (`benchbot_run --list-examples`)

- Choose a task to start working on a solution for, using `benchbot_run --list-tasks` to list supported tasks

- Start with the development environments “miniroom” and “house” which include ground-truth maps to aid in your algorithm development

- Develop a solution using the `benchbot_run`, `benchbot_submit`, & `benchbot_eval` scripts

- Create some results for your solution in the challenge environments using: `benchbot_batch -r carter -t <your_task> -E <batch_name> -z -n <your_submission_cmd>`

- Use the Submit tab at the top of our EvalAI page to submit your results for evaluation

Past Challenges

CVPR 2022

We provided a scene understanding challenge to the CVPR 2022 Embodied AI Workshop. This challenge saw 23 registered participants, 5 participant teams and a prize pool of 2 NVIDIA A6000 GPUs, $2,500 USD and up to 10 Jetson Nanos. The workshop itself hosted challenges by 9 different research organizations. Full details of this iteration of the challenge can be found on the CVPR 2022 challenge website. Please check out the workshop website for more details on the 9 other robotic vision/embodied AI challenges being run.

CVPR 2021

We provided a scene understanding challenge to the CVPR 2021 Embodied AI Workshop. This challenge saw 1 participant team, which was not able to beat the challenge baselines. The workshop itself hosted challenges by 9 different research organizations. Full details of this iteration of the challenge can be found on the CVPR 2021 challenge website. Please check out the workshop website for more details on the 9 other robotic vision/embodied AI challenges being run.

Questions?

Talk to us on Slack or contact us via email at contact@roboticvisionchallenge.org.

Organisers, Support, and Acknowledgements

Stay in touch and follow us on Twitter for news and announcements: @robVisChallenge.

Commonwealth Scientific and Industrial Research Organisation

(formerly Queensland University of Technology)

Queensland University of Technology

Queensland University of Technology

Queensland University of Technology

Queensland University of Technology

Queensland University of Technology

Queensland University of Technology

The Robotic Vision Challenges organisers have been associated with the Australian Centre for Robotic Vision and QUT Centre for Robotics at Queensland University of Technology (QUT) in Brisbane, Australia. In 2023 David Hall has continued work on the challenges, supported by the Commonwealth Scientific and Industrial Research Organisation (CSIRO).