The Probabilistic Object Detection Challenge

Overview

Our first challenge requires participants to detect objects in video data produced from high-fidelity simulations. The novelty of this challenge is that participants are rewarded for providing accurate estimates of both spatial and semantic uncertainty for every detection using probabilistic bounding boxes.

Our newly developed probability-based detection quality (PDQ) measure rewards accurate spatial and semantic uncertainty estimates. Full details about this new measure are available in our WACV 2020 paper (arXiv preprint).

We invite anyone interested in object detection who appreciates a good challenge to participate in the competition, so that together we can continue to push the state of the art in object detection in directions better suited to robotics applications. We also welcome any feedback about the challenge itself and look forward to hearing from you.

Challenge Participation and Presentation of Results

We maintain two evaluation servers on Codalab:

- An ongoing evaluation server with a public leaderboard that remains open year-round and can be used to benchmark your algorithm, e.g. for paper submissions. It hosts a validation dataset and a test-dev dataset.

- A competition evaluation server that is only available in the lead-up to the competitions we organise at major computer vision and robotics conferences.

Ongoing Evaluation Server

We maintain an ongoing evaluation server with a public leaderboard that can be used year-round to benchmark your approach for probabilistic object detection.

ECCV 2020 Competition Evaluation Server

We organised an ECCV 2020 workshop, Beyond mAP: Reassessing the Evaluation of Object Detectors, which hosted the 3rd iteration of our challenge. The workshop featured 4 workshop papers and 3 challenge participants. Full details and links to papers and presentations can be found on the workshop website.


IROS 2019 Competition Evaluation Server

We organised a workshop at IROS 2019 (8 November) on the topic of The Importance of Uncertainty in Deep Learning for Robotics. For that workshop, we ran a second round of the probabilistic object detection challenge.


CVPR 2019 Competition Evaluation Server

We organised a competition and workshop at CVPR 2019. Four participating teams presented their approaches and results. More details and links to their papers can be found on the workshop website.


How to Cite

When using the dataset and evaluation in your publications, please cite:

@inproceedings{hall2020probability,
  title={Probabilistic Object Detection: Definition and Evaluation},
  author={Hall, David and Dayoub, Feras and Skinner, John and Zhang, Haoyang and Miller, Dimity and Corke, Peter and Carneiro, Gustavo and Angelova, Anelia and S{\"u}nderhauf, Niko},
  booktitle={IEEE Winter Conference on Applications of Computer Vision (WACV)},
  year={2020}
}

What is Probabilistic Object Detection?

For robotics applications, detections must not only provide information about where an object is and what it is, but also a measure of spatial and semantic uncertainty. Failing to do so can lead to catastrophic consequences from over- or under-confident detections.

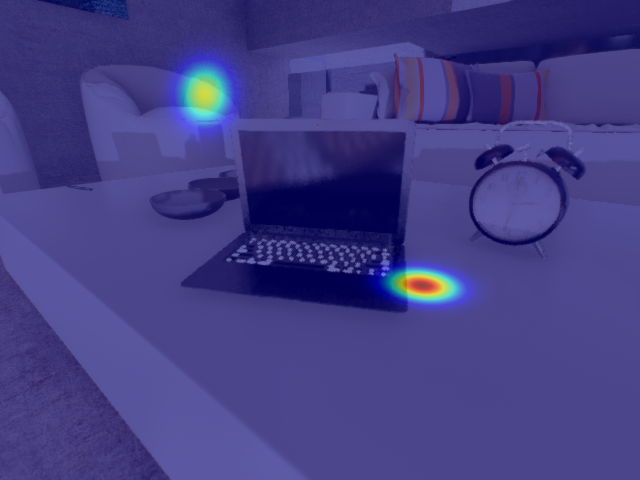

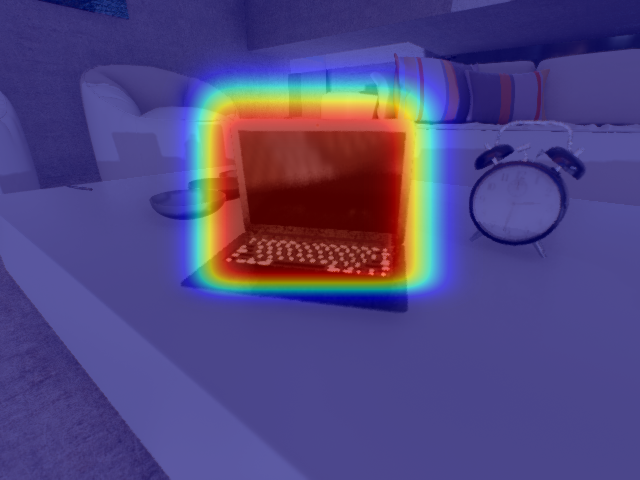

Semantic uncertainty can be provided as a categorical distribution over labels. Spatial uncertainty in the context of object detection can be expressed by augmenting the commonly used bounding box format with a covariance matrix for each of its two corner points. That is, a bounding box is represented as two Gaussian distributions, one over the position of each corner. See below for an illustration.
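To illustrate this representation, the sketch below stores a categorical label distribution together with a mean and a 2x2 covariance for each of the two corners (assumed here to be top-left and bottom-right), and estimates the probability that each pixel lies inside the box by sampling corners from the two Gaussians. The `ProbBox` class, its field names and the Monte Carlo estimate are assumptions for illustration; they are neither the challenge's submission format nor necessarily how the PDQ code converts boxes into per-pixel probabilities.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class ProbBox:
    """Hypothetical probabilistic bounding box: label distribution plus two Gaussian corners."""
    label_probs: np.ndarray   # categorical distribution over class labels
    top_left: np.ndarray      # mean (x, y) of the top-left corner (assumed convention)
    bottom_right: np.ndarray  # mean (x, y) of the bottom-right corner
    cov_tl: np.ndarray        # 2x2 covariance of the top-left corner
    cov_br: np.ndarray        # 2x2 covariance of the bottom-right corner

    def validate(self) -> None:
        """Check that the labels form a distribution and the covariances are valid."""
        assert np.all(self.label_probs >= 0)
        assert np.isclose(self.label_probs.sum(), 1.0)
        for cov in (self.cov_tl, self.cov_br):
            assert cov.shape == (2, 2) and np.allclose(cov, cov.T)  # symmetric
            assert np.all(np.linalg.eigvalsh(cov) >= 0)  # positive semi-definite

    def pixel_probability(self, height: int, width: int,
                          n_samples: int = 1000, seed: int = 0) -> np.ndarray:
        """Monte Carlo estimate of P(pixel (x, y) lies inside the box)."""
        rng = np.random.default_rng(seed)
        tl = rng.multivariate_normal(self.top_left, self.cov_tl, size=n_samples)
        br = rng.multivariate_normal(self.bottom_right, self.cov_br, size=n_samples)
        ys, xs = np.mgrid[0:height, 0:width]  # pixel row (y) and column (x) indices
        counts = np.zeros((height, width))
        for (x1, y1), (x2, y2) in zip(tl, br):
            # Count the pixels covered by this sampled box.
            counts += (xs >= x1) & (xs <= x2) & (ys >= y1) & (ys <= y2)
        return counts / n_samples
```

With small corner covariances the estimate stays close to 1 well inside the box and falls off smoothly near its edges; larger covariances spread the probability further out.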

For more information on probabilistic object detection and our PDQ evaluation measure, check out the summary lecture that accompanies our WACV 2020 paper.

Datasets

For this challenge, we use realistic simulated data from a domestic robot scenario. The dataset contains scenes with cluttered surfaces under both day and night lighting conditions. We simulate domestic service robots of multiple sizes, resulting in sequences with three different camera heights above the ground plane.

We maintain three dataset splits:

- The test-challenge dataset is used on the competition evaluation server and only available during open competition phases. It contains over 56,000 images from 18 simulated indoor video sequences, approximately 24GB.

- The test-dev dataset is available on the ongoing evaluation server and can be used to benchmark approaches year-round, e.g. for use in publications. It contains 123,000 images from 18 indoor scenes (different from those in the test-challenge dataset), with day and night lighting variations and different camera heights above the ground, simulating different household robots.

- The validation dataset is available on the ongoing evaluation server, and contains over 21,000 images in 4 simulated indoor video sequences, approximately 8.8GB. Ground truth information is available for this dataset. It uses the same classes as the test datasets, but different object models.

All datasets use the same subset of the Microsoft COCO classes:

['bottle', 'cup', 'knife', 'bowl', 'wine glass', 'fork', 'spoon', 'banana', 'apple', 'orange', 'cake', 'potted plant', 'mouse', 'keyboard', 'laptop', 'cell phone', 'book', 'clock', 'chair', 'dining table', 'couch', 'bed', 'toilet', 'television', 'microwave', 'toaster', 'refrigerator', 'oven', 'sink', 'person']
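A detector trained on the full COCO label set will usually produce scores for classes outside this subset. One way to obtain a categorical distribution over the challenge classes, assumed here purely for illustration, is to drop the other classes and renormalise. The helper `restrict_to_challenge` and its dictionary input are hypothetical and not part of any official tooling; whether renormalising suits a given detector is a design decision.

```python
import numpy as np

# The 30 challenge classes, in the order listed above.
CLASSES = ['bottle', 'cup', 'knife', 'bowl', 'wine glass', 'fork', 'spoon',
           'banana', 'apple', 'orange', 'cake', 'potted plant', 'mouse',
           'keyboard', 'laptop', 'cell phone', 'book', 'clock', 'chair',
           'dining table', 'couch', 'bed', 'toilet', 'television', 'microwave',
           'toaster', 'refrigerator', 'oven', 'sink', 'person']
CLASS_INDEX = {name: i for i, name in enumerate(CLASSES)}


def restrict_to_challenge(scores: dict) -> np.ndarray:
    """Hypothetical helper: keep only challenge classes from {name: score}
    and renormalise so the result is a distribution over CLASSES."""
    probs = np.zeros(len(CLASSES))
    for name, score in scores.items():
        if name in CLASS_INDEX:  # e.g. 'dog' is a COCO class but not in this subset
            probs[CLASS_INDEX[name]] = score
    total = probs.sum()
    # If no challenge class received any score, return the all-zero vector.
    return probs / total if total > 0 else probs
```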

New Evaluation Measure - PDQ

We developed a new probability-based detection quality (PDQ) evaluation measure for this challenge; please see the arXiv paper for more details.

PDQ is a new visual object detector evaluation measure that not only assesses detection quality but also accounts for the spatial and label uncertainties produced by object detection systems. Current evaluation measures such as mean average precision (mAP) take neither aspect into account: they accept detections with no spatial uncertainty, and they rank detections using only the score of the winning label instead of a full class probability distribution.
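The difference in label handling can be made concrete with a toy example. The sketch compares what an mAP-style evaluation keeps from a label distribution (the winning class and its score) with a PDQ-style label quality, taken here as the probability the detection assigns to the true class. The function names and the three-class distributions are illustrative assumptions.

```python
import numpy as np


def map_style_label(label_probs: np.ndarray) -> tuple[int, float]:
    """Keep only the winning class and its score, as mAP-style ranking does."""
    idx = int(np.argmax(label_probs))
    return idx, float(label_probs[idx])


def label_quality(label_probs: np.ndarray, gt_class: int) -> float:
    """PDQ-style label quality: probability assigned to the ground-truth class."""
    return float(label_probs[gt_class])


# Two detections of an object whose true class is 1.
a = np.array([0.6, 0.4, 0.0])  # hedges towards the true class
b = np.array([0.6, 0.0, 0.4])  # puts no mass on the true class
print(map_style_label(a), map_style_label(b))    # identical: (0, 0.6) twice
print(label_quality(a, 1), label_quality(b, 1))  # 0.4 vs 0.0
```

Both detections look the same once reduced to their winning label, while the full distribution shows that only the first one assigns any probability to the correct class.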

To overcome these limitations, we propose the probability-based detection quality (PDQ) measure, which evaluates both spatial and label probabilities, requires no predefined thresholds, and optimally assigns ground-truth objects to detections.
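The optimal assignment step can be illustrated with a minimal sketch. Assuming a matrix `q[g, d]` of pairwise qualities between ground-truth object `g` and detection `d` in one frame, the code picks the one-to-one matching with the highest total quality, counts matched pairs with non-zero quality as true positives, and treats unmatched ground truths and detections as false negatives and false positives. The dataset-level score then sums matched qualities over all frames and divides by the total number of TPs, FNs and FPs. This is an approximation for illustration, not the official evaluation code; the function names are hypothetical, and the brute-force search only works for a handful of objects, so a real evaluator would use the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`).

```python
import itertools

import numpy as np


def best_assignment(q: np.ndarray) -> list[tuple[int, int]]:
    """Brute-force maximum-quality one-to-one matching (tiny matrices only)."""
    n_gt, n_det = q.shape
    if n_gt > n_det:
        # Solve the transposed problem and swap the indices back.
        return [(g, d) for d, g in best_assignment(q.T)]
    best, best_pairs = -1.0, []
    for dets in itertools.permutations(range(n_det), n_gt):
        total = sum(q[g, d] for g, d in enumerate(dets))
        if total > best:
            best, best_pairs = total, list(enumerate(dets))
    return best_pairs


def frame_stats(q: np.ndarray) -> tuple[float, int]:
    """Matched quality and TP + FN + FP count for one frame."""
    n_gt, n_det = q.shape
    pairs = [(g, d) for g, d in best_assignment(q) if q[g, d] > 0]
    n_tp = len(pairs)
    n_fn, n_fp = n_gt - n_tp, n_det - n_tp
    return sum(q[g, d] for g, d in pairs), n_tp + n_fn + n_fp


def pdq(frames: list[np.ndarray]) -> float:
    """Sum matched qualities over all frames, divided by all TPs, FNs and FPs."""
    stats = [frame_stats(q) for q in frames]
    num = sum(s for s, _ in stats)
    den = sum(c for _, c in stats)
    return num / den if den else 0.0
```

Because unmatched detections enlarge the denominator, extra low-quality detections lower the score rather than being ignored, which is why no confidence threshold needs to be fixed in advance.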

Our experimental evaluation shows that PDQ rewards detections with accurate spatial probabilities and explicitly evaluates label probability to determine detection quality. PDQ aims to encourage the development of new object detection approaches that provide meaningful spatial and label uncertainty measures.
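The spatial side can be sketched in the same spirit. The code assumes the detection has already been converted into a per-pixel probability map and computes a spatial quality from two losses: a foreground loss on ground-truth segmentation pixels and a background loss on pixels outside the ground-truth box (pixels inside the box but off the mask are ignored), each normalised by the number of ground-truth pixels and combined as exp(-(L_fg + L_bg)). It then combines spatial and label quality with a geometric mean. This is a short approximation of the definitions in the WACV 2020 paper, not the reference implementation in the PDQ repository; the function names, the clipping constant `EPS`, the inclusive box convention and the assumption of a non-empty mask are all choices made for this sketch.

```python
import numpy as np

EPS = 1e-14  # assumed clipping constant so log() never sees exactly 0 or 1


def spatial_quality(prob_map, gt_mask, gt_box):
    """Sketch of spatial quality for one ground-truth/detection pair.

    prob_map: (H, W) detector probability that each pixel belongs to the object.
    gt_mask:  (H, W) bool ground-truth segmentation (assumed non-empty).
    gt_box:   (x1, y1, x2, y2) ground-truth box in pixels, inclusive.
    """
    p = np.clip(prob_map, EPS, 1.0 - EPS)
    n_gt = gt_mask.sum()
    # Foreground loss: low probability on true object pixels is penalised.
    fg_loss = -np.log(p[gt_mask]).sum() / n_gt
    # Background loss: probability mass outside the ground-truth box is penalised.
    ys, xs = np.mgrid[0:p.shape[0], 0:p.shape[1]]
    x1, y1, x2, y2 = gt_box
    outside = (xs < x1) | (xs > x2) | (ys < y1) | (ys > y2)
    bg_loss = -np.log(1.0 - p[outside]).sum() / n_gt
    return float(np.exp(-(fg_loss + bg_loss)))


def pairwise_pdq(prob_map, gt_mask, gt_box, label_probs, gt_class):
    """Geometric mean of spatial quality and label quality."""
    q_s = spatial_quality(prob_map, gt_mask, gt_box)
    q_l = float(label_probs[gt_class])
    return float(np.sqrt(q_s * q_l))
```

A confident map that exactly covers the object scores close to 1, a map that hedges at 0.5 over the object scores about 0.5, and any confident probability placed outside the ground-truth box is penalised heavily through the background loss.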

Check out our PDQ Bitbucket repo to use it in your own research. The repo also contains useful tools for visualising and analysing your data, as well as tools for applying PDQ to COCO data.

Organisers, Support, and Acknowledgements

Stay in touch and follow us on Twitter for news and announcements: @robVisChallenge.

Queensland University of Technology


The Robotic Vision Challenges organisers are with the Australian Centre for Robotic Vision at Queensland University of Technology (QUT) in Brisbane, Australia.

This project was supported by a Google Faculty Research Award to Niko Sünderhauf in 2018.

Supporters

We thank the following supporters for their valuable input and engaging discussions.

University of Adelaide

Google Brain
