QuadricSLAM

QuadricSLAM uses constrained dual quadrics as 3D landmark representations, exploiting their ability to compactly represent the size, position and orientation of an object.
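A constrained dual quadric is commonly written as Q* = Z diag(s1², s2², s3², −1) Zᵀ, where Z = [[R, t], [0ᵀ, 1]] places an ellipsoid with semi-axes (s1, s2, s3) at rotation R and centre t. The minimal sketch below builds this 4×4 matrix in plain Python. It assumes a yaw-only rotation for brevity (a full parametrization would use a general 3D rotation), and the function names are hypothetical, not taken from the QuadricSLAM code. A plane π is tangent to the ellipsoid exactly when πᵀ Q* π = 0, which gives a convenient sanity check.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def constrained_dual_quadric(radii: Sequence[float], translation: Sequence[float],
                             yaw: float = 0.0) -> Matrix:
    """Build Q* = Z diag(s1^2, s2^2, s3^2, -1) Z^T for an ellipsoid.

    radii: semi-axis lengths (s1, s2, s3); translation: centre (tx, ty, tz);
    yaw: rotation about the z axis (a simplifying assumption of this sketch).
    """
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    # Z is the homogeneous transform from the quadric's own frame to the world frame.
    z = [[c, -s, 0.0, tx],
         [s, c, 0.0, ty],
         [0.0, 0.0, 1.0, tz],
         [0.0, 0.0, 0.0, 1.0]]
    s1, s2, s3 = radii
    # Dual quadric of an axis-aligned ellipsoid centred at the origin.
    centred = [[s1 * s1, 0.0, 0.0, 0.0],
               [0.0, s2 * s2, 0.0, 0.0],
               [0.0, 0.0, s3 * s3, 0.0],
               [0.0, 0.0, 0.0, -1.0]]
    return matmul(matmul(z, centred), transpose(z))


def plane_is_tangent(q_dual: Matrix, plane: Sequence[float], tol: float = 1e-9) -> bool:
    """A plane pi = (a, b, c, d) is tangent to the quadric iff pi^T Q* pi = 0."""
    value = sum(plane[i] * q_dual[i][j] * plane[j] for i in range(4) for j in range(4))
    return abs(value) < tol
```

For example, an ellipsoid with semi-axes (2, 1, 0.5) centred at (1, 2, 3) touches the plane x = 3, i.e. `plane_is_tangent(q, (1.0, 0.0, 0.0, -3.0))` holds. Nine numbers (three each for position, orientation and size) fully determine the landmark, which is what makes the representation compact.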

Our paper shows how 2D object detections can directly constrain the quadric parameters via a novel geometric error formulation. We develop a sensor model for object detectors that addresses the challenge of partially visible objects, and demonstrate how to jointly estimate the camera poses and constrained dual quadric parameters in factor-graph-based SLAM with a general perspective camera.
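The idea of a geometric error on bounding boxes can be sketched as follows: project the dual quadric into the image as a dual conic C* = P Q* Pᵀ, with camera matrix P = K[R | t]; take the axis-aligned box of that conic from its four tangent lines; and compare the result with the detected box. This is a minimal sketch under stated assumptions. Partial visibility is approximated here by simply clipping the predicted box to the image bounds, which is a cruder stand-in for the paper's sensor model, and all function names, the camera intrinsics and the example quadric are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(row) for row in zip(*a)]


def project_dual_quadric(q_dual: Matrix, p: Matrix) -> Matrix:
    """Dual conic C* = P Q* P^T for a 3x4 camera matrix P."""
    return matmul(matmul(p, q_dual), transpose(p))


def conic_bounds(c: Matrix) -> Box:
    """Axis-aligned box of the ellipse described by the 3x3 dual conic C*.

    The vertical line x = u is l = (1, 0, -u); it is tangent iff l^T C* l = 0,
    i.e. C11 - 2 u C13 + u^2 C33 = 0 (analogously for y with C22 and C23).
    Assumes the quadric lies entirely in front of the camera, otherwise the
    discriminant can be negative and math.sqrt raises ValueError.
    """
    def tangent_roots(diag: float, off: float) -> Tuple[float, float]:
        disc = math.sqrt(off * off - diag * c[2][2])
        r1, r2 = (off + disc) / c[2][2], (off - disc) / c[2][2]
        return min(r1, r2), max(r1, r2)

    x_min, x_max = tangent_roots(c[0][0], 0.5 * (c[0][2] + c[2][0]))
    y_min, y_max = tangent_roots(c[1][1], 0.5 * (c[1][2] + c[2][1]))
    return x_min, y_min, x_max, y_max


def clip_box(box: Box, width: float, height: float) -> Box:
    """Truncate a box to the image area (crude handling of partial visibility)."""
    x0, y0, x1, y1 = box
    return (min(max(x0, 0.0), width), min(max(y0, 0.0), height),
            min(max(x1, 0.0), width), min(max(y1, 0.0), height))


def box_error(q_dual: Matrix, p: Matrix, detected: Box,
              width: float, height: float) -> List[float]:
    """Residual between the predicted (projected, clipped) box and a detection."""
    predicted = clip_box(conic_bounds(project_dual_quadric(q_dual, p)), width, height)
    return [pr - ob for pr, ob in zip(predicted, detected)]


# Hypothetical example: unit sphere centred at (0, 0, 5), camera at the origin
# looking along +z with focal length 500 px and principal point (320, 240).
sphere = [[1.0, 0.0, 0.0, 0.0],
          [0.0, 1.0, 0.0, 0.0],
          [0.0, 0.0, -24.0, -5.0],
          [0.0, 0.0, -5.0, -1.0]]
camera = [[500.0, 0.0, 320.0, 0.0],
          [0.0, 500.0, 240.0, 0.0],
          [0.0, 0.0, 1.0, 0.0]]  # K [I | 0]
print(conic_bounds(project_dual_quadric(sphere, camera)))
# roughly (217.94, 137.94, 422.06, 342.06)
```

Because the residual is measured directly in pixel coordinates of the box corners, a detector's output can constrain the quadric without any intermediate 3D reconstruction step.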

QuadricSLAM uses objects as landmarks and represents them as constrained dual quadrics in 3D space. QuadricSLAM jointly estimates camera poses and quadric parameters from odometry measurements and object detections, implicitly performing loop closures based on the object observations.
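To illustrate joint estimation and the implicit loop closure, the sketch below solves a deliberately simplified one-dimensional, linear analogue: robot positions x1..x3 (x0 is anchored at 0) and a single landmark l, with odometry factors x_{i+1} − x_i = u_i and observation factors l − x_i = z_i. The real QuadricSLAM problem is nonlinear (6-DoF camera poses, 9-parameter quadrics, bounding-box factors) and would be solved iteratively; this toy version, with hypothetical names throughout, just solves the linear least-squares normal equations AᵀA x = Aᵀb by Gaussian elimination.

```python
from typing import List, Tuple

# A factor is a sparse linear residual: sum(coeff * var) - measurement.
Factor = Tuple[List[Tuple[int, float]], float]


def solve_least_squares(factors: List[Factor], n: int) -> List[float]:
    """Minimise the sum of squared factor residuals via A^T A x = A^T b."""
    ata = [[0.0] * n for _ in range(n)]
    atb = [0.0] * n
    for terms, meas in factors:
        for i, ci in terms:
            atb[i] += ci * meas
            for j, cj in terms:
                ata[i][j] += ci * cj
    # Gaussian elimination with partial pivoting on the augmented system.
    m = [row + [rhs] for row, rhs in zip(ata, atb)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def build_graph(odometry: List[float], observations: List[float]) -> List[Factor]:
    """Variables: x1..xK at indices 0..K-1, landmark l at index K; x0 is fixed at 0."""
    k = len(odometry)
    landmark = k
    factors: List[Factor] = []
    for i, u in enumerate(odometry):
        # x_{i+1} - x_i = u; x0 = 0 is a constant, so it drops out of the first factor.
        terms = [(i, 1.0)] if i == 0 else [(i, 1.0), (i - 1, -1.0)]
        factors.append((terms, u))
    for i, z in enumerate(observations):
        # l - x_i = z; for i = 0 this reduces to l = z because x0 = 0.
        terms = [(landmark, 1.0)] if i == 0 else [(landmark, 1.0), (i - 1, -1.0)]
        factors.append((terms, z))
    return factors


# Biased odometry (true steps are 1.0) combined with exact landmark observations.
estimate = solve_least_squares(build_graph([1.1, 1.1, 1.1], [5.0, 4.0, 3.0, 2.0]), 4)
print(estimate)  # x3 is about 3.114, versus 3.3 from integrating odometry alone
```

Every re-observation of the same landmark ties the current pose back to earlier poses, which is why object observations can act as loop closures without a separate place-recognition step.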

Contributions

With this research, we make the following contributions:

- We show how to parametrize object landmarks in factor-graph based SLAM as constrained dual quadrics.

- We demonstrate that visual object detection systems such as Faster R-CNN, SSD, or Mask R-CNN can be used as sensors in SLAM, and that their observations (the bounding boxes around objects) can directly constrain dual quadric parameters via our novel geometric error formulation.

- To incorporate quadrics into SLAM, we derive a factor graph-based SLAM formulation that jointly estimates the dual quadric and robot pose parameters.

- We provide a large-scale evaluation using 250 indoor trajectories in a high-fidelity simulation environment, combined with real-world experiments on the TUM RGB-D dataset, to show how object detections and the dual quadric parametrization aid the SLAM solution.

Impressions

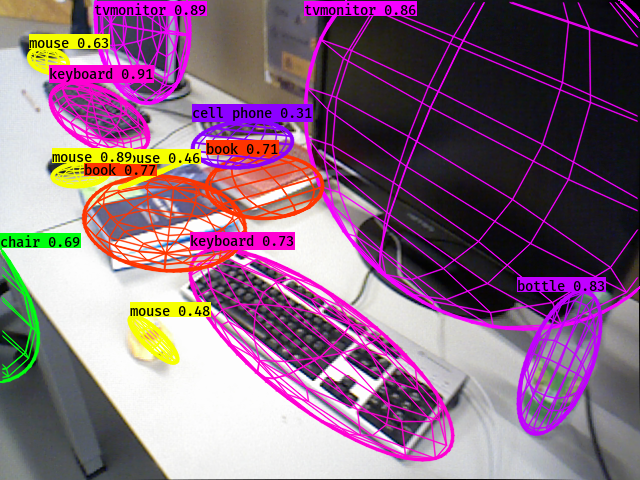

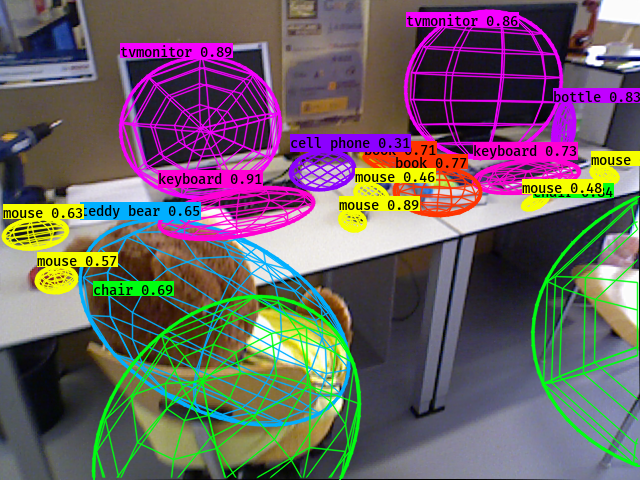

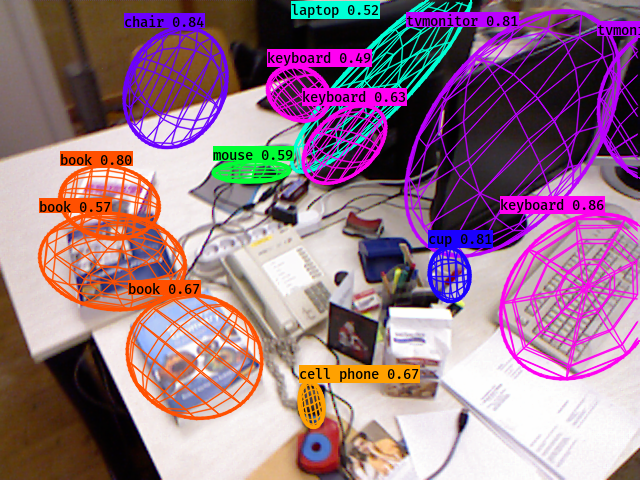

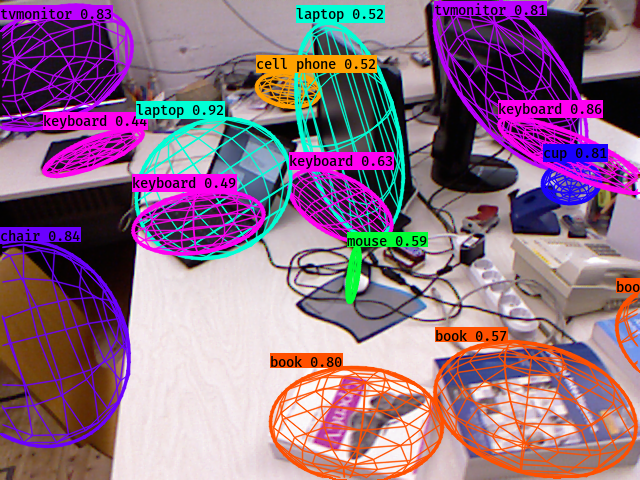

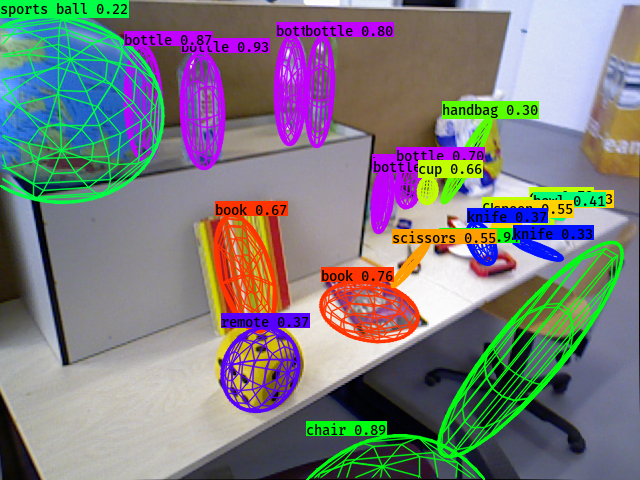

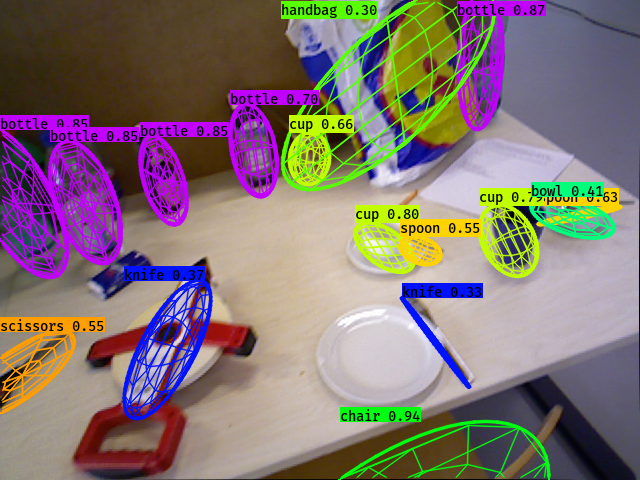

This video illustrates the quality of QuadricSLAM on a real-world image sequence by projecting the estimated quadric landmarks into the video, based on the estimated camera poses.

We use a pretrained YOLOv3 network to generate 2D object detections, which are manually associated with distinct physical objects and then introduced as factors in our factor graph formulation. Odometry measurements for this sequence (fr2_desk, from the TUM RGB-D dataset) were provided by an implementation of ORB-SLAM2 with loop closures disabled.

Publications

Journal

- QuadricSLAM: Dual Quadrics from Object Detections as Landmarks in Object-oriented SLAM, Lachlan Nicholson, Michael Milford, Niko Sünderhauf, IEEE Robotics and Automation Letters (RA-L), 2018.

Workshop

- QuadricSLAM: Dual Quadrics as SLAM Landmarks, Lachlan Nicholson, Michael Milford, Niko Sünderhauf, Proc. of Workshop on Deep Learning for Visual SLAM, IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR-WS), 2018.

- QuadricSLAM: Dual Quadrics from Object Detections, Lachlan Nicholson, Michael Milford, Niko Sünderhauf, Proc. of Workshop on Multimodal Robot Perception: Representing a Complex World, IEEE Conference on Robotics and Automation (ICRA), 2018.

arXiv Preprints

- An Orientation Factor for Object-Oriented SLAM, Natalie Jablonsky, Michael Milford, Niko Sünderhauf, arXiv preprint, 2018.