Publications

2018

Journal

QuadricSLAM: Dual Quadrics from Object Detections as Landmarks in Object-oriented SLAM, Lachlan Nicholson, Michael Milford, Niko Sünderhauf

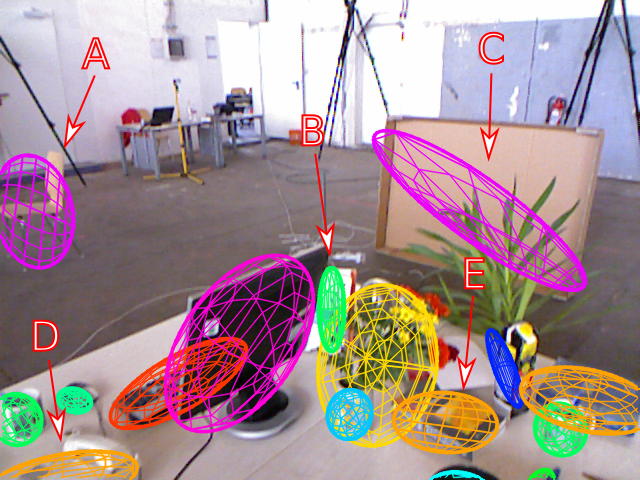

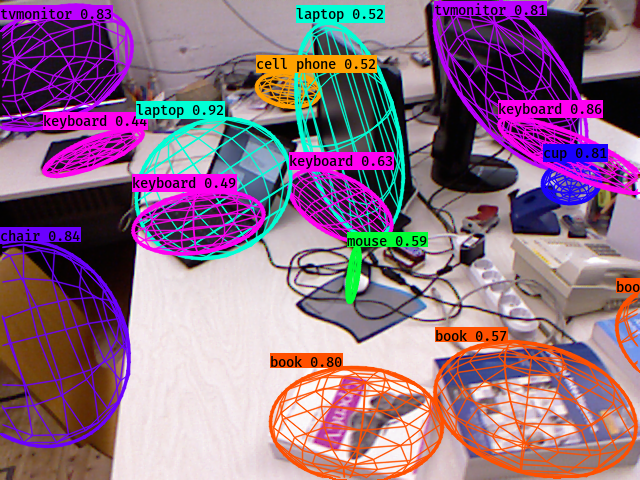

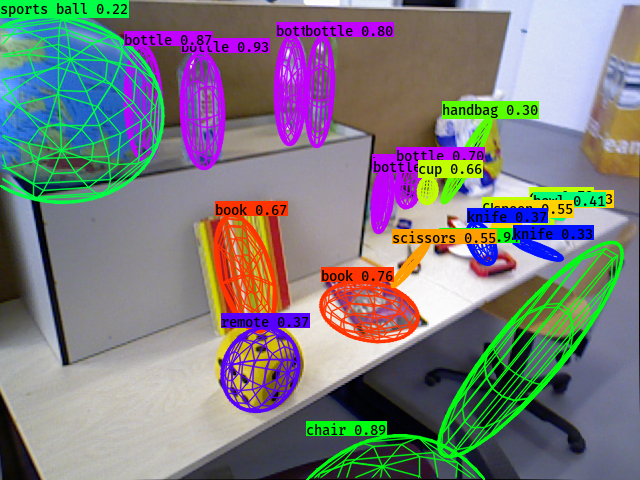

In this paper, we use 2D object detections from multiple views to simultaneously estimate a 3D quadric surface for each object and localize the camera position. We derive a SLAM formulation that uses dual quadrics as 3D landmark representations, exploiting their ability to compactly represent the size, position and orientation of an object, and show how 2D object detections can directly constrain the quadric parameters via a novel geometric error formulation. We develop a sensor model for object detectors that addresses the challenge of partially visible objects, and demonstrate how to jointly estimate the camera pose and constrained dual quadric parameters in factor-graph-based SLAM with a general perspective camera.
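
The core geometric idea, projecting a dual quadric into the image and comparing the box around the resulting conic with a detected box, can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's implementation: it assumes an ellipsoid parameterized by semi-axes, rotation and centre, a pinhole projection P = K[R | t] mapping world to camera coordinates, and a plain box difference as the residual. All function names are hypothetical, and the paper's sensor model for partially visible objects is not included.

```python
# Sketch under assumptions: hypothetical names, not the QuadricSLAM code.
import numpy as np


def dual_quadric(axes, R, center):
    """Dual quadric Q* = Z diag(a^2, b^2, c^2, -1) Z^T of an ellipsoid with
    semi-axes `axes`, rotation R (3x3) and centre `center` (3,)."""
    Z = np.eye(4)
    Z[:3, :3] = R
    Z[:3, 3] = center
    D = np.diag([axes[0] ** 2, axes[1] ** 2, axes[2] ** 2, -1.0])
    return Z @ D @ Z.T


def project_dual_quadric(Q_star, K, R_cw, t_cw):
    """Dual conic C* = P Q* P^T with P = K [R_cw | t_cw] (assumed world -> camera)."""
    P = K @ np.hstack([R_cw, np.reshape(t_cw, (3, 1))])
    return P @ Q_star @ P.T


def conic_bounding_box(C_star):
    """Axis-aligned box (xmin, ymin, xmax, ymax) whose edges are tangent to the conic.

    A vertical image line x = u is l = (1, 0, -u); tangency l^T C* l = 0 gives
    C22 u^2 - 2 C02 u + C00 = 0, and likewise for horizontal lines with C11, C12.
    Assumes the object lies fully in front of the camera (C22 != 0, real roots).
    """
    C = C_star / C_star[2, 2]  # remove the arbitrary projective scale
    hx = np.sqrt(C[0, 2] ** 2 - C[0, 0])
    hy = np.sqrt(C[1, 2] ** 2 - C[1, 1])
    return np.array([C[0, 2] - hx, C[1, 2] - hy, C[0, 2] + hx, C[1, 2] + hy])


def bbox_residual(Q_star, K, R_cw, t_cw, detected_box):
    """Predicted minus detected box: a simple stand-in for the geometric error."""
    predicted = conic_bounding_box(project_dual_quadric(Q_star, K, R_cw, t_cw))
    return predicted - np.asarray(detected_box, dtype=float)
```

As a sanity check, a unit sphere five units in front of a camera with focal length 100 and principal point (320, 240) yields a box centred on the principal point with half-width 100/√24 ≈ 20.41 pixels, which agrees with the tangent-cone geometry (f tan θ with sin θ = 1/5).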

Workshops

QuadricSLAM: Dual Quadrics as SLAM Landmarks, Lachlan Nicholson, Michael Milford, Niko Sünderhauf, Proc. of Workshop on Deep Learning for Visual SLAM, IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, 2018.

QuadricSLAM: Dual Quadrics from Object Detections, Lachlan Nicholson, Michael Milford, Niko Sünderhauf, Proc. of Workshop on Multimodal Robot Perception: Representing a Complex World, IEEE International Conference on Robotics and Automation (ICRA) Workshops, 2018.

arXiv Preprints

An Orientation Factor for Object-Oriented SLAM, Natalie Jablonsky, Michael Milford, Niko Sünderhauf, arXiv preprint, 2018.

Current approaches to object-oriented SLAM lack the ability to incorporate prior knowledge of the scene geometry, such as the expected global orientation of objects. We overcome this limitation by proposing a geometric factor that constrains the global orientation of objects in the map, depending on the objects’ semantics. This new geometric factor is a first example of how semantics can inform and improve geometry in object-oriented SLAM. We implement the geometric factor for the recently proposed QuadricSLAM that represents landmarks as dual quadrics. The factor probabilistically models the quadrics’ major axes to be either perpendicular to or aligned with the direction of gravity, depending on their semantic class. Our experiments on simulated and real-world datasets show that using the proposed factors to incorporate prior knowledge improves both the trajectory and landmark quality.
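
To illustrate the kind of residual such a factor could contribute, the sketch below scores the angle between a quadric's major axis and gravity. It is a hypothetical formulation, not the one in the preprint: the gravity direction (world z axis up), the class-to-prior table, the angle-based residual and the standard deviation `sigma` are all assumptions chosen for clarity.

```python
# Hypothetical orientation residual; not the preprint's formulation.
import numpy as np

GRAVITY = np.array([0.0, 0.0, -1.0])  # assumption: world frame with z pointing up

# Hypothetical class -> orientation prior table, for illustration only.
ORIENTATION_PRIOR = {
    "bottle": "aligned",          # tall objects: major axis along gravity
    "keyboard": "perpendicular",  # flat, elongated objects: major axis horizontal
}


def orientation_residual(R, axes, label, sigma=0.1):
    """Whitened angular residual of a quadric's major axis with respect to gravity.

    R is the quadric's 3x3 rotation (columns = principal axes), `axes` its
    semi-axis lengths and `label` its semantic class. Classes without a prior
    contribute 0.0.
    """
    prior = ORIENTATION_PRIOR.get(label)
    if prior is None:
        return 0.0
    u = R[:, int(np.argmax(axes))]  # direction of the longest semi-axis
    # Angle between the axis *line* and gravity, in [0, pi/2]; the axis sign is irrelevant.
    cos_angle = abs(float(u @ GRAVITY)) / np.linalg.norm(u)
    theta = np.arccos(np.clip(cos_angle, 0.0, 1.0))
    error = theta if prior == "aligned" else np.pi / 2 - theta
    return error / sigma
```

Using the angle itself, rather than a quantity such as 1 - |cos θ|, keeps the error roughly proportional to the misalignment near both the aligned and the perpendicular configurations.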

2017

Conference

Meaningful Maps With Object-Oriented Semantic Mapping, Niko Sünderhauf, Trung T. Pham, Yasir Latif, Michael Milford, Ian Reid. International Conference on Intelligent Robots and Systems (IROS), 2017.

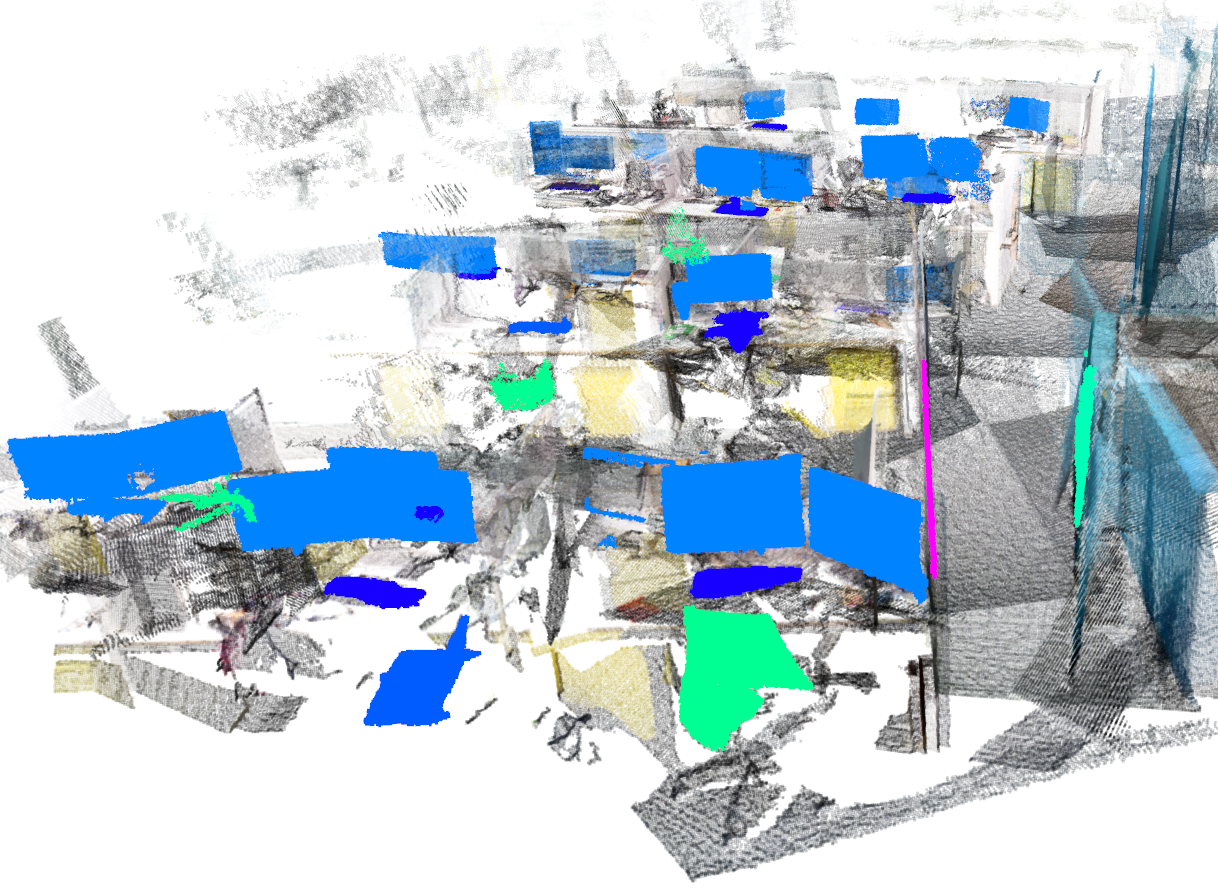

In this paper we address the problem of building environmental maps that include both semantically meaningful, object-level entities and point- or mesh-based geometrical representations. We simultaneously build geometric point cloud models of previously unseen instances of known object classes and create a map that contains these object models as central entities. Our system leverages sparse, feature-based RGB-D SLAM, image-based deep-learning object detection and 3D unsupervised segmentation.
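
The geometric half of such a pipeline can be illustrated with a toy sketch. It back-projects the depth pixels inside a detection box, moves them into the map frame with the SLAM camera pose, and merges them into the nearest existing object of the same class. This is a simplified illustration, not the system described in the paper: it skips the unsupervised 3D segmentation step, assumes pinhole intrinsics `fx, fy, cx, cy` and depth in metres, and uses a hypothetical centroid-distance threshold `max_dist` for data association.

```python
# Toy object-map update; names and the association rule are hypothetical.
import numpy as np


def backproject_box(depth, box, fx, fy, cx, cy):
    """Camera-frame 3D points for valid (non-zero) depth pixels inside box (u0, v0, u1, v1)."""
    u0, v0, u1, v1 = box
    v, u = np.mgrid[v0:v1, u0:u1]  # pixel row (v) and column (u) indices
    z = depth[v0:v1, u0:u1]
    valid = z > 0
    x = (u[valid] - cx) * z[valid] / fx
    y = (v[valid] - cy) * z[valid] / fy
    return np.stack([x, y, z[valid]], axis=1)


def to_map_frame(points, R_wc, t_wc):
    """Transform camera-frame points into the map frame using the camera pose."""
    return points @ R_wc.T + t_wc


def associate(objects, label, points, max_dist=0.5):
    """Merge `points` into the closest same-class object within max_dist metres,
    or start a new object. `objects` is a list of dicts, updated in place."""
    centroid = points.mean(axis=0)
    best, best_dist = None, max_dist
    for obj in objects:
        if obj["label"] != label:
            continue
        dist = np.linalg.norm(obj["centroid"] - centroid)
        if dist < best_dist:
            best, best_dist = obj, dist
    if best is None:
        best = {"label": label, "points": points, "centroid": centroid}
        objects.append(best)
    else:
        best["points"] = np.vstack([best["points"], points])
        best["centroid"] = best["points"].mean(axis=0)
    return best
```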

Preprint

Dual Quadrics from Object Detection Bounding Boxes as Landmark Representations in SLAM, Niko Sünderhauf, Michael Milford. arXiv preprint, 2017.

In this paper, we formulated our initial ideas on using dual quadrics as landmarks for SLAM, and showed how dual quadrics can be constrained from the bounding box observations typically obtained from visual object detection.
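
One way to make the bounding-box constraint concrete is as follows. Each box edge is an image line l whose back-projected plane π = Pᵀl must be tangent to the object's quadric, i.e. πᵀQ*π = 0. This condition is linear in the ten independent entries of the symmetric 4×4 dual quadric, so boxes from several views can be stacked into a homogeneous linear system and solved by SVD. The sketch assumes noise-free boxes given as (xmin, ymin, xmax, ymax), known 3×4 projection matrices, and the scale convention Q*[3, 3] = -1. The function names are hypothetical, and the sketch illustrates this classic linear estimate rather than the code behind the preprint.

```python
# Linear dual-quadric estimate from boxes; hypothetical names, illustrative only.
from itertools import combinations_with_replacement

import numpy as np

# The 10 independent (i, j), i <= j, entries of a symmetric 4x4 matrix.
PAIRS = list(combinations_with_replacement(range(4), 2))


def box_planes(P, box):
    """Planes pi = P^T l back-projected from the four edges of box (xmin, ymin, xmax, ymax)."""
    xmin, ymin, xmax, ymax = box
    lines = np.array([[1.0, 0.0, -xmin], [1.0, 0.0, -xmax],
                      [0.0, 1.0, -ymin], [0.0, 1.0, -ymax]])
    return lines @ P  # row k is (P^T l_k)^T


def tangency_row(pi):
    """Coefficients of pi^T Q* pi = 0 with respect to the 10 unique entries of Q*."""
    return np.array([pi[i] * pi[j] * (1.0 if i == j else 2.0) for i, j in PAIRS])


def fit_dual_quadric(projections, boxes):
    """Least-squares dual quadric from boxes in >= 3 views (>= 9 constraints)."""
    A = np.array([tangency_row(pi)
                  for P, box in zip(projections, boxes)
                  for pi in box_planes(P, box)])
    _, _, Vt = np.linalg.svd(A)
    q = Vt[-1]  # right singular vector with the smallest singular value
    Q = np.zeros((4, 4))
    for k, (i, j) in enumerate(PAIRS):
        Q[i, j] = Q[j, i] = q[k]
    return Q / -Q[3, 3]  # fix the projective scale so that Q*[3, 3] = -1
```

With noisy detections, this unconstrained solution need not correspond to an ellipsoid. It is therefore best viewed as an initial estimate rather than a final landmark.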

2016

Conference

Place Categorization and Semantic Mapping on a Mobile Robot, Niko Sünderhauf, Feras Dayoub, Sean McMahon, Ben Talbot, Ruth Schulz, Peter Corke, Gordon Wyeth, Ben Upcroft, Michael Milford. International Conference on Robotics and Automation (ICRA), 2016.

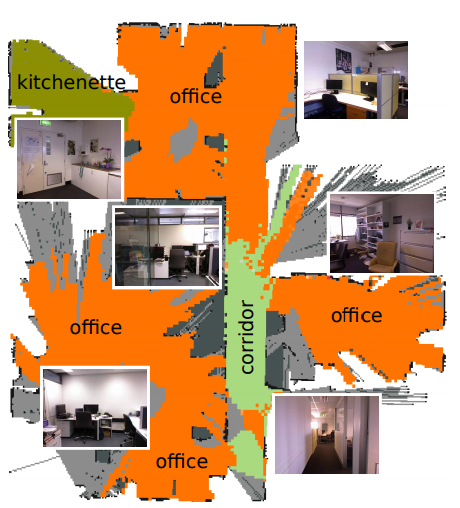

In this paper we focus on the challenging problem of place categorization and semantic mapping on a robot without environment-specific training. Motivated by the ongoing success of convolutional networks in various visual recognition tasks, we build our system upon a state-of-the-art convolutional network. We overcome its closed-set limitations by complementing the network with a series of one-vs-all classifiers that can learn to recognize new semantic classes online. Prior domain knowledge is incorporated by embedding the classification system into a Bayesian filter framework that also ensures temporal coherence. We evaluate the classification accuracy of the system on a robot that maps a variety of places on our campus in real time. We show how semantic information can boost robotic object detection performance and how the semantic map can be used to modulate the robot’s behaviour during navigation tasks.
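
The temporal-coherence role of a Bayesian filter can be illustrated with a discrete (histogram) Bayes filter over place categories. This is a hypothetical sketch, not the paper's filter. It assumes a symmetric transition model in which the robot stays in the same category with probability `p_stay`, and it treats the classifier's per-class scores as measurement likelihoods.

```python
# Hypothetical discrete Bayes filter over place categories; not the paper's model.
import numpy as np


def transition_matrix(n_classes, p_stay=0.9):
    """T[i, j] = P(category j at t | category i at t-1); switching is uniform."""
    T = np.full((n_classes, n_classes), (1.0 - p_stay) / (n_classes - 1))
    np.fill_diagonal(T, p_stay)
    return T


def bayes_filter_step(belief, likelihood, T):
    """One predict-update cycle of a discrete Bayes filter over place categories."""
    predicted = belief @ T              # predict: the robot may have entered another place
    posterior = predicted * likelihood  # update: weight by the classifier's scores
    return posterior / posterior.sum()  # normalise to a probability distribution
```

The prediction step keeps most of the probability mass in the current category. As a result, a single misclassified frame shifts the belief only partially, which illustrates the kind of temporal coherence the abstract describes.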