Reliability of Deep Learning for Robotics

Despite the enormous progress of deep learning over the past decade, and the appearance of foundation models such as large language models and vision-language models in the past few years, questions about their reliability and robustness remain. How do deep learning models react when presented with out-of-distribution data? How can they express the uncertainty in their predictions, and know when they don’t know? Are recent foundation models immune to open-set or out-of-distribution conditions?

These questions are of enormous importance if we want to apply deep learning (and AI in general) to safety-critical applications where mistakes could have catastrophic consequences.

Our research tackles critical challenges like open-set recognition, out-of-distribution (OOD) detection, and failure identification in visual perception.

Join the Team!

If you want to join our research efforts in these areas, please contact Dr Dimity Miller to apply for a PhD position.

Publications

-

Open-Set Recognition in the Age of Vision-Language Models arXiv preprint arXiv:2403.16528, 2024.

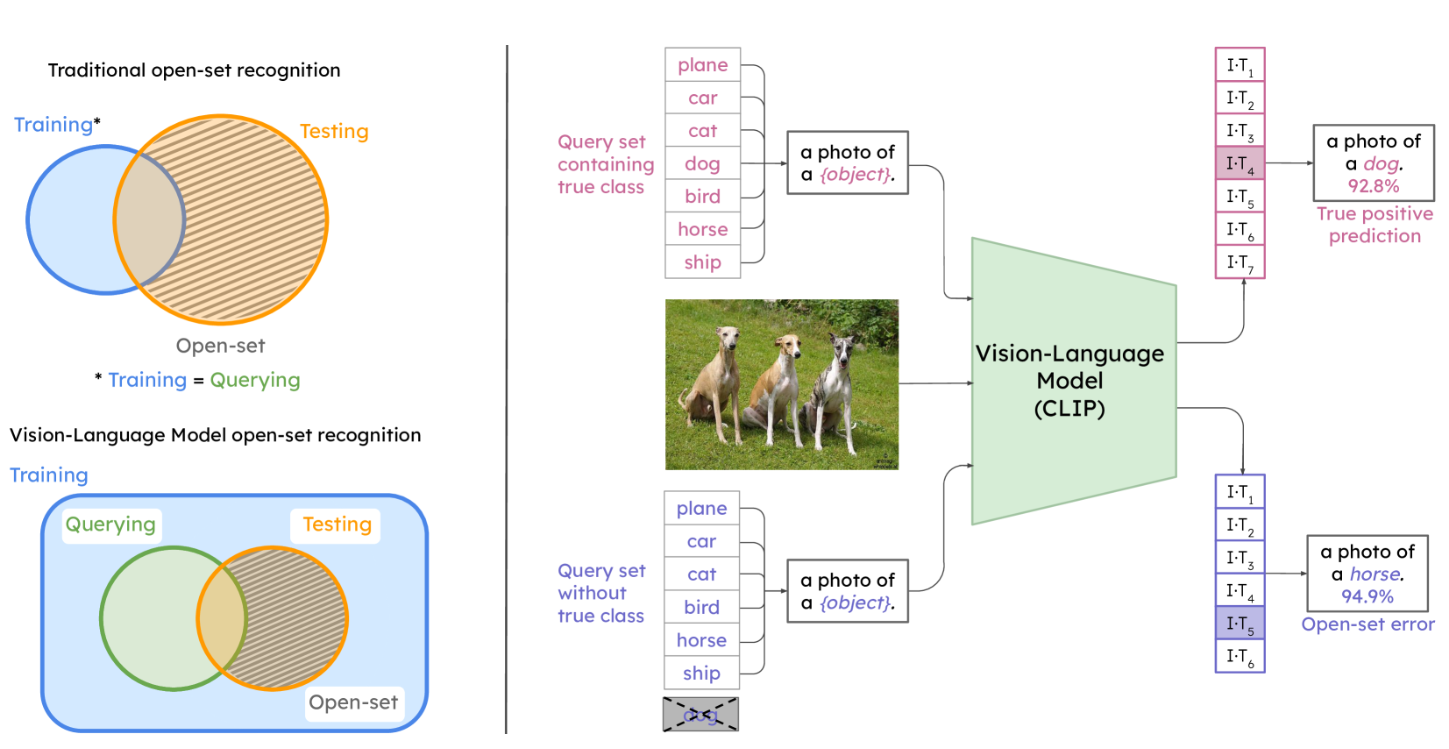

Are vision-language models (VLMs) open-set models because they are trained on internet-scale datasets? We answer this question with a clear no – VLMs introduce closed-set assumptions via their finite query set, making them vulnerable to open-set conditions. We systematically evaluate VLMs for open-set recognition and find they frequently misclassify objects not contained in their query set, leading to alarmingly low precision when tuned for high recall and vice versa. We show that naively increasing the size of the query set to contain more and more classes does not mitigate this problem, but instead causes diminishing task performance and open-set performance. We establish a revised definition of the open-set problem for the age of VLMs, define a new benchmark and evaluation protocol to facilitate standardised evaluation and research in this important area, and evaluate promising baseline approaches based on predictive uncertainty and dedicated negative embeddings on a range of VLM classifiers and object detectors.

[arXiv]
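The closed-set assumption introduced by a finite query set can be illustrated with a small sketch. It is not the paper's benchmark or method: the embeddings, the `temperature` and `threshold` values, and the function names are all hypothetical. The softmax always distributes its probability mass over the queries, so an object outside the query set still receives a label unless a predictive-uncertainty baseline (here, a maximum-softmax threshold) rejects it.

```python
import math


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify_open_set(image_emb, query_embs, labels,
                      temperature=0.1, threshold=0.7):
    """Score an image against a finite query set (hypothetical values).

    Returns ("unknown", p) when the maximum softmax probability p falls
    below the threshold, otherwise the best-matching query label.
    """
    sims = [cosine(image_emb, q) / temperature for q in query_embs]
    probs = softmax(sims)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "unknown", probs[best]
    return labels[best], probs[best]


# Toy 2-D embeddings standing in for text and image embeddings.
labels = ["cat", "dog"]
queries = [[1.0, 0.0], [0.0, 1.0]]
print(classify_open_set([1.0, 0.05], queries, labels))  # close to "cat"
print(classify_open_set([1.0, 1.0], queries, labels))   # equally far from both
```

Adding more queries only enlarges the set over which the softmax is normalised; it does not add a way to say "none of these", which is why the rejection step is separate.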


-

SAFE: Sensitivity-Aware Features for Out-of-Distribution Object Detection In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2023.

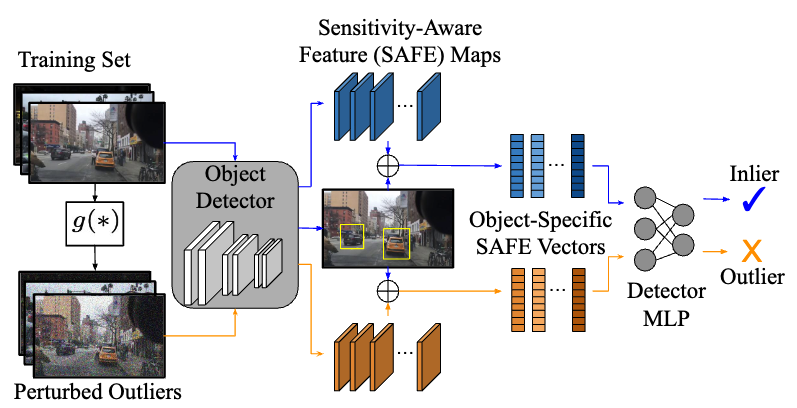

We address the problem of out-of-distribution (OOD) detection for the task of object detection. We show that residual convolutional layers with batch normalisation produce Sensitivity-Aware FEatures (SAFE) that are consistently powerful for distinguishing in-distribution from out-of-distribution detections. We extract SAFE vectors for every detected object, and train a multilayer perceptron on the surrogate task of distinguishing adversarially perturbed from clean in-distribution examples. This circumvents the need for realistic OOD training data, computationally expensive generative models, or retraining of the base object detector.

[arXiv]


-

Hyperdimensional Feature Fusion for Out-Of-Distribution Detection In IEEE Winter Conference on Applications of Computer Vision (WACV), 2023.

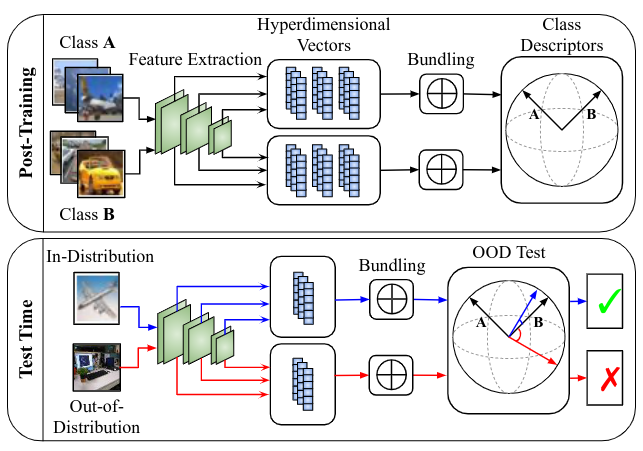

We introduce powerful ideas from Hyperdimensional Computing into the challenging field of Out-of-Distribution (OOD) detection. In contrast to most existing works that perform OOD detection based on only a single layer of a neural network, we use similarity-preserving semi-orthogonal projection matrices to project the feature maps from multiple layers into a common vector space. By repeatedly applying the bundling operation ⊕, we create expressive class-specific descriptor vectors for all in-distribution classes.

[arXiv]
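The projection and bundling steps can be sketched as follows. This is an illustration under stated assumptions rather than the paper's implementation: bundling is taken to be element-wise addition, the semi-orthogonal matrices are built by Gram-Schmidt on random Gaussian vectors, and the layer sizes, the common dimension of 16, and all function names are hypothetical.

```python
import math
import random


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def semi_orthogonal_columns(out_dim, in_dim, seed=0):
    """Return in_dim orthonormal vectors of length out_dim (out_dim >= in_dim).

    Stacked as columns they form a matrix P with P^T P = I, so projecting
    with P preserves inner products (and hence similarity).
    """
    rng = random.Random(seed)
    columns = []
    while len(columns) < in_dim:
        v = [rng.gauss(0.0, 1.0) for _ in range(out_dim)]
        for u in columns:  # remove components along earlier columns
            overlap = dot(v, u)
            v = [a - overlap * b for a, b in zip(v, u)]
        norm = math.sqrt(dot(v, v))
        if norm > 1e-9:
            columns.append([a / norm for a in v])
    return columns


def project(columns, features):
    """Compute P @ features, where P has the given columns."""
    out_dim = len(columns[0])
    return [sum(col[i] * f for col, f in zip(columns, features))
            for i in range(out_dim)]


def bundle(vectors):
    """Bundling, assumed here to be element-wise addition."""
    return [sum(vals) for vals in zip(*vectors)]


def describe(layer_features, projections):
    """Fuse one sample's per-layer features into a single vector."""
    return bundle([project(p, f) for p, f in zip(projections, layer_features)])


# Two hypothetical layers with 3 and 5 features, fused in a 16-D space.
projections = [semi_orthogonal_columns(16, 3, seed=1),
               semi_orthogonal_columns(16, 5, seed=2)]
samples = [[[1.0, 2.0, 3.0], [1.0, 0.0, 0.0, 0.0, 0.0]],
           [[1.1, 1.9, 3.2], [0.9, 0.1, 0.0, 0.0, 0.0]]]
class_descriptor = bundle([describe(s, projections) for s in samples])
```

A test sample would then be described the same way and compared with each class descriptor, for example by cosine similarity, with low similarity to every class suggesting an OOD input.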


-

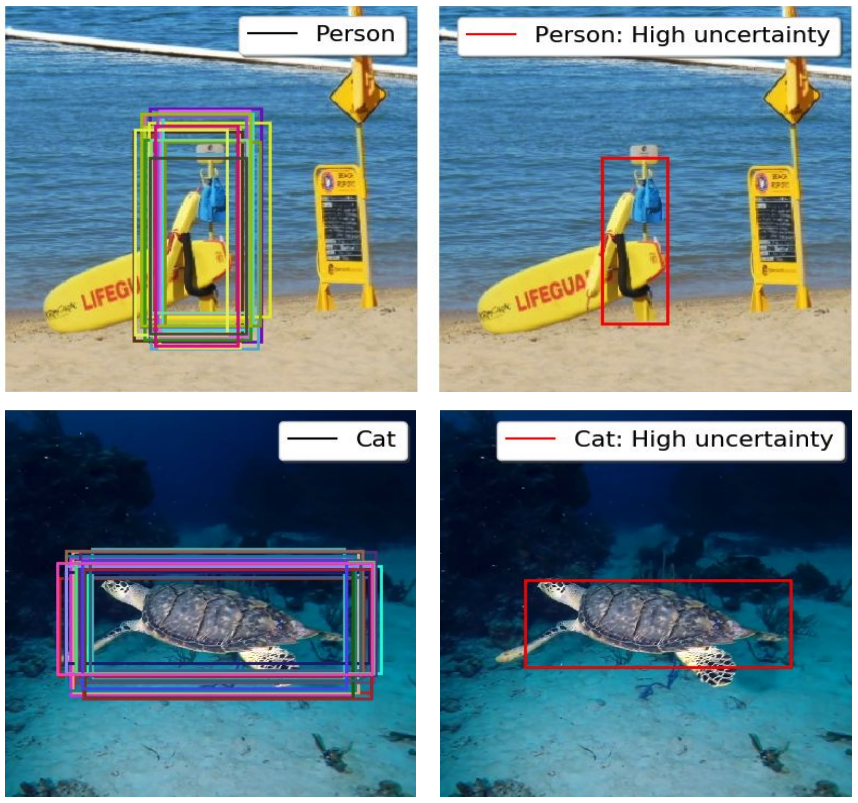

Uncertainty for Identifying Open-Set Errors in Visual Object Detection IEEE Robotics and Automation Letters (RA-L), 2021.

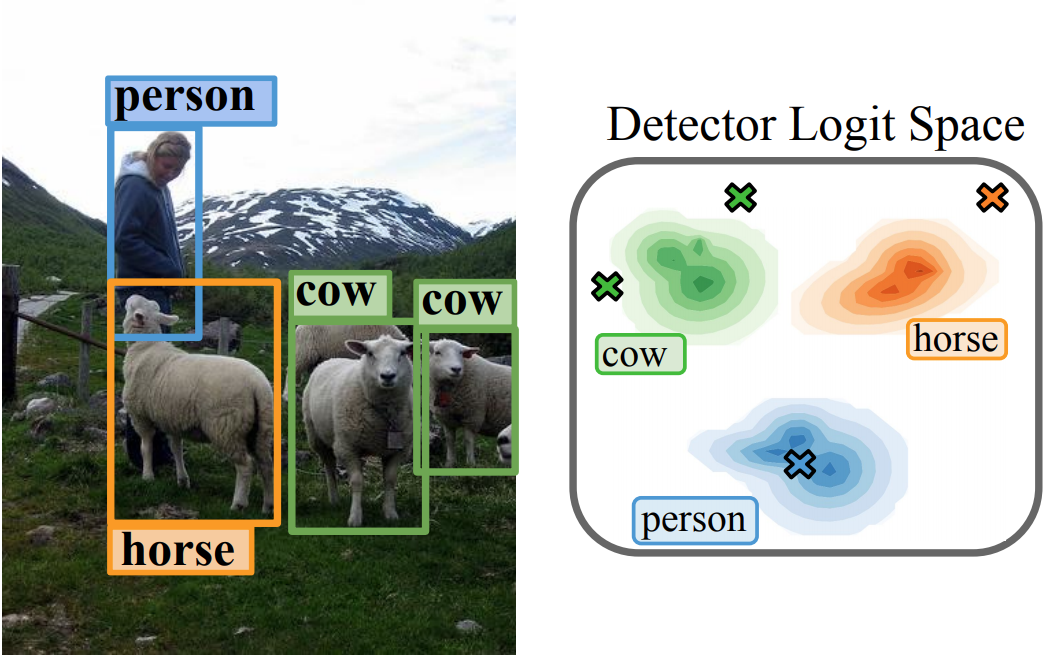

We propose GMM-Det, a real-time method for extracting epistemic uncertainty from object detectors to identify and reject open-set errors. GMM-Det trains the detector to produce a structured logit space that is modelled with class-specific Gaussian Mixture Models. At test time, open-set errors are identified by their low log-probability under all Gaussian Mixture Models. We test two common detector architectures, Faster R-CNN and RetinaNet, across three varied datasets spanning robotics and computer vision. Our results show that GMM-Det consistently outperforms existing uncertainty techniques for identifying and rejecting open-set detections, especially at the low-error-rate operating point required for safety-critical applications. GMM-Det maintains object detection performance, and introduces only minimal computational overhead.

[arXiv]
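The test-time rejection rule can be sketched in a few lines. This is only an illustration, not the GMM-Det code: the mixtures are assumed to have diagonal covariances and to be already fitted, and the class names, parameters, and `threshold` are made up for the example.

```python
import math


def log_gaussian_diag(x, mean, var):
    """Log-density of a Gaussian with diagonal covariance."""
    return sum(-0.5 * (math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))


def gmm_log_prob(x, components):
    """Log-probability of x under a mixture given as (weight, mean, var) tuples."""
    terms = [math.log(w) + log_gaussian_diag(x, m, v) for w, m, v in components]
    top = max(terms)  # log-sum-exp for numerical stability
    return top + math.log(sum(math.exp(t - top) for t in terms))


def is_open_set_error(logits, class_gmms, threshold):
    """Flag a detection whose logits are unlikely under every class mixture."""
    best = max(gmm_log_prob(logits, comps) for comps in class_gmms.values())
    return best < threshold


# Hypothetical fitted mixtures in a 2-D logit space.
class_gmms = {
    "car": [(1.0, [5.0, 0.0], [1.0, 1.0])],
    "person": [(0.5, [0.0, 5.0], [1.0, 1.0]), (0.5, [0.0, 6.0], [1.0, 1.0])],
}
print(is_open_set_error([5.0, 0.0], class_gmms, threshold=-10.0))    # near "car"
print(is_open_set_error([-5.0, -5.0], class_gmms, threshold=-10.0))  # far from all
```

In practice the threshold would be chosen on validation data to reach the desired error rate.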


-

FSNet: A Failure Detection Framework for Semantic Segmentation IEEE Robotics and Automation Letters, 2022.

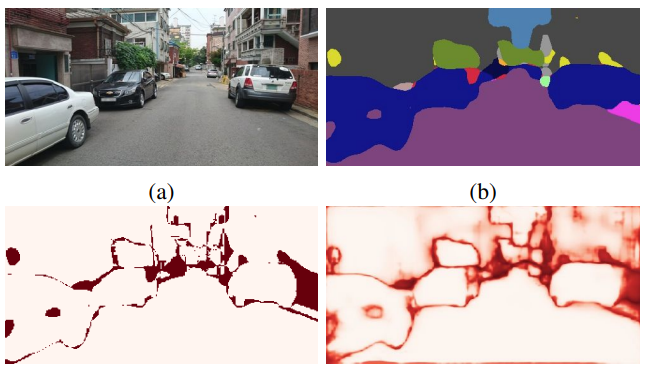

Semantic segmentation is an important task that helps autonomous vehicles understand their surroundings and navigate safely. However, during deployment, even the most mature segmentation models are vulnerable to various external factors that can degrade the segmentation performance with potentially catastrophic consequences for the vehicle and its surroundings. To address this issue, we propose a failure detection framework to identify pixel-level misclassification. We do so by exploiting internal features of the segmentation model and training it simultaneously with a failure detection network. During deployment, the failure detector flags areas in the image where the segmentation model has failed to segment correctly.

[arXiv]


-

Class Anchor Clustering: A Loss for Distance-based Open Set Recognition In IEEE Winter Conference on Applications of Computer Vision (WACV), 2021.

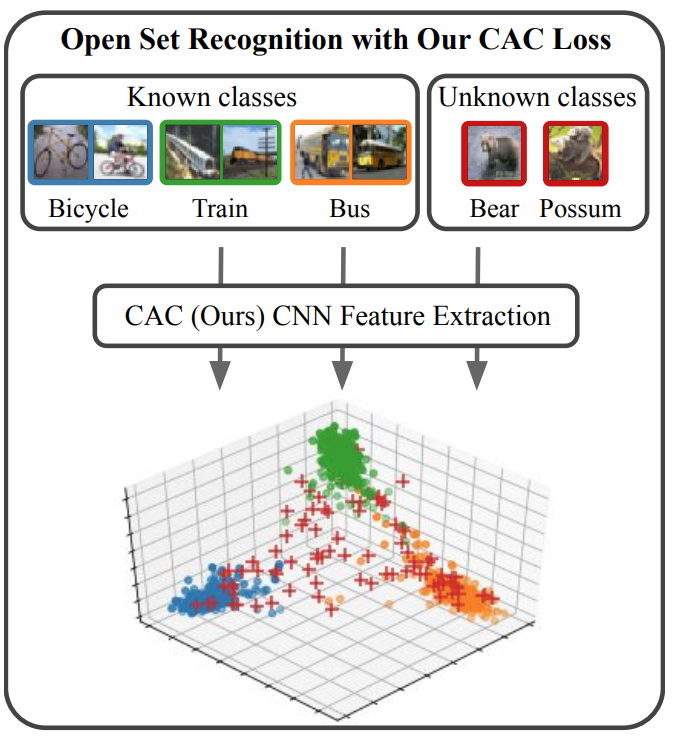

Existing open set classifiers distinguish between known and unknown inputs by measuring distance in a network’s logit space, assuming that known inputs cluster closer to the training data than unknown inputs. However, this approach is typically applied post-hoc to networks trained with cross-entropy loss, which neither guarantees nor encourages the hoped-for clustering behaviour. To overcome this limitation, we introduce Class Anchor Clustering (CAC) loss. CAC is an entirely distance-based loss that explicitly encourages training data to form tight clusters around class anchors in the logit space. A classifier trained with CAC loss outperforms prior techniques on the challenging TinyImageNet dataset, achieving a 2.4% performance increase in AUROC.

[arXiv]
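A distance-based loss of this kind can be sketched as below. The exact formulation is an assumption made for illustration and should not be read as the published CAC loss: anchors are taken to be scaled one-hot vectors, the loss combines a pull towards the correct anchor with a push away from the others, and `magnitude`, `anchor_weight`, and `max_distance` are hypothetical parameters.

```python
import math


def one_hot_anchors(num_classes, magnitude=10.0):
    """One anchor per class: a scaled one-hot vector in logit space (assumed)."""
    return [[magnitude if i == j else 0.0 for j in range(num_classes)]
            for i in range(num_classes)]


def distances_to_anchors(logits, anchors):
    """Euclidean distance from a logit vector to every class anchor."""
    return [math.dist(logits, a) for a in anchors]


def distance_loss(logits, label, anchors, anchor_weight=0.1):
    """Illustrative distance-based loss for a single training example.

    pull: distance to the correct anchor (encourages tight clusters).
    push: grows when another anchor is nearly as close as the correct one.
    """
    d = distances_to_anchors(logits, anchors)
    pull = d[label]
    push = math.log(1.0 + sum(math.exp(d[label] - dj)
                              for j, dj in enumerate(d) if j != label))
    return push + anchor_weight * pull


def predict(logits, anchors, max_distance):
    """Nearest-anchor class, or None (unknown) if every anchor is too far."""
    d = distances_to_anchors(logits, anchors)
    nearest = min(range(len(d)), key=d.__getitem__)
    return nearest if d[nearest] <= max_distance else None


anchors = one_hot_anchors(3)
print(predict([9.5, 0.2, 0.0], anchors, max_distance=3.0))  # close to anchor 0
print(predict([3.0, 3.0, 3.0], anchors, max_distance=3.0))  # far from all anchors
```

Because training and inference both use distance to the anchors, the rejection rule in `predict` measures the same quantity the loss optimised, unlike a post-hoc distance applied to a cross-entropy network.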


-

Online Monitoring of Object Detection Performance Post-Deployment In Proc. of IEEE International Conference on Intelligent Robots and Systems (IROS), 2021.

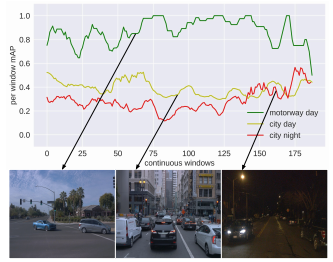

Post-deployment, an object detector is expected to operate at a similar level of performance that was reported on its testing dataset. However, when deployed onboard mobile robots that operate under varying and complex environmental conditions, the detector’s performance can fluctuate and occasionally degrade severely without warning. Undetected, this can lead the robot to take unsafe and risky actions based on low-quality and unreliable object detections. We address this problem and introduce a cascaded neural network that monitors the performance of the object detector by predicting the quality of its mean average precision (mAP) on a sliding window of the input frames.

[arXiv]


-

Performance Monitoring of Object Detection During Deployment arXiv preprint arXiv:2009.08650, 2020.

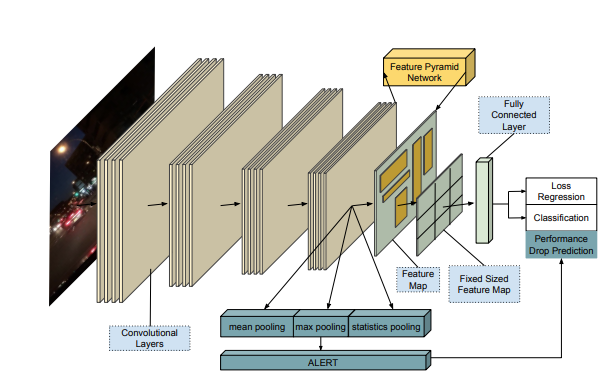

Performance monitoring of object detection is crucial for safety-critical applications such as autonomous vehicles that operate under varying and complex environmental conditions. Currently, object detectors are evaluated using summary metrics based on a single dataset that is assumed to be representative of all future deployment conditions. In practice, this assumption does not hold, and the performance fluctuates as a function of the deployment conditions. To address this issue, we propose an introspection approach to performance monitoring during deployment without the need for ground truth data. We do so by predicting when the per-frame mean average precision drops below a critical threshold using the detector’s internal features.

[arXiv]


-

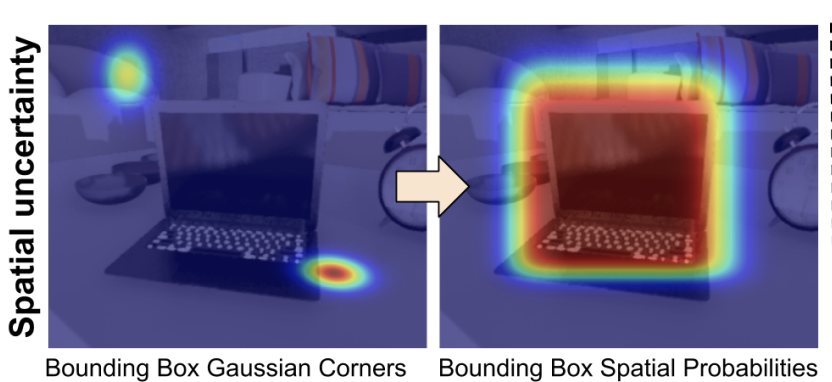

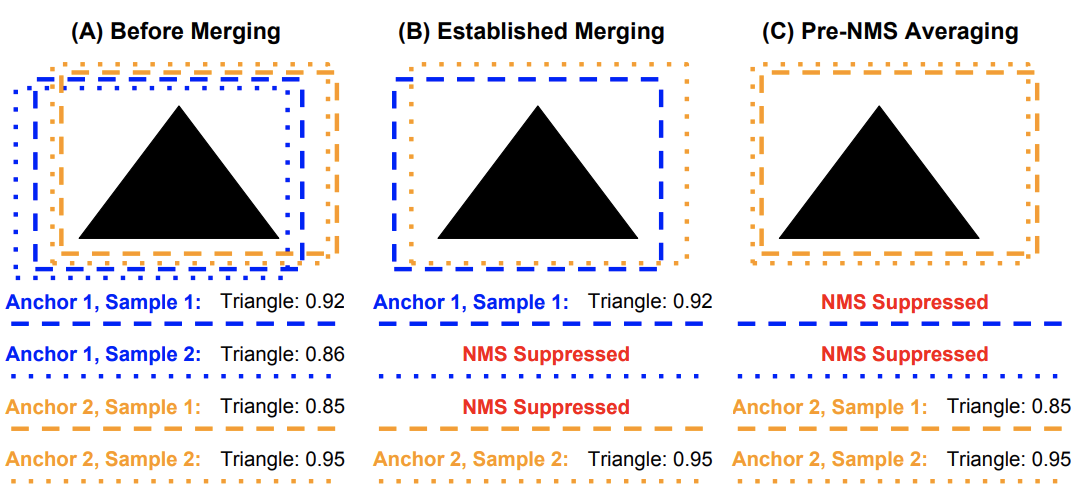

Probabilistic Object Detection: Definition and Evaluation In IEEE Winter Conference on Applications of Computer Vision (WACV), 2020.

We introduce Probabilistic Object Detection, the task of detecting objects in images and accurately quantifying the spatial and semantic uncertainties of the detections. Given the lack of methods capable of assessing such probabilistic object detections, we present the new Probability-based Detection Quality measure (PDQ). Unlike AP-based measures, PDQ has no arbitrary thresholds and rewards spatial and label quality, and foreground/background separation quality while explicitly penalising false positive and false negative detections.

[arXiv]


-

A Probabilistic Challenge for Object Detection Nature Machine Intelligence, 2019.

To safely operate in the real world, robots need to evaluate how confident they are about what they see.

A new competition challenges computer vision algorithms to not just detect and localize objects, but also report how certain they are.

To this end, we introduce Probabilistic Object Detection, the task of detecting objects in images and accurately quantifying the spatial and semantic uncertainties of the detections.


-

Benchmarking Sampling-based Probabilistic Object Detectors In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2019.

This paper provides the first benchmark for sampling-based probabilistic object detectors. A probabilistic object detector expresses uncertainty for all detections that reliably indicates object localisation and classification performance. We compare performance for two sampling-based uncertainty techniques, namely Monte Carlo Dropout and Deep Ensembles, when implemented into one-stage and two-stage object detectors, Single Shot MultiBox Detector and Faster R-CNN.


-

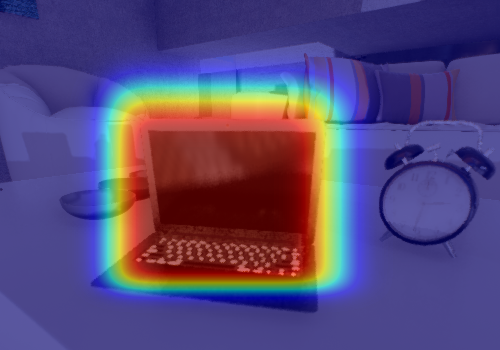

Evaluating Merging Strategies for Sampling-based Uncertainty Techniques in Object Detection In Proc. of IEEE International Conference on Robotics and Automation (ICRA), 2019.

There has been a recent emergence of sampling-based techniques for estimating epistemic uncertainty in deep neural networks. While these methods can be applied to classification or semantic segmentation tasks by simply averaging samples, this is not the case for object detection, where detection sample bounding boxes must be accurately associated and merged. A weak merging strategy can significantly degrade the performance of the detector and yield an unreliable uncertainty measure. This paper provides the first in-depth investigation of the effect of different association and merging strategies. We compare different combinations of three spatial and two semantic affinity measures with four clustering methods for MC Dropout with a Single Shot Multi-Box Detector. Our results show that the correct choice of affinity-clustering combinations can greatly improve the effectiveness of the classification and spatial uncertainty estimation and the resulting object detection performance. We base our evaluation on a new mix of datasets that emulate near open-set conditions (semantically similar unknown classes), distant open-set conditions (semantically dissimilar unknown classes) and the common closed-set conditions (only known classes).

[arXiv]
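To make the association and merging problem concrete, here is a minimal sketch of one possible combination: IoU as the spatial affinity, agreement of the winning label as the semantic affinity, and a simple greedy sequential clustering. It is one illustrative choice, not the set of strategies evaluated in the paper, and the `min_iou` value and function names are assumptions.

```python
def iou(a, b):
    """Intersection over union of two boxes given as [x1, y1, x2, y2]."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def same_label(p, q):
    """Semantic affinity: do two score vectors agree on the winning class?"""
    return p.index(max(p)) == q.index(max(q))


def cluster_detections(detections, min_iou=0.5):
    """Greedily group (box, probs) samples collected over several passes.

    Each sample joins the first cluster whose first member overlaps it
    enough and shares its winning label; otherwise it starts a new cluster.
    """
    clusters = []
    for box, probs in detections:
        for cluster in clusters:
            ref_box, ref_probs = cluster[0]
            if iou(box, ref_box) >= min_iou and same_label(probs, ref_probs):
                cluster.append((box, probs))
                break
        else:
            clusters.append([(box, probs)])
    return clusters


def merge(cluster):
    """Average the boxes and the class scores of one cluster."""
    n = len(cluster)
    box = [sum(b[i] for b, _ in cluster) / n for i in range(4)]
    probs = [sum(p[k] for _, p in cluster) / n
             for k in range(len(cluster[0][1]))]
    return box, probs


# Three sampled detections: two of the same object, one elsewhere.
samples = [([0.0, 0.0, 10.0, 10.0], [0.9, 0.1]),
           ([1.0, 1.0, 11.0, 11.0], [0.7, 0.3]),
           ([50.0, 50.0, 60.0, 60.0], [0.2, 0.8])]
merged = [merge(c) for c in cluster_detections(samples)]
```

A threshold that is too strict splits one object into several clusters, while one that is too loose fuses neighbouring objects; both distort the averaged scores that the uncertainty estimate relies on.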


-

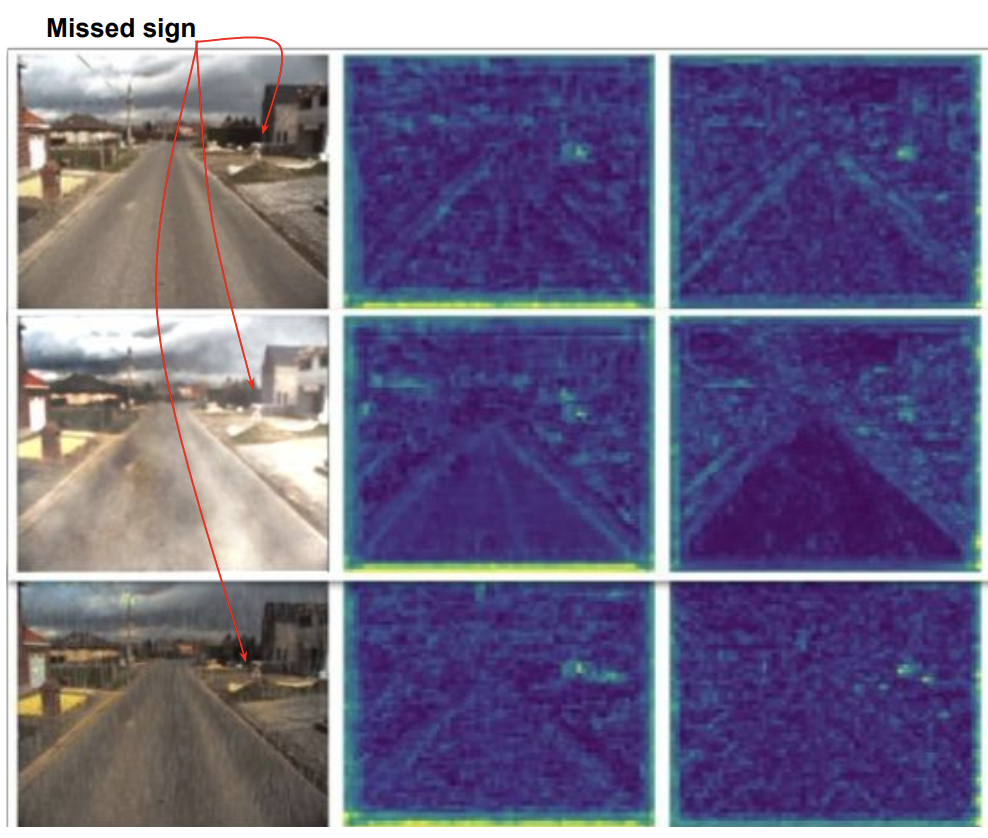

Did You Miss the Sign? A False Negative Alarm System for Traffic Sign Detectors In Proc. of IEEE International Conference on Intelligent Robots and Systems (IROS), 2019.

In this paper, we propose an approach to identify traffic signs that have been mistakenly discarded by the object detector. The proposed method raises an alarm when it discovers a failure by the object detector to detect a traffic sign. This approach can be useful to evaluate the performance of the detector during the deployment phase. We trained a single shot multi-box object detector to detect traffic signs and used its internal features to train a separate false negative detector (FND). During deployment, FND decides whether the traffic sign detector has missed a sign or not.

[arXiv]


-

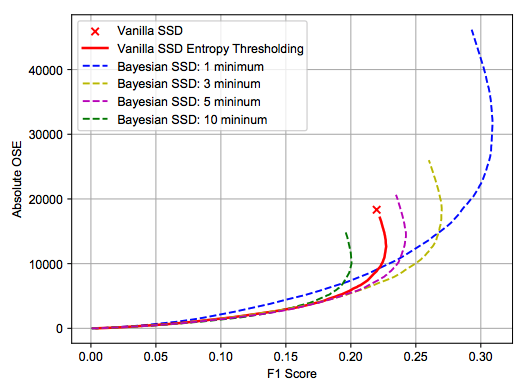

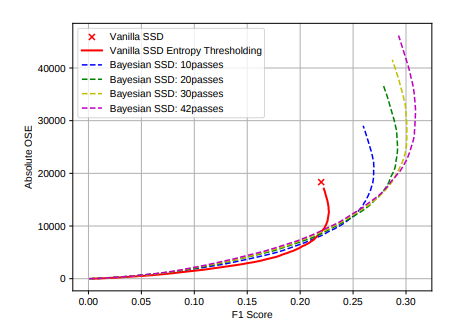

Dropout Sampling for Robust Object Detection in Open-Set Conditions In Proc. of IEEE International Conference on Robotics and Automation (ICRA), 2018.

Dropout Variational Inference, or Dropout Sampling, has been recently proposed as an approximation technique for Bayesian Deep Learning and evaluated for image classification and regression tasks. This paper investigates the utility of Dropout Sampling for object detection for the first time. We demonstrate how label uncertainty can be extracted from a state-of-the-art object detection system via Dropout Sampling. We show that this uncertainty can be utilized to increase object detection performance under the open-set conditions that are typically encountered in robotic vision. We evaluate this approach on a large synthetic dataset with 30,000 images, and a real-world dataset captured by a mobile robot in a versatile campus environment.
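The way label uncertainty can be derived from repeated stochastic forward passes is sketched below. The network itself is omitted: the score vectors are hand-written stand-ins for the outputs of several passes with dropout active, and the entropy measure, `max_entropy` value, and function names are assumptions made for illustration rather than the paper's exact procedure.

```python
import math


def mean_probs(samples):
    """Average the class-score vectors from several stochastic passes."""
    n = len(samples)
    return [sum(s[k] for s in samples) / n for k in range(len(samples[0]))]


def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def accept_detection(samples, max_entropy=0.5):
    """Return (label, averaged scores); label is None when too uncertain."""
    avg = mean_probs(samples)
    label = max(range(len(avg)), key=avg.__getitem__)
    if entropy(avg) > max_entropy:
        return None, avg
    return label, avg


# Passes that agree give low entropy; passes that disagree give high entropy.
consistent = [[0.95, 0.03, 0.02]] * 3
conflicting = [[0.9, 0.05, 0.05], [0.05, 0.9, 0.05], [0.05, 0.05, 0.9]]
print(accept_detection(consistent)[0])   # label kept
print(accept_detection(conflicting)[0])  # rejected as uncertain
```

Each pass in the conflicting case looks confident on its own; the disagreement only becomes visible once the passes are averaged, which is what allows unknown objects to be rejected.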


-

Dropout Variational Inference Improves Object Detection in Open-Set Conditions In Proc. of NIPS Workshop on Bayesian Deep Learning, 2017.

One of the biggest current challenges of visual object detection is reliable operation in open-set conditions. One way to handle the open-set problem is to utilize the uncertainty of the model to reject predictions with low probability. Bayesian Neural Networks (BNNs), with variational inference commonly used as an approximation, are an established approach to estimating model uncertainty. Here we extend the concept of Dropout sampling to object detection for the first time. We evaluate Bayesian object detection on a large synthetic and a real-world dataset and show how the estimated label uncertainty can be utilized to increase object detection performance under open-set conditions.


-

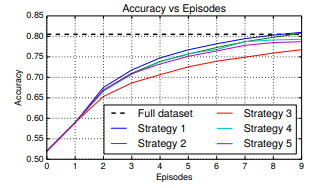

Episode-Based Active Learning with Bayesian Neural Networks In Workshop on Deep Learning for Robotic Vision, Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

We investigate different strategies for active learning with Bayesian deep neural networks. We focus our analysis on scenarios where new, unlabeled data is obtained episodically, such as commonly encountered in mobile robotics applications. An evaluation of different strategies for acquisition, updating, and final training on the CIFAR-10 dataset shows that incremental network updates with final training on the accumulated acquisition set are essential for best performance, while limiting the amount of required human labeling labor.
